Panasonic Q&A Part I: Why no long-telephoto lenses, and Depth From Defocus details

posted Thursday, June 5, 2014 at 7:45 AM EDT

I met with a number of camera company executives at the CP+ show in Yokohama, Japan this past February, and have already posted transcripts of a number of those interviews. (In the rough order in which I've posted them: Nikon, Canon, Ricoh / Pentax, Fujifilm, Sigma, Foveon, and an earlier Panasonic Q&A about onion-ring bokeh.) The press of everyday work once back home has meant that I still haven't managed to finish posting some of the longer exchanges, though.

I'll be trying to get some more of my material from that trip onto the site over the next few weeks, but here's a first down payment on what's still owing: The first part of a two-part, extensive discussion I had with two Panasonic representatives, Mr. Yoshiyuki Inoue and Mr. Michiharu Uematsu. Inoue-san is a very senior product planner for Panasonic, and Uematsu-san plays a very active role in communicating about Panasonic's new products with the press and Panasonic operating companies worldwide, as well as carrying product feedback to the planners and engineers in Japan.

I've known both gentlemen for a number of years now; regular readers will recognize both their names from previous interviews. The three of us found a little café far from the show floor, and spent some time discussing Panasonic's products and technology. "Some time" is a bit of an understatement, though; it ended up being an hour-and-a-half conversation covering a wide range of topics, including a number of reader questions and suggestions for firmware changes.

At almost 9,000 words, the full interview is way too long for a single news item, which is why I've split it into two parts -- this one, and a second part which will follow in a few days. We started off talking about one of my favorite cameras, the Panasonic GM1. In the transcript below, I'm "DE", and the other two gentlemen are identified by their last names.

How is the Panasonic GM1 doing in the market?

Dave Etchells/Imaging Resource: We gave the Panasonic GM1 an award in 2013, as the best compact interchangeable-lens camera. There really wasn't anything else in that category then, and it's been extremely popular with our readers. How has it been received by the market in general? Is it meeting your expectations?

Yoshiyuki Inoue/Panasonic: The GM1? In the US or worldwide?

DE: I guess both in the US and worldwide.

Inoue: As you can probably understand, it's very popular in East Asia. It's an interesting topic: In France, it's very popular, I think because it's very fashionable. But that's only France. In Germany, it's not so popular.

Michiharu Uematsu/Panasonic: Actually, almost all the European countries. In Italy it's very well accepted. In France, though, even before the GM1, the GF-series was very well accepted. This is a very special phenomenon in France. For example, in Germany, they prefer the G or GH. And also in the UK, just the G.

DE: Very interesting, so in most of the Euro zone, they prefer the bigger cameras?

Uematsu: Yes, the French prefer a unique product, and also smaller.

DE: How about the US, how is it being accepted there?

Inoue: So-so. [laughs] But it's accepted as a second camera for professionals.

DE: That's interesting because, even though I'm an enthusiast photographer, if I was buying a new camera right now, that's probably the one that I'd buy. Because it's so compact, and also because the Micro Four Thirds system has a lot of good lenses available for it. I find it appealing because it actually will fit in my pocket. I'm a little surprised that it's not more popular everywhere.

Roadmap for super-compact lenses for the GM1?

DE: The GM1 has an extremely compact kit lens. Can you say anything about other super-compact lenses? At CES, you had mockups of a couple of very compact lenses under glass that I don't think have been released yet.

Uematsu: The 15mm...

DE: I think there was a very small zoom also.

Inoue: ...and the 35-100mm. It's almost the same size as our current 14-42mm.

DE: Can you say anything about when those might come out, or is it still unspecified?

Inoue: We cannot say. [laughs]

DE: So there's no official roadmap with timing for these yet.

Inoue: No, but maybe coming soon.

[Ed. Note: The 15mm was officially released on March 24. The compact 35-100mm -- distinct from the 35-100mm f/2.8 that's been on the market for a while -- still hasn't been released. From the mockup I saw at CES though, as shown in the photo above, it's impressively small.]

Reader question: What's up with long telephotos and a long tele-zoom lens?

DE: Also on the topic of lenses, a reader said that he really likes the Micro Four Thirds system very much, but is wondering about the lens roadmap. I don't know what you've published publicly, but are there any plans for a macro lens -- say a 70mm, 80mm, or 100mm? Or what about a really long tele-zoom? I'm not sure what you can talk about, but is there any indication you could give in those areas?

Inoue: Hmm, very difficult to say... We are still considering the lens roadmap here [in Japan], and worldwide people's opinion. At this time, we have already pre-released info about the 15mm and 35-100mm, and we have another idea for the lens roadmap, but we can't disclose it at this time, I'm sorry. But we've decided that a long telephoto lens has already been canceled, unfortunately.

DE: So you had been considering it, but decided there wasn't enough market for it?

Inoue: Yes, we had been considering it, but looking at the overall situation, what it would take for development and what sales there would be, we decided to cancel the long telephoto zoom. We had already released [information about the] 150mm, but for the zoom, we are very sorry, but this was our decision.

DE: This is a closely related question; it might be what you were just talking about. A reader asked why you removed the 150mm f/2.8 from your roadmap. Are you shifting your focus to shorter focal lengths and GM1 lenses?

Inoue: Yes. Before announcing the GM1, we had to prepare lenses for the GM design, so we concentrated our lens development on that side. On the other side, though, we have to consider the GH and some somewhat bigger lenses. Also, Olympus has released some good lenses, so we have to compete. That is one new important point! [laughs]

DE: That's interesting, so you are having to devote resources to making small lenses for the GM1, but also need to develop more video-capable lenses for the GH4.

Inoue: Yes, yes. One example of our concentration is that the 42.5mm Leica lens is capable of video shooting. Although it is a Leica lens and the former Leica lenses were not video-capable, this one should be able to be used for video.

DE: So when you developed the Nocticron, you had the GH4 in mind for it?

Inoue: Yes, yes, that was a very important part of it.

DE: The very bright aperture, the very shallow depth of field is very important for videographers...

Inoue: Yes, exactly. When Uematsu-san visited your office, he mentioned DFD technology is very, very important not only for still shooting, but also for movie recording. With DFD, autofocus can become very, very smooth for video shooting. This is very important for our GH series. [Ed. Note: DFD stands for "Depth From Defocus", a focusing technology that Panasonic introduced with the GH4. In a nutshell, it uses detailed understanding of the lens' bokeh characteristics to determine how much the subject is out of focus, and in which direction. Previously, only phase-detect AF systems could provide this sort of quantitative focus information.]

How does Depth From Defocus work?

DE: Actually, that leads right into some questions I had about DFD. Yes, when Uematsu-san visited us, one of the points he made for DFD was that it would show less hunting, or at least that the GH4 would show less hunting. Is DFD part of the reduced hunting with the GH4? Could you explain a little bit how DFD reduces hunting?

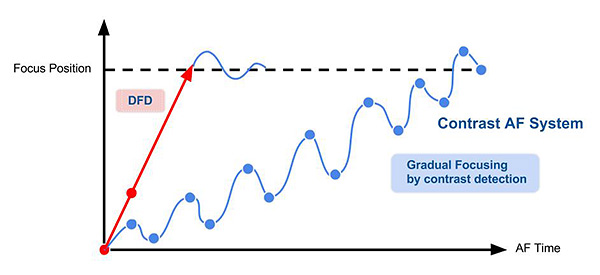

Uematsu: In contrast-detect AF, in order to determine the best focus point, the lens has to scan over the peak focus point. After it's passed the peak point, then the camera knows where the peak focus was. However, with DFD, of course it has very small hunting, but it's possible to create a graph or prediction of the focus point, and move quickly there. Then we use contrast detect to make small adjustments, but it's only across a very small distance. The hunting is very, very small.
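[Ed. Note: To make the hunting Uematsu-san describes a little more concrete, here's a minimal Python sketch of a generic hill-climbing contrast-detect loop. It is not Panasonic's algorithm: the contrast curve (`contrast_at`), the step size, and the lens-position units are all hypothetical. The point it illustrates is that the loop can only recognize the peak after it has stepped past it and seen contrast fall, so it always overshoots by at least one step and has to come back.]

```python
import math


def contrast_at(lens_pos: float, peak: float = 50.0, width: float = 8.0) -> float:
    """Hypothetical contrast curve: a smooth bump that is highest at `peak`."""
    return math.exp(-((lens_pos - peak) / width) ** 2)


def contrast_detect_af(start: float, step: float = 2.0, max_steps: int = 200):
    """Generic hill-climbing contrast AF (illustrative only).

    Returns (final_position, path), where path lists every lens position visited.
    """
    path = [start]
    pos, best = start, contrast_at(start)
    direction = 1.0
    for _ in range(max_steps):
        nxt = pos + direction * step
        c = contrast_at(nxt)
        path.append(nxt)
        if c > best:
            # Contrast still rising: keep going the same way.
            pos, best = nxt, c
            continue
        if len(path) == 2:
            # The very first move made things worse: the peak is the other way.
            direction = -direction
            continue
        # Contrast fell after rising (or after reversing): the lens has passed
        # the peak, so step back to the best position seen.
        path.append(pos)
        return pos, path
    return pos, path


final, path = contrast_detect_af(20.0)
print(final, path[-4:])  # 50.0 [48.0, 50.0, 52.0, 50.0]
```

The tail of the path (48, 50, 52, back to 50) is the overshoot-and-return that shows up on a real camera as visible hunting.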

DE: Does DFD help the camera know that it's very close to the best focus point, so it's less likely to or doesn't need to step past it, or makes it able to distinguish a very small amount of focus shift?

Uematsu: Actually, using DFD, it's possible to get very quickly to the rough distance, the camera can make a very fast movement to get very close, and from there it uses contrast AF. Almost the same as phase differential plus contrast.

DE: I understand, but when it gets close to the focus point, the DFD system doesn't have to move as much to determine exactly where the peak is, or is it pretty much conventional contrast-detect at that point?

Inoue: For example, if we're calculating distance using the bokeh and the lens profile, and it for instance comes out to be two meters, the camera could move very quickly to maybe two point something. Then only the last two centimeters or something would be adjusted with contrast detect.

DE: Ah, so that's about the order of magnitude, then: If the camera detects that the subject is two meters away, the amount of fine-tuning required after the rapid motion might only be from 2.0 to 2.1 or 1.9, so it's hunting over a much smaller range.

Inoue: Yes. This behavior, with the AF motion, is almost the same as the current phase detection error, it's almost the same. [Ed. Note: I think he was saying that the accuracy of the purely DFD part of the cycle is roughly equivalent to what typical phase-detect systems currently achieve.]
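[Ed. Note: Here's a similarly hypothetical sketch of the coarse-then-fine idea Inoue-san describes: a DFD-style distance estimate lets the lens jump close to focus in one move, and contrast detect then only has to search a small window around that estimate. For simplicity, the fine stage sweeps its window exhaustively rather than hill-climbing, the DFD estimate is just passed in as a number, and all positions and window sizes are made up for illustration.]

```python
import math

PEAK = 50.0  # hypothetical in-focus lens position (arbitrary units)


def contrast_at(pos: float) -> float:
    """Hypothetical contrast curve, highest at PEAK."""
    return math.exp(-((pos - PEAK) / 8.0) ** 2)


def fine_search(center: float, half_window: float, step: float):
    """Contrast-detect search limited to center +/- half_window.

    Returns the best position found and how many positions were evaluated.
    """
    n = int(round(2 * half_window / step))
    positions = [center - half_window + i * step for i in range(n + 1)]
    best = max(positions, key=contrast_at)
    return best, len(positions)


def dfd_then_contrast(dfd_estimate: float, half_window: float = 2.0, step: float = 0.5):
    """One fast move to the (assumed) DFD estimate, then a narrow contrast search."""
    best, evaluated = fine_search(dfd_estimate, half_window, step)
    return best, 1 + evaluated


# Worst case with no prior knowledge: sweep the whole 0..100 range.
print(fine_search(center=50.0, half_window=50.0, step=0.5))  # (50.0, 201)
# DFD says "about 51", so only 49..53 needs checking.
print(dfd_then_contrast(51.0))                                # (50.0, 10)
```

Both approaches land on the same position; the difference is how much lens travel has to be examined with contrast detect to get there.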

Uematsu: Conventionally, if lenses are focused here, with contrast detect, they're moving like this, and after peak point, the camera knows that it's gone past the peak point, it moves like this, in the opposite direction, in this portion, it makes some hunting. However, with DFD, the camera knows already it's around here, between two to three meters, and nearby the final target, the camera uses contrast detect.

DE: So it doesn't overshoot as much?

Uematsu: Yes, of course it has some overshoot, but very, very small.

DE: So when the lens is in focus, regular contrast detect would have to keep hunting a little bit, but with DFD, I guess it knows it's already at the best point of best focus? Or, if the subject moves a little, and the camera sees the contrast drop a little, does it maybe at that point... I'm trying to see how DFD helps with tracking or maintaining focus.

Inoue: In contrast AF, for example, when in AF continuous mode, there is some wobbling [hunting], but in this system, there is no need for wobbling. If the subject moves, we can understand with the bokeh level if it's far or near.

DE: Ah - so you can tell from the bokeh whether the subject has moved far or near?

Both: Yes, yes.

DE: Ah, that's interesting - because the bokeh is different on both sides of best focus.

Inoue: Yes - it's very, very easy, if the subject moves, it's very easy to understand which direction the subject has moved.

DE: That's very interesting, because that's exactly what phase-detect does.

Inoue: Yes, yes. Almost the same. But because it needs a lens profile for the bokeh level, this is only for our G-lenses, that are in the database inside the body. After that, we will build the data into the lenses.

DE: Yes, so new lenses will have their bokeh information in their firmware.

Inoue: Yes, so it will not be available for other Micro Four Thirds lenses; lenses from Olympus or Sigma will not be able to use the DFD technology.

DE: At some point will you open up that specification, or is that something that will stay proprietary for Panasonic?

Inoue: The database and the structure of the data is very, very important. So Olympus does not have such data in their lens, so we can't understand about the bokeh level.

DE: I was wondering whether you would ever tell them what that data structure is. It sounds like not, though -- that's something you'll keep as a unique Panasonic advantage.

Both: Yes.

Uematsu: Just in focus and bokeh, edges of course. However, if we defocus some we have some bokeh, like this. And the lens profile, actually, if the subject is located at two meters, bokeh is zero, then one meter, four meters, same bokeh will be got, then just using two images, it's possible to get the direction. For example, if camera gets this kind of bokeh, in that case it knows it should be one meter or four meters. Compare with the focusing, a little bit it moves, we have another ... it means this portion, too, it gets this direction or this direction, focus should be moved. Then in this area, we use contrast AF to make the precise point.

DE: So basically, in continuous focus, the camera is continuously looking at the subject, and taking successive pictures of the subject, and by seeing the nature of the changes between successive images, it can tell whether it's coming closer or going further away.

Uematsu: Yes.
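[Ed. Note: The two-image direction trick Uematsu-san sketched can be illustrated with a simple geometric (thin-lens) blur model. This is only a stand-in for Panasonic's measured lens profiles, built on our own assumptions: the 25mm f/2 lens, the distances, and the function names are all hypothetical. A single blur measurement maps to two candidate distances, one nearer and one farther than the focus point; nudging focus and measuring again shows which candidate is real. In this idealized model the two candidates are symmetric in 1/distance rather than in meters, so with focus at 2m the ambiguous pair comes out as 1.5m and 3m rather than the 1m and 4m Uematsu-san used as a quick example.]

```python
import math


def blur_diameter(d: float, s: float, f: float = 0.025, n: float = 2.0) -> float:
    """Geometric blur-circle diameter (m) on the sensor for an object at d (m),
    with a thin lens of focal length f (m) and f-number n focused at s (m)."""
    aperture = f / n
    if math.isinf(d):
        return aperture * f / (s - f)  # limit as d -> infinity
    return aperture * f * abs(s - d) / (d * (s - f))


def candidate_distances(c: float, s: float, f: float = 0.025, n: float = 2.0):
    """Invert the blur model: one blur value maps to a nearer and a farther distance."""
    aperture = f / n
    u = c * (s - f) / (aperture * f * s)  # equals |1/d - 1/s|
    near = 1.0 / (1.0 / s + u)
    far_inv = 1.0 / s - u
    far = 1.0 / far_inv if far_inv > 0 else math.inf
    return near, far


def resolve_direction(c1, s1, c2, s2, f=0.025, n=2.0):
    """Use a second blur measurement, taken with focus at s2, to pick a candidate."""
    near, far = candidate_distances(c1, s1, f, n)
    err_near = abs(blur_diameter(near, s2, f, n) - c2)
    err_far = abs(blur_diameter(far, s2, f, n) - c2)
    if err_far < err_near:
        return far, "farther"
    return near, "nearer"


s1, s2 = 2.0, 2.2  # focus at 2m, then nudge focus to 2.2m
for true_d in (1.5, 3.0):
    c1 = blur_diameter(true_d, s1)
    c2 = blur_diameter(true_d, s2)
    print(true_d, candidate_distances(c1, s1), resolve_direction(c1, s1, c2, s2))
```

Subjects at 1.5m and 3m produce the same first blur value, so the first frame alone can't tell them apart; the second frame, taken after the small focus move, resolves it.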

DE: That's interesting! It must take a lot of processing, I'd think, because you have to look at a larger area around each point to be able to tell just what's happening.

Inoue: It's very well known; however, this is the first time it has been introduced in an actual product.

DE: So it's been well known how to do this, that people have understood for a long time that bokeh changes and is different from near to far, but this...

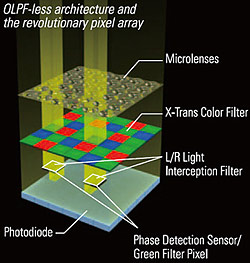

Inoue: This technology is one of the reasons why we didn't adopt phase detection on the sensor. We have already been studying it for the past four years.

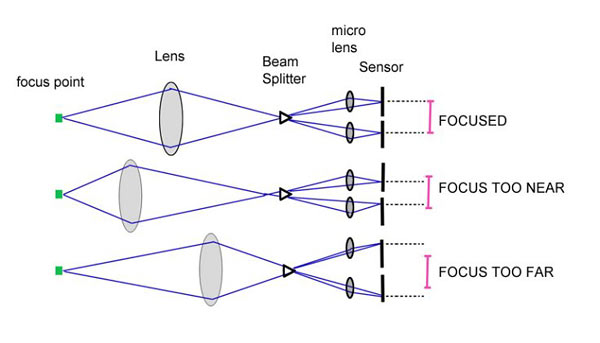

[Some background on how on-chip phase-detect AF works, for the discussion below: On-chip phase detect autofocus generally requires that some of the imager's pixels be "shaded", to only see light coming from either the left or right side of the lens. This mimics the action of the beam-splitter prism and separate detectors in a conventional phase-detect AF system. For those interested in the details, Roger Cicala of LensRentals.com has written an excellent, in-depth explanation of how autofocus systems work. For now, though, all you need to know is that phase-detect AF requires that at least some pixels only see light from one side of the lens or the other.

The problem, of course, is that if you shade a pixel so it only sees the light coming from half of the lens' light cone, it will receive only half as much light as unshaded ones. If some pixels are shaded and some are not, the camera's processor has to somehow compensate for the difference in light levels the two groups are seeing. This can take processing time and possibly increase noise or other artifacts. Canon has some proprietary technology that apparently avoids this problem in its Dual-Pixel AF system, first seen in the 70D, but as far as I know, all other manufacturers have to resort to shading pixels for their on-chip AF, and so incur the time and potential image quality penalties associated with this. Even Canon's approach may not be without tradeoffs, as many people found the 70D's high-ISO noise performance a little disappointing when the camera debuted.]
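[Ed. Note: A quick back-of-the-envelope sketch of why half-shaded pixels cost more than just brightness. It considers photon shot noise only (which follows Poisson statistics, so the noise is the square root of the photon count) and ignores read noise and everything else; the 10,000-photon figure is an arbitrary example. Doubling a shaded pixel's output restores its brightness, but because gain multiplies signal and noise alike, its signal-to-noise ratio stays about 29% lower than that of its unshaded neighbors.]

```python
import math


def shot_noise_snr(photons: float) -> float:
    """Poisson shot noise: noise = sqrt(N), so SNR = N / sqrt(N) = sqrt(N)."""
    return math.sqrt(photons)


def shaded_pixel_penalty(photons_unshaded: float, open_fraction: float = 0.5):
    """Return (gain needed to match unshaded pixels, SNR relative to unshaded).

    open_fraction is the share of the lens' light cone the pixel still sees;
    0.5 models a pixel shaded to see only the left or right half.
    """
    photons_shaded = photons_unshaded * open_fraction
    gain = 1.0 / open_fraction
    # Gain scales signal and noise equally, so it does not change the SNR ratio.
    snr_ratio = shot_noise_snr(photons_shaded) / shot_noise_snr(photons_unshaded)
    return gain, snr_ratio


gain, ratio = shaded_pixel_penalty(10_000)
print(gain, round(ratio, 3))  # 2.0 0.707
```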

Downsides of on-chip phase-detect AF, especially for 4K video

DE: So you've been developing DFD for four years now, and seeing that as a possibility, you realized that you didn't need to do phase-detect on-sensor.

Inoue: Yes, yes. One of the disadvantages of phase-detect on the sensor is you have at least some bad pixels.

Uematsu: At least some inferior pixels.

DE: Oh, yes, meaning that if you have some pixels that are shaded to provide the phase-detect function...

Uematsu: Yes. Of course, with compensation they are very hard to find. However, Fuji says they have more than 100,000 pixels that they use for autofocus. That's nearly 1% of the total pixels.

DE: 1% of the pixels, and they have to do compensation of some sort, for all those pixels.

Uematsu: Maybe 0.5% of pixels.

Inoue: And this one has 4K video output, so we cannot ignore that many pixels on the sensor's surface.

DE: And with 4K data going through, you don't have time to swap-out or compensate for individual pixels with lower output.

Inoue: Yes, it's a very very complicated structure.
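[Ed. Note: Some rough arithmetic on the numbers above. The 100,000-pixel figure is as Uematsu-san recalled it; the total sensor resolution of 16.3 megapixels is our assumption, and the 4K calculation is purely hypothetical, since it imagines the same density of AF pixels across a UHD (3840 x 2160) readout at 30 frames per second. On those assumptions, the fraction comes out a bit over half a percent, which fits Uematsu-san's "maybe 0.5%" correction, and the hypothetical video case would mean well over a million pixels to patch up every second.]

```python
AF_PIXELS = 100_000          # as recalled in the interview ("more than 100,000")
SENSOR_PIXELS = 16_300_000   # assumption: a roughly 16-megapixel sensor


def af_pixel_fraction(af_pixels: int, total_pixels: int) -> float:
    """Share of the sensor given over to phase-detect pixels."""
    return af_pixels / total_pixels


def corrections_per_second(fraction: float, width: int = 3840,
                           height: int = 2160, fps: float = 30.0) -> float:
    """Hypothetical: AF pixels to correct per second if the same density
    applied across a UHD readout at the given frame rate."""
    return width * height * fraction * fps


frac = af_pixel_fraction(AF_PIXELS, SENSOR_PIXELS)
print(f"{frac:.2%}")  # 0.61%
print(f"{corrections_per_second(frac):,.0f} per second")
```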

DE: Yeah, I guess that's one thing about Canon's Dual-Pixel AF: all the pixels have two halves, so they don't have to compensate; they can just read the halves out separately. I've noticed that it's not terribly fast, though. If you're using the on-chip phase detect for still pictures, there's maybe a half second of lag to focus and take the picture. What is the spec for the GH4? Do you have one for how fast it can achieve focus, or how fast it can recognize that it's already in focus?

Inoue: Basically for these, 0.07 second, but this is under development, so it may be faster in production.

DFD isn't just about speed, it's about a higher percentage of focus success as well

DE: And this is basically how fast the camera can determine it's in focus, not if it has to move the lens a long way. If it's basically in focus, that's how quickly it can figure out "Ah, I'm in focus."

Uematsu: It's not just speed. Actually, the point is that the focus failure rate is much, much lower than before. With contrast AF, the speed depends on contrast and also on the lighting conditions, and sometimes it has a failure. But with DFD, the failure rate will be much, much lower than before.

DE: That's interesting. Of course, it depends entirely on the situation, but I wonder if there's any way to quantify that; is it half as often, a third as often? Can you say?

Uematsu: We don't have exact data.

DE: So you don't have an exact number for that, but you've observed that under... Like if you have a black-and-white pattern under bright light, you can focus all the time, but maybe in more challenging situations, with lower contrast and dimmer lighting, you have far fewer focus failures.

Uematsu: Maybe you have more know-how, for performing such an evaluation! [everyone laughs]

Inoue: I know for Sony and their A6000, they mentioned a very high AF speed, 0.06 second. Our first-announced data is only 0.07 second, so we have to continue to develop until the official release.

"Scoping only the AF speed is nonsense for an actual photographer"

Uematsu: Actually, it's not just the focusing, but after detection, we have to do the shutter release, we have to close and open the shutter again, it has more delay.

DE: Ah, so there's more delay after it determines focus.

Uematsu: Yes, just discussing AF time isn't so meaningful, I think. [Ed. Note: This agrees with our own findings in the lab. A camera may be able to determine focus in 0.06 seconds, but it may not be able to actually capture an image in twice that time.]

Inoue: Scoping only the AF speed is nonsense for an actual photographer.

DE: Focusing just on the AF speed doesn't really mean that much to real-world photographers. The photographer can't measure that or even be aware of that, all he or she sees is the total shutter lag. It seems like this is another specsmanship area like megapixel count, where the numbers may not really be meaningful to the end user. I'll be interested to test that and see what we find.

Will DFD come to lower-end camera models as well?

DE: Because it requires more processing, will we see the DFD technology that was developed for the GH4 brought to lower-end models as well, going forward? Will the GF-series or the G-series have that capability, or does it just require too much processing power?

Inoue: This one I think is maybe adopted for the future product. Because it depends on the power of the LSI. If they adopt the LSI from the GH4, it will be...

DE: If they use the same LSI, the same processor, lower-end models can have DFD ability. I'm wondering, is that processor more expensive, or does it take more power, such that it wouldn't fit in a cheaper or smaller camera?

Inoue: Not so, maybe.

Uematsu: The point is, just before capturing an image, basically, the processor is not so busy. Once the shutter button is pressed, the capturing will start, and also the JPEG compression. That means very much processor work. However, just before, not so much work, actually.

DE: Ah, that's interesting. So even though it has this very sophisticated AF algorithm to run, that's very little processing compared to the JPEG compression and other work the CPU has to do to create the final images.

Both: Yes, yes.

Uematsu: At least for most still pictures. Technically, not only for the G-series, but also in compact cameras, it's possible to introduce this.

Inoue: Compact cameras are actually easier, because they don't have so many lenses to deal with. There's just one lens, so it's simpler.

DE: Ah, so this technology and focus speed and smoothness could very easily come to compact cameras as well. You said it's a matter of adopting the same LSI. From what you just said about processor load, though, it sounds like it's not so much a matter of having a much more powerful processor as of having a different architecture that supports DFD, in terms of how the internal components are arranged.

Inoue: Yes.

DE: Panasonic was really the leader in very fast contrast-detect AF from the beginning; you guys were doing 0.1 or 0.15 second when everyone else was doing 0.4 or something. [laughter]

Inoue: We didn't stop, we kept concentrating on AF speed, shortening the time lag.

Does DFD rely on CA to determine out-of-focus amount?

DE: I'm curious, but I'm not sure if you can answer this or not. One thing that would help you determine the amount and direction of defocus would be chromatic aberration, specifically the lens' longitudinal chromatic aberration. In one direction, you get a green fringe; in the other direction, you get a pink fringe. I guess that's part of the bokeh information you get about the lens.

Inoue: That's for demonstration. It's easier to understand if you think about the color. But in the actual camera processing, it's not the color.

DE: So that's an easy way to understand how it works, but that's not how the camera is actually doing it. Yeah, I figured that you'd try to design your lenses not to have much longitudinal chromatic aberration, but I asked that because of another technology I'm aware of.

They didn't tell me this, but I'm guessing that DxO's infinite depth of field technology is based in part on using longitudinal chromatic aberration to determine how much de-blurring is applied to different parts of the image. They were aiming that technology at smartphone makers. What they did tell me was that if you made a lens with the right kind of distortion, it made it easier for the algorithm to figure out how to process it.

In any event, it sounds like it might be somewhat the same principle here, in that you're using a detailed understanding of the lens' bokeh characteristics to determine how much out of focus it is.

Can DFD be brought to older models via a firmware update?

DE: And there was a reader question, I'm pretty sure the answer is no, but he's asking if it's possible to implement DFD in previous models by a firmware upgrade. It sounds, though, like it's dependent on having the LSI that's in the GH4. For instance, could you do a firmware upgrade to a G6, to give it DFD capability?

Inoue: No, it depends on the structure of the LSI. Unfortunately...

Uematsu: Actually, the processing engine is different. We use the Venus Engine IX in the GH4. Previously it was the Venus Engine VIII, or Venus Engine VII. It's much different.

DE: OK, so it was the Venus Engine VII, and now the GH4 is all the way up to version IX.

Uematsu: Yes, it's not only software, but the internal hardware also.

Inoue: It has to calculate very complicated data. For example, lens bokeh data. [laughs, apparently the lens bokeh data is particularly complex]

DE: Yes, I'm sure that requires a lot of processing. Even though you noted that the processor has more time before the shutter is pressed, that's still a lot of processing to have to deal with. Although, talking about the processor being busy, that makes me think that the processor must be very busy during video - but you can still do DFD processing then?

Inoue: Yeah.

DE: I guess it's probably a whole separate part of the processor, because the video compression is probably a whole dedicated circuit.

Uematsu: Actually, we have four cores.

DE: Four cores?

Uematsu: Yes, four cores in the [current-generation] Venus Engine.

DE: So even when you're running video, you're not using all the cores?

Inoue: Yes, when just processing still pictures, some of the cores don't work [aren't used], it's not so heavy a load.

DE: So the processing to get the image into a JPEG, you're limited by the card transfer speed, rather than the processing? Could you decrease the shot-to-shot time or the buffer emptying time by using more cores, or is there really no point in using more cores to process the JPEGs, because the card won't accept data any faster?

Uematsu: The details we don't know, only the software engineers.

End of Part I - Stay tuned for more

That's it for Part I, stay tuned for Part II in a few days' time. In Part II, we'll talk about the GH4's sensor speed and why it doesn't have the in-body image stabilization system that the GX7 had, why 4K video is important for still photographers as well as videographers, and take up a number of reader questions and comments -- including some reader suggestions for firmware changes that I think will actually be implemented at some point!

Meanwhile, feel free to ask questions about any of the above in the comments below, and I'll try to answer to the best of my ability. Let me know if I didn't explain something sufficiently, and I'll be happy to elaborate.