Say goodbye? Full-frame DSLRs gone by 2025, claims Dr. Rajiv Laroia of imaging startup Light

posted Monday, April 27, 2015 at 3:40 PM EST

Will full-frame DSLRs be a thing of the past, 10 years hence? "Never" is a perilous word when it comes to technology, but full-frame SLRs gone, really? Ten years certainly seems like a bit of a reach, but that's exactly what Dr. Rajiv Laroia, the Chief Technical Officer of imaging startup company Light predicted, when I spoke with him recently. (I'm sure our readers will weigh in with their own thoughts in the comments below ;-)

We told you about Light and their unique multi-lens/sensor camera technology a bit over a week ago; now we're back with all sorts of juicy technical details about how their technology works, fresh from an interview with Rajiv and his co-founder and CEO Dave Grannan. It was an interesting conversation, to say the least.

The photo industry has certainly seen its share of bold technical claims that fail to pan out, but Messrs. Laroia and Grannan have extremely impressive resumes, and well-proven track records of bringing cutting-edge technology to market in successful products. Dave Grannan has been around the mobile space for years, with previous stints at Nokia, Sprint, and Geoworks, and as CEO of Vlingo, whose speech recognition technology was behind the first Siri app and Samsung's S Voice offering. Then there's Rajiv Laroia. If you use a cell phone, you probably use technology Rajiv pioneered every day: His former company Flarion Technologies was the developer of 4G/LTE cell phone technology. (Yes, that LTE.) Qualcomm bought Flarion in 2006 for $600MM to acquire both their patents and their tech team, Rajiv foremost among them. These days, he can clearly afford any camera/lens he wants, but decided to revolutionize the photography business instead.

There are bound to be some technical and practical catches in there somewhere (see the bottom of this article for a couple that come to mind), but I have to admit being awfully impressed with what I heard. I don't know if Light's technology will be able to deliver the DSLR image quality they claim it's capable of, but it seems clear that it could at least shift the phone/camera divide a lot further in the phone direction.

Read on for a digest of our nearly 40-minute conversation:

Light's tech detailed: A Q&A with CTO Dr. Rajiv Laroia and CEO Dave Grannan

Dave Etchells/Imaging Resource: I'd be interested to hear the history of Light. How did you, in fact, start it? What led to it, how did you guys get involved, et cetera?

Co-founder and CEO, Light

Dave Grannan/Light: Sure. My background is primarily in the mobile and wireless industries, and handsets. So my last company was a startup called Vlingo that did speech recognition for mobile. You're familiar, I'm sure, with products like Siri. Before Siri was bought by Apple, that was our speech recognition they were using.

DE: Wow, really?

DG: Yeah. We kind of gave voice to that. And then before that, we also made products, like the Samsung Galaxy phones have a product called S Voice, which is their competitor to Siri, and that was built by my company, Vlingo.

Before that I was at Nokia, and then before that another startup in the mobile space. And so we sold that company; it was bought by Nuance back in 2012, and I spent a year kind of, you know, doing my indentured servitude as people do.

And as my handcuffs were coming off, I was working with one of my prior investors at Charles River Ventures, and kicking around some ideas. And they said, 'Well gee, Dave. We like you, and we like your ideas a lot, but we've got this other guy, and we like his ideas better.' That other guy was Rajiv, and it turns out that CRV was an investor in his last company as well.

That's when I met Rajiv, and he had a fantastic idea around photography that we decided to partner on. So Rajiv, maybe you could introduce yourself?

Co-founder and CTO, Light

Rajiv Laroia/Light: Yeah. My previous company was a company called Flarion Technologies. We started in 2000, developing what we called 3G wireless technology. Along the way, there was some "grade inflation", and we became 4G. So LTE technology was all developed by my company, my startup. We had all of the key intellectual property in LTE, we displaced all of Qualcomm's technology. So Qualcomm, which had controlling intellectual property in 3G, didn't have anything in 4G. We had all of the controlling intellectual property in 4G. So Qualcomm ended up acquiring Flarion for a lot of money, for our intellectual property basically.

After the acquisition, I spent five years at Qualcomm. And after I got out of Qualcomm... I am an amateur photographer. I have a bunch of L-series lenses from Canon, expensive cameras, and I spent a great deal of money to take a lot of pictures, until the phone cameras started to get better. Then I started taking fewer and fewer pictures with my big one, if you will, and now I only take occasional pictures [with my DSLR] when I [specifically] plan to take pictures. Most of my other pictures are on my iPhone now. But that was always very frustrating for me, that my really high quality lenses and cameras were not being used because they're very difficult to carry.

That's when I started looking into the possibility of producing cameras that had the same quality and features, but were much, much easier to carry, they were much smaller. That's when I started developing this technology, and along the way met Dave, and we started the company.

DE: Cool. And when was that? Dave was tied up with Nuance until 2013; how far back did you start formulating these ideas, Rajiv?

RL: Well, my company was acquired in 2006. I left Qualcomm in 2011. And basically in 2012, I started formulating these ideas.

DE: Okay. So it really was a photography focus from the beginning. In some of my questions, and the limited information I've seen on your tech, it seems like there are a number of other potential application areas, but your motivation initially was really coming from a photography point of view?

RL: Yes.

DE: Interesting. So you've just drawn back the veil a little bit now. Are you planning to manufacture your own devices, or is this something that would be licensed to cellphone companies? What do you see as your path from here?

DG: We're designing and manufacturing components for other customers. And then at some level, for some customers, it will be a complete intellectual property licensing kind of arrangement, because they might be of a size and scale where they would want to do their own manufacturing of components, and things like that.

As you probably saw, we're really starting with the photography angle, from the smartphone space. Rajiv's original problem was: I want to take a picture with what I have in my pocket, but I want that picture to be DSLR quality. His wife got upset 'cause he tore three or four pairs of pants trying to shove great big lenses in 'em. [laughs] And so he decided, rather than continuing to rip his pants, he would try to design something that could fit in a smartphone.

DE: Got it. Okay, so you're not planning on becoming the next Canon or Nikon, but this will be something that would hopefully be licensed to all sorts of folks like Samsung, and Apple, and whoever.

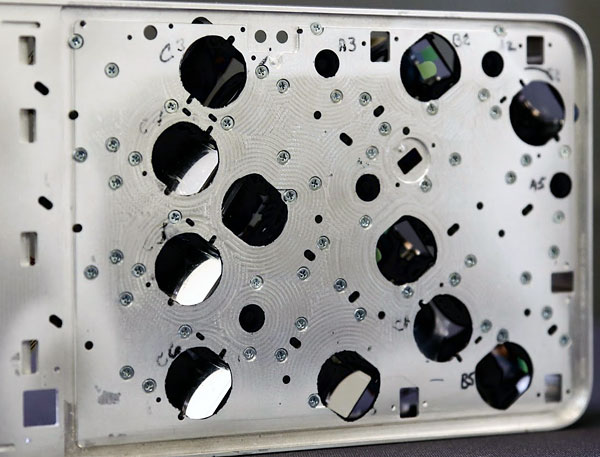

Now, it does go a little bit contrary to the current trend of getting things thinner and thinner and thinner. I think that what we saw was that you figure it'll be about as thick as a current cellphone in a case, but then of course, you'd still want to put a case on it, making it thicker again. Have you had any focus groups or interactions with potential consumers about the size? And what's your sense of where it fits in the marketplace as a result?

DG: Sure. So we do a lot of usability studying with some of the cosmetic models that you've seen in some of the pictures I think we've shared, trying to judge consumer feedback. The current thickness, among photography enthusiasts, is not a barrier at all. People appreciate the size of the device. We know we can fit into the XY footprint of something about the size of an iPhone 6 Plus. And as you rightly said, the thickness, the Z-height might be the iPhone 6 Plus with a case on it.

Among photography enthusiasts for a first generation product, we find a lot of appreciation for something that still is very portable. And what they care about, far more than four or five millimeters of thickness, is the ability to do true optical zoom, and have great bokeh and depth of field and low-light performance. To get that, that's kind of a no-brainer. Having said that, there are several technologies that will drive what we're doing to even thinner form factors over the next couple of generations of technology.

DE: Yeah. I understand that for the photography enthusiast, it's not the size of any interchangeable lens camera. It's a good bit smaller, and it's thinner than any of the high-end point and shoots, like the Sony RX-series, Canon G7X or the Panasonic LX100, that sort of thing. The XY footprint is about the size of an iPhone 6 Plus, so it's large in that dimension as well, but you can still fit it into a pocket.

DG: Yeah.

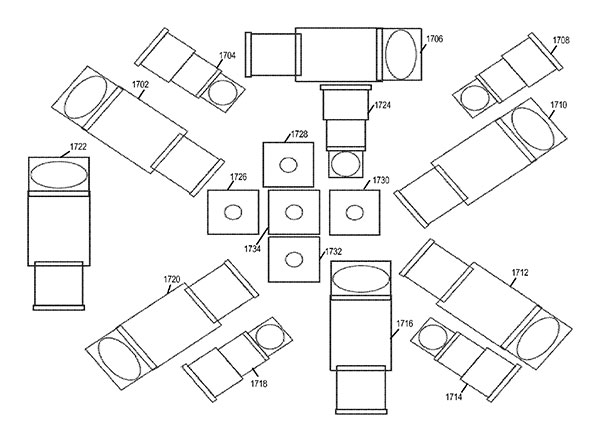

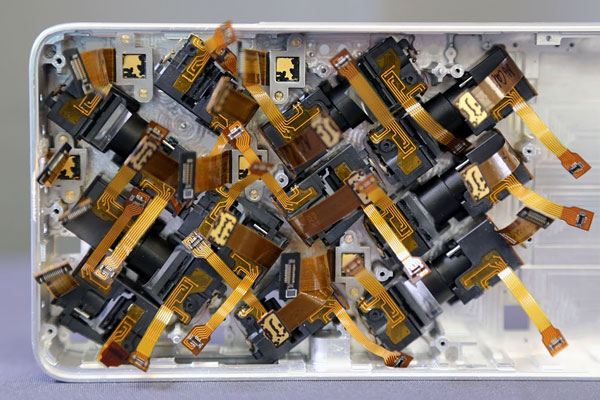

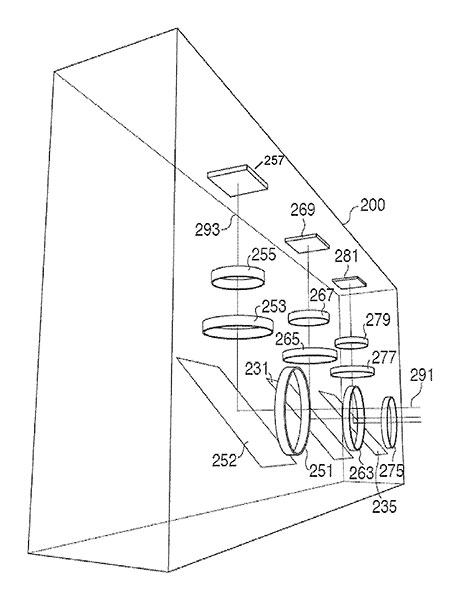

DE: The unit that you showed had -- was it 16 camera modules in it? Is that a fixed number, or is that variable depending on the application?

RL: It's a variable number. We picked that number for certain qualities that we were targeting, and a certain zoom range.

DE: Got it.

RL: But it's not a number cast in stone. For a different zoom range, you could have more or fewer modules in it.

DE: Got it, so it's scalable. And that particular unit, there's three focal lengths in that array. How many modules per focal length, because three doesn't divide evenly into 16?

RL: There are five modules at 35mm-equivalent focal length, five at 70mm-equivalent focal length, and six at 150mm-equivalent.

DE: Okay, interesting. And within those modules, how does the chip size compare to those in conventional cell phones? Is it roughly similar?

RL: It's the same chip [size] that conventional cell phones use. All of them use the same sensor, one that's also used in cell phones, so we can leverage the cost curve for those sensors based on the volume of cell phones.

DE: Right. So the design of the individual modules is reminiscent of some of the very thin conventional cameras, in that it's reflex optics. There's either a mirror or prism at one end, and then the barrel -- the length of the optics and sensor assembly -- is parallel to the front panel. And I saw that the mirror was moveable. I'm curious about what use you make of the moveable capability. Is it for alignment for stitching purposes, or for panning? Can it be used for IS?

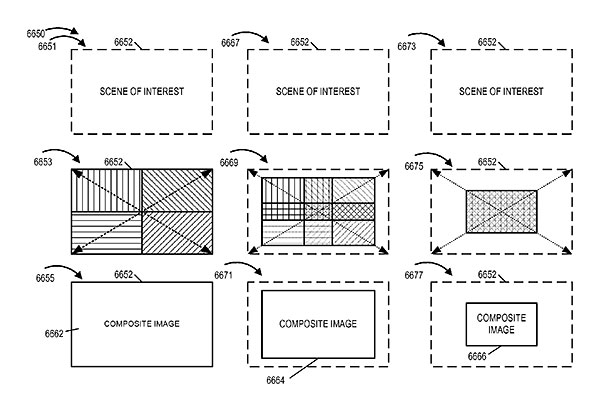

RL: Well, this is what it does: We move the mirrors so that when we take a picture, let's just say a picture at 35mm, we use the 35mm modules, but we also use the 70mm modules. And we move the mirror, so the 70mm modules -- four of them together -- capture the entire field of view of the 35mm module. So that we get four times the resolution.

DE: Got it. So you're taking pictures with both the 35mm and the 70mm at the same time, at the wide focal length?

RL: That's correct. So a 35mm picture is taken with 35mm modules and 70mm modules [together].

DE: Ah, very interesting.

RL: And a 70mm picture is taken with 70mm modules and 150mm modules.

DE: Got it. And then when you get out to 150mm, it's just the 150mm modules.

RL: That is correct.

[Ed. Note: I think Rajiv misunderstood me here; it's clear from his comments below that the 70mm modules fire for 150mm shots as well; they just don't increase the resolution beyond the 13 megapixels of the basic sensors.]

DE: At that point, do you use small shifts? Can you do sub-pixel shifts to increase resolution at that point, or...?

RL: No. Our sensor is a 13 megapixel, so... You know, if you have a 13-megapixel sensor, the 150mm pictures will be 13 megapixels.

DE: Yeah, got it. So 13-megapixels is actually plenty.

RL: But the pictures at 35mm and 70mm will be 52 megapixels.
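The 52-megapixel figure follows from simple geometry: doubling the focal length roughly halves the angle each pixel sees along each axis, so four 70mm tiles put twice as many pixels across each dimension of the 35mm field. A minimal sketch, assuming a 4160×3120 layout for the 13-megapixel sensor (a common arrangement, not one Light specified) and tiles that abut with no overlap:

```python
def combined_resolution(sensor_w, sensor_h, wide_f, tele_f):
    """Pixel dimensions of a wide-angle frame assembled from tele tiles.

    Assumes the tele modules exactly tile the wide field, so pixel density
    scales linearly with the focal-length ratio along each axis.
    """
    ratio = tele_f / wide_f
    return int(sensor_w * ratio), int(sensor_h * ratio)


w, h = combined_resolution(4160, 3120, wide_f=35, tele_f=70)
print(w, h, w * h / 1e6)  # 8320 6240 51.9168
```

The result, about 52 MP, matches the figure Rajiv quotes for 35mm shots.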

DE: Oh really? Okay, so they are actually that large. And that maybe gets into another question I had relative to the multiple focal lengths and zooming. I think Dave mentioned the camera having true optical zoom, or that might have been you, Rajiv. With the multiple focal lengths, do you basically use that higher resolution data to interpolate, to produce the effect of a smooth optical zoom?

RL: Actually not. So a discrete optical zoom is almost completely optical, because we have modules at 35mm, 70mm, and 150mm. But the way we do continuous zoom is actually different than how most cameras do continuous zoom. We do not move lens elements inside a lens with respect to each other to change the focal length. Our solution is more of a system solution where, for instance, for a 50mm frame we move the 70mm lenses so they always stay within the field of view, and all the light they capture contributes to making the picture. That's kind of hard to explain over the phone without a diagram. [laughs]

DE: Got it.

RL: But we do offer continuous zoom ability, from 35mm to 150mm, and it's an optical zoom. Not in the traditional sense, but it is not simply cropping the picture.

[Ed. Note: It sounds like what happens for a photo with 50mm-equivalent angular coverage is that the mirrors for the various modules in use shift the center of view for each module to provide maximum overlap within the 50mm coverage area. The 35mm modules probably don't need any shifts, because their fields of view would all fully cover the 50mm area anyway. The 70mm modules will be aimed such that the edges of their fields of view would be along the edges of the frame, and they'd overlap significantly in the central parts of the frame.]
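The edge-aligned aiming described in that Ed. Note can be put into rough numbers. This sketch is hypothetical: it works in 35mm-equivalent terms on a 36×24mm frame, treats each module as an ideal rectilinear camera, and simply subtracts half-angles to find how far off-axis a 70mm module would have to point so its outer edge lands on the edge of the requested frame.

```python
import math

FRAME_W_MM, FRAME_H_MM = 36.0, 24.0  # 35mm-equivalent frame (assumption)


def half_angle_deg(focal_mm, extent_mm):
    """Half the angle of view across one frame dimension, rectilinear lens."""
    return math.degrees(math.atan((extent_mm / 2) / focal_mm))


def edge_aligned_aim(frame_focal_mm, module_focal_mm):
    """Off-axis pointing (horizontal, vertical, in degrees) that places a
    narrower module's outer edge on the edge of the requested frame."""
    return tuple(
        half_angle_deg(frame_focal_mm, extent) - half_angle_deg(module_focal_mm, extent)
        for extent in (FRAME_W_MM, FRAME_H_MM)
    )


h50, v50 = edge_aligned_aim(50, 70)
print(round(h50, 2), round(v50, 2))  # roughly 5.4 and 3.8 degrees off-axis
```

Because the 70mm module's own horizontal half-angle (about 14.4°) is much larger than these offsets, the four aimed modules overlap heavily around the center of a 50mm frame, consistent with the Ed. Note. The sketch ignores the keystone distortion a tilted view introduces, which a real pipeline would have to correct.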

DE: Right. So in all of the exposures, is there some interval of time involved? Like with the 70mm modules at 35mm coverage, are you taking multiple shots with them to get full coverage of the 35mm field of view -- is that correct?

RL: That's right. So we divide the field of view into four quarters, and each one of the four 70mm lenses takes one quarter [of the field of view for the 35mm optics].

DE: Okay. So there's no sequence of exposures, it's simultaneous. Got it.

RL: They all fire in tandem. All the pictures at the same time.

DE: So when you're shooting at 35mm, you've got all of the 35mm modules [taking part in the exposure] as well. And so they're also firing -- is that basically helping you reduce the noise levels?

RL: Absolutely. So for any given picture, 10 modules fire out of the 16, depending on what zoom level you are at. For 35mm pictures, five 35mm will fire, and five 70mm will fire.

DE: Okay, and even at the 150mm end, are the 70's firing there as well?

RL: Yes.

[Ed. Note: While they don't contribute to increased resolution for 150mm shots, the 70mm modules help with image noise and subject geometry.]

DE: Ah, interesting.

RL: 10 of them fire.

[Ed. Note: Interesting; there are six 150mm modules though; I'm not sure what the extra one does when shooting at 150mm.]

DE: Got it. So again, even though the resolution is lower, that's going to help you with noise levels at the high end.

RL: Absolutely. Noise is distributed across spatial frequencies; at the lower frequencies, we can still reduce noise by firing the 70's.

DE: Yeah. Very interesting. I guess one question in my mind is how you achieve the alignment. Is there some sort of a closed-loop process involving the multiple mirrors to get things in close alignment? Or maybe there's an alignment using the mirrors, and there's a digital correlation that's performed? How do you achieve alignment between the separate modules?

RL: That's a good question. So if you see the four quarters of a 35mm field of view picture that are taken with 70mm lenses, they are from different entrance pupils, so different perspectives.

DE: Correct.

RL: Simply putting them together is not going to result in an undistorted picture.

DE: Right, that was actually one of my next questions.

RL: We also have the five 35mm pictures that help us define the geometry of the scene, capture the depth of objects, and stuff like that. With that information, we can combine the 70mm pictures into a 52-megapixel picture. We do perspective correction, if you will.

DE: So the purpose of using the lower focal length modules is not just reducing noise, but it's also providing additional geometry information.

RL: That's right, for perspective correction, yes.

DE: Got it. It seems to me a little bit tricky, and I guess it's a general issue any time you've got more than one entrance pupil, but there's got to be some tricky things going on with occlusion within the subject, you know, parts that are visible to one module but not to the others. Is that something you actually have to deal with?

RL: Yes and no. We have tied many cameras [together], so we have pictures from various perspectives. If you have to change the picture, and generate a perspective that is in between the cameras, you have to deal with occlusion. If you always generate the final image from a perspective that you captured, then you don't have to deal with occlusion so much.

DE: Got it, got it. So...

RL: So let's say there's a central camera from which you capture the image, and you want that perspective in the final image; then you don't have to deal with occlusion.

DE: Got it.

RL: But if you change the perspective to a point in between the cameras, then you have to deal with occlusion.

DE: I see. So you can kind of use one camera as the master, and based on what it can see, you're just taking contributions from the other cameras.

RL: Absolutely.

DE: Ah, very, very interesting. So the deflection that the tiltable mirrors produce -- I don't think I have a good picture of that, because the other thing that's in my mind is that any kind of manufacturing process, if you're trying to get four or five 150mm lenses precisely collimated, that's got to be virtually impossible. So I'm guessing that the tiltable mirrors are part of compensating for manufacturing differences. But how much actual deflection of the angle of view do the mirrors produce? Or what are they capable of producing?

RL: Well actually it's a very small deflection that they need to produce, and it doesn't have to be very accurate. If you think about it, the normal position of the mirrors is so that the 70mm pictures are centered in the frame of the 35mm picture, right? But all you have to do is move it from the center to one of the quadrants, right? So it's a very small deflection. And the second thing is that the accuracy required is not very high because you can always leave a little bit of an overlap, so that if the mirror is slightly off, it does not really matter.

DE: Got it. So when you assemble the final image, do you actually crop in very slightly? There's some edge pixels that may or may not be aligned, so you're taking the ones you know are good?

RL: Exactly, yes.
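The overlap-and-trim idea can be sketched in a few lines. This is a simplified, hypothetical version: it assumes the four tiles have already been registered and perspective-corrected (the correction Rajiv describes above using the 35mm modules), and that each tile deliberately extends `overlap` pixels past the frame's center lines. Trimming that redundant band means a slightly mis-aimed mirror only changes which duplicate pixels get discarded.

```python
import numpy as np


def assemble_quadrants(tl, tr, bl, br, overlap):
    """Stitch four registered, equally sized tiles into one frame.

    Each tile covers its own quadrant plus `overlap` extra rows and columns
    reaching past the frame's center lines; those shared bands are trimmed
    so the seams meet exactly. A production pipeline would blend the
    overlap rather than simply discard it.
    """
    h, w = tl.shape[:2]
    qh, qw = h - overlap, w - overlap  # size of each tile's own quadrant
    top = np.hstack([tl[:qh, :qw], tr[:qh, overlap:]])
    bottom = np.hstack([bl[overlap:, :qw], br[overlap:, overlap:]])
    return np.vstack([top, bottom])


# Usage: cut an image into overlapping quadrants, then put it back together.
full = np.arange(20 * 24).reshape(20, 24)
o, hh, hw = 3, 10, 12
tiles = (full[:hh + o, :hw + o], full[:hh + o, hw - o:],
         full[hh - o:, :hw + o], full[hh - o:, hw - o:])
print(np.array_equal(assemble_quadrants(*tiles, overlap=o), full))  # True
```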

DE: Do you have any kind of a number for just how much the noise level is improved in the final images, over the individual modules? Is it the square root of 10, basically, because there are 10 cameras involved?

RL: If you take ten pictures in a naive way of doing things, you're collecting ten times more energy for one picture. But we actually do better than that, because we don't have to expose all the pictures the same amount.
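Dave's square-root-of-10 guess is the textbook result for averaging independent noise, and Rajiv's "ten times more energy" is the same statement in exposure terms: ten times the light is log2(10), about 3.3 stops. A quick simulation, assuming ten perfectly registered, identically exposed frames with independent Gaussian noise (an idealization; real sensor noise is partly signal-dependent):

```python
import math

import numpy as np


def averaged_noise(n_frames, sigma=1.0, n_pixels=200_000, seed=0):
    """Standard deviation of the per-pixel mean of n independent noisy frames."""
    rng = np.random.default_rng(seed)
    frames = rng.normal(0.0, sigma, size=(n_frames, n_pixels))
    return frames.mean(axis=0).std()


improvement = averaged_noise(1) / averaged_noise(10)
print(improvement, math.sqrt(10))  # the two should agree closely (about 3.16)
print(math.log2(10))               # 10x the light is about 3.32 stops
```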

DE: Oh!

RL: Normally you determine exposure by making sure that your pixels don't saturate, right?

DE: Yes.

RL: But what we can do is, since we have redundant information, we have nearly the same pictures from multiple sources, for a few of them we can over-expose them. So we can let the highlights blow out. We capture the dark areas at much higher SNR [signal to noise ratio], but the highlight information is available redundantly in other pictures, and anyway, it has a higher SNR than the darker areas.
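Rajiv's deliberate-overexposure trick resembles a classic exposure-fusion rule: trust the brighter frame wherever it didn't clip, and fall back to the normally exposed frame in the highlights. Light didn't describe its actual merge, so the hard switch below is a hypothetical simplification (real pipelines usually blend with smooth weights). Values are linear and normalized so 1.0 is full well, and both frames are assumed to carry the same absolute noise.

```python
import numpy as np


def merge_exposures(normal, bright, ratio, clip=1.0, margin=0.95):
    """Merge a normal frame with one given `ratio` times more exposure.

    Unclipped pixels come from the bright frame, scaled back into the normal
    frame's units; they carry more signal relative to noise in the shadows.
    Pixels at or near clipping fall back to the normal frame.
    """
    usable = bright < margin * clip
    return np.where(usable, bright / ratio, normal)


rng = np.random.default_rng(0)
scene = np.linspace(0.0, 0.9, 1000)   # linear scene radiance
read_noise = 0.01                      # same absolute noise per frame (assumption)
normal = scene + rng.normal(0, read_noise, scene.shape)
bright = np.minimum(scene * 4 + rng.normal(0, read_noise, scene.shape), 1.0)
merged = merge_exposures(normal, bright, ratio=4)

shadows = scene < 0.2
# Shadow noise drops roughly 4x, because the bright frame's noise is divided by 4.
print(np.std(normal[shadows] - scene[shadows]), np.std(merged[shadows] - scene[shadows]))
```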

DE: Got it, got it.

RL: So we do better than ten times, right, if we collect ten times the energy.

DE: Yeah, so it's sort of like single shot HDR almost, in terms of expanding the dynamic range, and therefore you have much less noise in the shadows because those areas get more exposure. You're taking them from a sensor that got more exposure.

RL: Exactly, right.

DE: Wow. So what does that net out to? I mean, you know, our readers are going to say, 'Okay, how many stops improvement, or something?' Can you reduce it to any kind of a simplistic number like that?

RL: About four stops.

DE: Wow. So this really is dramatically better than what a cell phone's going to do!

RL: Absolutely. I mean, that was the whole vision behind the company.

DE: Yeah. I guess I was very skeptical; when I hear you saying DSLR quality, I'm thinking, "Oh yeah, right, okay." But with the different exposures, I could see that you'd certainly have a very low noise level. Now when you get into a low light situation though, it's a matter of just purely how much light you can collect, and so I would think at that point, I guess you still have ten different looks at the area that you're capturing.

RL: That's right, so ten times the energy. But we do better than that there too. Because not all of our sensors have RGB Bayer patterns on them.

DE: Oh really? Oh, that's also very interesting.

RL: Only luminance information collects a lot more energy over a given exposure time, versus RGB patterns.

DE: Yeah. So one or more of the modules could be unfiltered, so it's not losing any light to the filtration.

RL: That's right. So low light performance goes up because of that. Because you don't need chrominance information from all of the sensors.

DE: Yeah. So if you were just taking multiple images covering the same area, if all the pixels were the same size, and the noise was Gaussian, the noise would drop by a factor of the square root of the number of images. But then I guess at low light you're going to be exposing for the maximum that you can anyway. You want the most sensitivity anyway, probably, but you've got these other modules that don't have the Bayer pattern on them. How much light is typically lost in the Bayer pattern overall? It's got to be substantial.

RL: The red filter accepts about one-third of the spectrum, and so do the blue and the green.

DE: Yeah. So you're basically throwing away two-thirds of the light on average, across the picture. Although luminance follows the green more so, but really you're ultimately...

RL: From an energy point of view, you're throwing most of that away, right.

DE: Yeah, so basically if each pixel is only seeing a third of the spectrum, then you're throwing away two-thirds of the energy overall. Okay. So we're picking up a factor of three there. That's more than a stop that you're gaining, a stop and a half or whatever [Ed. Note: Numerically, a factor of 3 increase in light is a gain of 1.58 stops], just by not having filtration on that one sensor. So does that roughly four stop figure of merit extend to the low light case as well, compared to the basic sensors?
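The Ed. Note's 1.58-stop figure is just log2(3). The sketch below also shows how unfiltered modules would compound with the ten-module capture. The split between Bayer and monochrome modules is a hypothetical parameter, since Light didn't say how many of its sensors omit the color filter, and the factor of 3 is the same idealized one-third-of-the-spectrum assumption discussed above.

```python
import math


def stops(light_ratio):
    """Convert a ratio of collected light into photographic stops."""
    return math.log2(light_ratio)


def relative_light(n_bayer, n_mono, mono_gain=3.0):
    """Light collected by a module mix, in units of one Bayer module.

    Assumes every module sees the same scene with equal exposure, and that an
    unfiltered sensor gathers `mono_gain` times what a Bayer sensor does.
    """
    return n_bayer + n_mono * mono_gain


print(round(stops(3), 2))                      # 1.58: one unfiltered vs. one Bayer sensor
print(round(stops(relative_light(10, 0)), 2))  # 3.32: ten Bayer modules
print(round(stops(relative_light(8, 2)), 2))   # 3.81: hypothetical 8 Bayer + 2 mono
```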

RL: Yeah, four to five stops for everything, basically.

DE: Wow! I guess my next question is, when can we get our hands on one? [laughs] This is very, very interesting.

DG: We certainly expect that you'll see products in the market next year with this technology in them.

DE: At some time during calendar year 2016?

DG: That's correct, yeah.

DE: So you've got these multiple view angles, and to some extent, there's some similarity with what Lytro is doing, I guess purely from the standpoint that you have multiple perspectives. How is what you're doing similar to Lytro, and how is it different? I understand that you have multiple sensors, and you've got more sensitivity, but when it comes to post-capture focusing and that kind of thing, or calculated bokeh, how does your technology compare to what Lytro is doing?

RL: Let me put it this way. We can do everything that Lytro does. We don't talk about it because we don't want our mission to be confused with Lytro's mission. We believe that nobody wakes up in the morning trying to refocus their pictures, but you may wake up in the morning worrying about how you're going to carry your equipment with you.

DE: Right, right.

RL: So our mission is making it compatible with a mobile device, but we can do everything that Lytro can do. We just do it differently. Lytro does the same thing, they capture different perspectives, so to speak, by using a microlens array -- which actually increases the size of the sensor, if you think about it. Because they need many, many pixels to capture one pixel [of output] resolution, so for any given resolution they'll end up with a huge sensor.

DE: Oh yeah, exactly.

RL: You end up with a huge sensor, so the size of the optics is usually proportional to the size of the sensor. So we can do everything they do, but the way they do it will result in a bigger camera for a given quality. The way we do it results in a much smaller camera for a given quality.

DE: Right. You also can have much more parallax; there's a much greater spread between your individual camera modules than just the width of their sensor.

RL: That is correct. Our synthetic aperture is a lot larger.

DE: Synthetic aperture, thank you, that's the word. It also occurs to me that you have this large synthetic aperture, you have a multiple stereo vision system. Presumably, that can be used to help with autofocus as well?

RL: Absolutely. So the way we autofocus is by doing an exact depth estimate of where you want to go. It's a one-step autofocus. We know the depth, you focus.
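Depth from multiple viewpoints usually comes down to triangulation: for two rectified views a baseline B apart, a point that shifts by d pixels between them lies at depth Z = f·B/d, with f expressed in pixels. Rajiv didn't detail Light's depth estimator, so this is the textbook two-view case with made-up numbers, not Light's algorithm. It also shows why a larger synthetic aperture helps: the depth uncertainty caused by a given disparity error shrinks as the baseline grows.

```python
def depth_from_disparity(focal_px, baseline_mm, disparity_px):
    """Two-view triangulation for rectified cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means infinitely far")
    return focal_px * baseline_mm / disparity_px


def depth_uncertainty(focal_px, baseline_mm, depth_mm, disparity_error_px):
    """First-order depth error for a given disparity error: dZ = Z^2 * dd / (f * B)."""
    return depth_mm ** 2 * disparity_error_px / (focal_px * baseline_mm)


# Hypothetical module: 3000 px focal length, modules 20 mm apart.
z = depth_from_disparity(3000, 20, 30)
print(z)  # 2000.0 mm, i.e. 2 m
print(depth_uncertainty(3000, 20, z, 0.25), depth_uncertainty(3000, 40, z, 0.25))
```

Once a region's depth is known, choosing the focus is a single lookup rather than a contrast-detect search, which is the "one-step" behavior Rajiv describes.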

DE: Right. And so you can also refocus post-capture, I guess. The individual camera modules, are they fixed focus, or are they variable focus?

RL: No, they're fixed-focus, but the individual camera modules are small, so they produce very large depth of field images. You can focus anywhere within that depth of field, right? Basically anywhere within that.

DE: Got it. So ultimately, the problem is more how to produce out of focus and shallow depth of field, than it is getting enough depth of field.

RL: That's right. To get large depth of field is easy. To get shallow depth of field is harder. [laughs]
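The depth-of-field advantage of tiny modules falls out of the standard hyperfocal-distance formula, H = f²/(N·c) + f: focus at H and everything from about H/2 to infinity is acceptably sharp. The numbers below are illustrative assumptions, not Light's specifications: a phone-class module with a 4mm actual focal length at f/2.4 and a 0.003mm circle of confusion, versus a full-frame 35mm lens at the same f-number with the conventional 0.03mm circle of confusion.

```python
def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance: H = f^2 / (N * c) + f, all lengths in mm."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


phone = hyperfocal_mm(4.0, 2.4, 0.003)       # assumed phone-class module
full_frame = hyperfocal_mm(35.0, 2.4, 0.03)  # full-frame 35mm lens at f/2.4
print(round(phone / 1000, 2), round(full_frame / 1000, 2))  # 2.23 17.05 (metres)
```

Under these assumptions the small module is sharp from roughly 1.1 m to infinity when focused at about 2.2 m, while the full-frame lens must be focused at about 17 m for the same result. That is why, for Light, shallow depth of field has to be synthesized rather than captured.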

DE: Yeah. Okay, so you can refocus, you can choose just how much depth of field you want or don't want.

RL: And what bokeh you want.

DE: And what bokeh, you can choose. So in your processing, you can kind of dial in your own kernel for what the bokeh should look like?

RL: Absolutely, yes. We can produce a Gaussian blur.

DE: Oh, that's very nice, because even large aperture lenses, unless you have an apodization filter...

RL: The fixed bokeh, right?

DE: Yeah, and even with an apodization filter, you're limited to whatever it might be; maybe four stops or so brightness for a soft edge. So strong highlights still have hard edges on them.

RL: That's correct. So we can produce something that has no hard edges whatsoever. It's a very gentle Gaussian blur.

(Image by Paul Harcourt Davies, see his article about bokeh for more on the topic.)
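The difference Rajiv describes shows up directly in the blur kernel. An optical lens renders an out-of-focus highlight in roughly the shape of its aperture, a disc with a hard rim, while a computational renderer can convolve out-of-focus regions with whatever kernel it likes. The sketch compares the two kernels by their steepest step between neighboring weights; the kernel sizes are arbitrary choices, and how Light actually applies depth-dependent blur wasn't described.

```python
import numpy as np


def disc_kernel(radius, half_size):
    """Uniform disc, approximating an ideal circular aperture's defocus blur."""
    y, x = np.mgrid[-half_size:half_size + 1, -half_size:half_size + 1]
    k = (x ** 2 + y ** 2 <= radius ** 2).astype(float)
    return k / k.sum()


def gaussian_kernel(sigma, half_size):
    """Normalized 2-D Gaussian: no hard edge anywhere."""
    y, x = np.mgrid[-half_size:half_size + 1, -half_size:half_size + 1]
    k = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def max_step(kernel):
    """Largest jump between horizontal neighbors, relative to the peak weight."""
    return np.abs(np.diff(kernel, axis=1)).max() / kernel.max()


print(max_step(disc_kernel(6, 9)))      # 1.0: a full drop-off at the rim
print(max_step(gaussian_kernel(3, 9)))  # about 0.2: a gentle roll-off
```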

DE: Wow, people will love that! So it sounds like so far you're kind of describing the perfect camera, except I guess that if you want to go longer than 150mm or something, you can't, or wider than 35mm.

RL: That's not a limitation of the technology. That's just what, you know...

DE: Oh yeah, that's just where you are right now. I just meant the current array. I mean, obviously if you wanted, you could put an 18mm, or 12mm or something in there, or a 300mm.

RL: On our roadmap, we want to build a 600mm lens the size of your iPad.

DE: That would be intriguing! [laughs] What about image stabilization? I was wondering if the mirrors helped with that, but it sounds like their total deflection... Well, but they can deflect up to half the field of view; for example, with a 70mm, they can deflect by essentially half of the frame width, to be able to get to the corners of the 35mm field of view. Can you use the mirrors for image stabilization?

RL: We could, but we're not using it, because to use it for IS, you'd need to have two degrees of freedom in the way you move the mirror. We only move it around one axis.

DE: Oh, I see. So basically the 70mm lenses, when they're covering the 35mm field of view, they basically are just moving on a diagonal.

RL: And the 150mm [lenses], when they're covering a 70mm field of view, are also moving along just a diagonal.

DE: Yeah, I see, okay. That's interesting, got it.

RL: So our image stabilization story is completely unique and different, but we will get back with you in a month or two.

DE: Oh, okay. You're waiting for the patents to be issued or whatever, or to be filed. [laughs]

RL: Yes.

DE: Got it. We'll be very interested in hearing back, because I think IS is one of the technologies that has some of the strongest end-user benefit. So it sounds like we're sort of describing these ideal cameras now. What are the limitations? What are the trade-offs? Presumably there's going to be some need for full-frame DSLR still.

RL: Well, the die-hards will use it, and they'll be the last ones to switch. But yeah, in ten years' time, I can tell you, there will be no more full-frame DSLRs. Every camera will be built like this. There's no need to build them like that anymore.

DE: Ah, interesting. That'll make a good quote; I think I'll use it for our headline: "No More DSLRs in 10 Years, says Rajiv Laroia".

RL: Yeah, I'm ready to stand behind that quote. I'll keep it. [laughs]

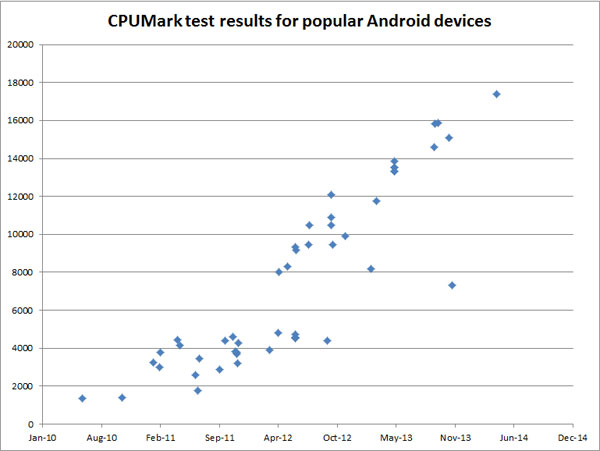

DE: Wow. So we're doing a lot of image processing here. How much processing power does it take, relative to what's available in typical cell phones? And I don't know, do cell phones have separate image processing sub-processors, or what's it take to stitch all this together?

RL: Cell phones actually have incredibly powerful processors right now. The problem with cell phones is not the availability of MIPS [Ed. Note: millions of instructions per second], it's right now more along the lines of MIPS per watt.

DE: Yeah, I was going to say power.

RL: It's much more about power than MIPS. But power efficiency will become more and more available in the future, and some of our algorithms will also consume much less power once they go into hardware acceleration mode. Rather than being implemented on a general-purpose processor, once the algorithms are implemented directly in hardware, they consume far less power. So if you put in hardware accelerators, the power consumption will be significantly less. MIPS is not that much of an issue. It's more...

DE: Yeah, it's power. It basically comes down to battery life.

RL: Exactly.

DE: And of course, this only needs to burn all that power while it's actually processing an image. I mean, the viewfinder function isn't going to use any more power than a viewfinder function on a current camera phone.

RL: Absolutely right.

DE: So with the type of processors that are available, how long does it take to crunch the data, to produce the image? I know there's a wide range of processors out there, but say the iPhone 6 as an example.

RL: Well, let me put it this way: There's different kinds of processing you can do. Some of the more heavy-duty processing that changes depth of field, especially shallow depth of field, you might want to plug the device in, or do it on a desktop. The pictures get automatically uploaded in this case. [Ed. Note: It sounds like part of their offering will be a cloud service for processing images generated by cameras in their manufacturing partners' devices.] But a lot of the processing, if you don't want to change depth of field and stuff, can be done on the device. And it really depends on what device. The modern cell phone has an incredibly powerful processor, let's put it this way.

DE: Got it.

RL: If you've seen Qualcomm's Snapdragon processor, it has an incredible number of MIPS available to you. As I said, the only caution there is, it's MIPS per watt that's important.

DE: Right. You can have as many MIPS as you want, but the phone might melt, and your battery life will be ten minutes.

RL: So those are more important issues today than even the availability of MIPS. Once the algorithms are in hardware, those become much less of an issue.

DE: Got it. And the file format, what are the files like? How big are the files that you need to store in order to extract that resolution and have the capability to refocus, et cetera?

RL: Well, if you want the max resolution, of course then you've got to be generating ten images, right?

DE: Oh, so it's really like 130 megapixels of data, basically.

RL: Well, no, it's not. Yes and no. That's raw data, but since a lot of these pictures are just slightly different perspectives, they are very easily compressible. You can do lots of compression on them because they're very heavily correlated.

DE: Right. It's a differential encoding, essentially.

RL: Exactly, yes. Or you could code it similar to the way MPEG codes the motion compensation, except in this case it's not motion compensation, it's perspective compensation.

DE: Yeah, exactly. So you have the base image, and then you're basically just doing deltas off of it.

RL: Exactly.
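Here is a toy version of that idea in Python. It assumes the simplest possible case: the second view differs from the base view only by a single horizontal shift (a disparity) plus a little sensor noise. Real perspective differences vary with subject distance across the frame, so a practical codec would presumably estimate shifts block by block, much as MPEG does for motion. The synthetic images, the 8-pixel search range, and the use of zlib as the compressor are all illustrative choices, not Light's actual file format.

```python
import random
import zlib

WIDTH, HEIGHT = 256, 64   # tiny synthetic "images"
MAX_SHIFT = 8             # assumed disparity search range, in pixels

def shift_row(row, d):
    """Shift a row right by d pixels (left if d < 0), repeating the edge pixel."""
    n = len(row)
    return [row[min(max(x - d, 0), n - 1)] for x in range(n)]

def make_views(seed=0, disparity=3):
    """Synthetic stand-ins for two modules: a textured base view, and a second
    view of the same scene offset by `disparity` pixels with +/-1 sensor noise."""
    rng = random.Random(seed)
    base = [[rng.randrange(256) for _ in range(WIDTH)] for _ in range(HEIGHT)]
    other = [[min(255, max(0, v + rng.choice((-1, 0, 1)))) for v in shift_row(row, disparity)]
             for row in base]
    return base, other

def sad(base, other, d):
    """Sum of absolute differences between `other` and `base` shifted by d."""
    return sum(abs(p - q) for rb, ro in zip(base, other)
               for p, q in zip(shift_row(rb, d), ro))

def estimate_shift(base, other):
    """Perspective compensation, simplified: the one shift that best predicts `other`."""
    return min(range(-MAX_SHIFT, MAX_SHIFT + 1), key=lambda d: sad(base, other, d))

def residual(base, other, d):
    """What's left to store: `other` minus the shifted base, wrapped into a byte."""
    return bytes((q - p) % 256 for rb, ro in zip(base, other)
                 for p, q in zip(shift_row(rb, d), ro))

base, other = make_views()
d = estimate_shift(base, other)
raw_size = len(zlib.compress(bytes(v for row in other for v in row), 9))
delta_size = len(zlib.compress(residual(base, other, d), 9))
print(f"shift {d} px; second view alone: {raw_size} bytes; residual: {delta_size} bytes")
```

On this synthetic data the search recovers the 3-pixel shift, and the residual compresses to a small fraction of the size of the second view on its own, because once the shift is removed nearly every residual value is 0 or ±1. The decoder simply re-applies the shift to the base image and adds the residual back. Random texture is a worst case for compressing a single view, so the gap here is larger than it would be with real photos.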

DE: Got it. Well I think I've covered all my questions, but this has been really, really fascinating. I think there's a lot of interest, and this kind of information is what we do, so I really appreciate the time you spent with us.

RL: We are definitely very excited about it!

DE: I have to say that we are too; very cool!

Editorial Musings:

OK, that sounds like pretty much the ideal camera, right? There's always a catch, though, and I can think of at least a couple of potential ones. Will they be significant ones? We'll have to see once testable samples are available, but here are some thoughts I have:

Lens Quality

First, what about lens quality? This was one thing that struck me, after editing and reviewing all of the above. Interchangeable-lens (ILC) bodies and premium pocket cameras generally come with pretty decent lenses on them, and even kit lenses on ILCs do surprisingly well when stopped down a bit. Can tiny lenses of the sort needed for the Light camera modules be made to similar quality standards? You can certainly correct for aberrations in firmware, but what about the basic optical quality?

Of course, one counterargument to the above is that Light only has to make fixed focal length prime lenses, which are a lot easier to build to a given standard of quality than zoom lenses. But then there's the question of how well they can interpolate and combine data to turn a combination of (for instance) 35 and 70mm lenses into an image with a 50mm equivalent field of view. While the system can produce 52 megapixel images at 35 and 70mm, it seems that in-between focal lengths would produce something less, and at 150mm, resolution is back down to 13 megapixels. Certainly, 13 good megapixels are more than enough for most consumer use cases, but that still leaves open the question of how good the basic optical quality is, and just how well the images can be aligned and subject data extracted from them.
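The simplest way to see why in-between focal lengths cost resolution is to look at the worst case: cropping the wider image down to the narrower field of view. Width and height each shrink by the ratio of the focal lengths, so pixel count shrinks by the square of that ratio. The Python sketch below treats crop-only as a lower bound; it's our assumption that Light's fusion would do at least this well, presumably better near the center of the frame, where the longer modules' data overlaps.

```python
def cropped_megapixels(source_mp, source_focal_mm, target_focal_mm):
    """Megapixels left after cropping a wider image to a narrower field of view.

    Width and height each shrink by source/target focal length, so the pixel
    count shrinks by the square of that ratio.
    """
    if target_focal_mm < source_focal_mm:
        raise ValueError("cropping can only narrow the field of view")
    return source_mp * (source_focal_mm / target_focal_mm) ** 2

# 52 MP at 35mm-equivalent, cropped to a 50mm-equivalent field of view:
print(f"{cropped_megapixels(52, 35, 50):.1f} MP (crop-only lower bound)")
```

That works out to about 25.5 megapixels, roughly half of the 52 available at the native 35mm setting, which is why the quality of the interpolation between modules matters so much.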

Also, when lining up the images to extract the image information, there's bound to be sub-pixel variation that can't be compensated for entirely in the firmware. (I'd think especially in the case of small rotations.) These considerations make me wonder if many small lens/sensor assemblies can really deliver better optical quality than one large one.
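Rotation is a good example because its error grows toward the edges of the frame. For a rotation by θ about the image center, a pixel at distance r from the center moves along an arc whose chord is 2r·sin(θ/2). The Python sketch below assumes a 4208 × 3120 sensor, a common 13-megapixel layout that we're using purely for illustration; we don't know the actual dimensions of Light's modules.

```python
import math

def corner_shift_pixels(width_px, height_px, rotation_deg):
    """Distance a corner pixel moves when the frame rotates about its center."""
    radius = math.hypot(width_px / 2, height_px / 2)  # center-to-corner distance
    theta = math.radians(rotation_deg)
    return 2 * radius * math.sin(theta / 2)          # chord of the swept arc

# Hypothetical 13 MP module (4208 x 3120), misaligned by tiny rotations:
for degrees in (0.005, 0.01, 0.05):
    print(f"{degrees:.3f} deg -> {corner_shift_pixels(4208, 3120, degrees):.2f} px at the corners")
```

Even a hundredth of a degree moves the corners by almost half a pixel while leaving the center untouched, so no single shift can line two such images up everywhere at once.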

Depth of Field

Then there's the matter of the lenses being hyperfocal, meaning that everything from some fairly close distance to infinity is "in focus." In the computer rendering above, the closest-focus distance is given as 40 centimeters for the 70mm-equivalent lenses and 10 centimeters for the 35mm ones. Assuming that's correct, I'm a little concerned about just how much resolution is actually available.

I was going to include a rather lengthy discussion of depth of field here, with calculations to determine just how much blur Light had to be allowing for, if the 40cm minimum-focus distance mentioned above was accurate. In the end, I decided that (a) they may be using a smaller image sensor than current top-line models like the iPhone 6/6 Plus, (b) they are likely doing some image processing to compensate for out-of-focus blur at both the near and far ends of the lens' range, and (c) I'd have to make too many assumptions about the details of sensor size and possible processing to come to any firm conclusion. If they're using a significantly smaller sensor, it's going to have a pretty small pixel pitch, and that's going to mean a good bit higher noise at the sensor level. So the four stops of promised noise improvement might be built on a lower starting point than some phones currently on the market.
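For readers who want to plug in their own assumptions, the standard hyperfocal relations are enough to get started. With hyperfocal distance H = f²/(N·c) + f, a lens focused at H keeps everything from H/2 to infinity acceptably sharp, so a stated near limit implies H, and from H you can back out the blur circle c the design has to tolerate. Dividing c by the pixel pitch expresses that blur in pixels. The Python sketch below is only the arithmetic: it assumes the lenses are focused exactly at their hyperfocal distance, and the focal length, f-number, and pixel pitch in the example call are placeholders, not Light's specifications.

```python
def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance H = f^2 / (N * c) + f, all lengths in millimeters."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def implied_coc_mm(focal_mm, f_number, near_limit_mm):
    """Blur circle implied by a near limit, assuming focus at the hyperfocal distance.

    Focused at H, the near limit of acceptable sharpness is exactly H / 2,
    so H = 2 * near_limit; solve the hyperfocal formula for c.
    """
    h = 2 * near_limit_mm
    return focal_mm ** 2 / (f_number * (h - focal_mm))

def blur_in_pixels(coc_mm, pixel_pitch_um):
    """Express a blur circle diameter as a number of pixels."""
    return coc_mm * 1000 / pixel_pitch_um

# PLACEHOLDER lens and sensor values for illustration only, not Light's specs:
c = implied_coc_mm(focal_mm=4.0, f_number=2.4, near_limit_mm=400.0)
print(f"implied blur circle: {c * 1000:.1f} um, or {blur_in_pixels(c, 1.1):.1f} px")
```

As noted above, the answer swings widely with the unknown inputs, and any deblurring Light applies would change the picture further, so the sketch shows the method rather than a verdict.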

Autofocus

The Light technology is capable of producing more information about subject distance than perhaps anything to date, but we've been finding as part of a recent project that even basic AF tracking isn't nearly as straightforward as you might think, with higher-end DSLRs still maintaining an edge over even recent mirrorless offerings, despite all the advancements the latter have seen in recent years. (We think it'll be a little while yet before the newcomers can best cameras like the Canon 1D X and Nikon D4s for sports shooting.) Just having distance information available doesn't guarantee that you'll be able to track subjects quickly and effectively with it.

Ultimately, we'll just have to wait for a shootable sample to arrive at any firm conclusions. While I do have some misgivings about just how good Light's cameras will be, I do expect that they'll in fact provide a dramatic step beyond anything we've seen to date, particularly with this level of focal length flexibility.

Bottom line, there's always a lot of distance between a concept and the ultimate products, with a lot of pitfalls along the way, but the concepts here all seem very sound, and eminently achievable with current technology. And the Light team has an incredible pedigree of very successful tech startups that brought revolutionary technology to market. I don't think I quite agree with Rajiv that there won't be any full-frame DSLRs in 10 years' time, but it seems clear that Light's technology could revolutionize smartphone photography, and further squeeze traditional cameras in the process.

Stay tuned for further details as they become available; we're really looking forward to getting a camera based on Light's tech into the lab and out in the field!