Patent roundup: a conversation with Dave Etchells about recent sensor innovations

posted Sunday, December 11, 2016 at 6:00 AM EST

Over the past month, Canon, Nikon and Tamron have all announced patents with potentially profound implications for digital photography. We asked Imaging Resource founder and Editor-in-chief Dave Etchells to walk us through some of the key concepts involved and share some of his thoughts on their import.

Please note that our analysis of the patent documents relies heavily on text machine-translated from Japanese to English.

1. Nikon patent 2016-192645, "Focus adjusting device and imaging device"

AA: Earlier in November, Nikon's patent application was picked up on NikonRumors, and reported as "a two-layers sensor with phase detection AF in one layer and contrast based AF in the other layer". What do you see as noteworthy in this patent?

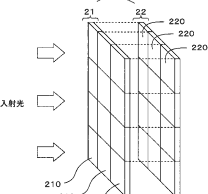

DE: Actually, the first thing I noticed was that it's not showing a combination of phase-detect and contrast-detect layers, but rather two phase-detect layers, one over the other. And what they're doing is very clever; it depends on a translucent organic sensor layer. The pixels are split in half, as is the case with Canon's Dual-Pixel AF technology, but here there are two layers like that. On one layer they're split in half horizontally, on the other they're split in half vertically. Even without reading the text, the illustrations immediately imply two phase-detect layers. Even more interesting, and particularly clever, the organic layer is going to be semi-transparent: it passes some wavelengths of light through to the silicon sensor below it.

AA: Is that similar to how Foveon works, passing light to different layers beneath?

DE: Somewhat, but Foveon was using an inherent characteristic of silicon: different wavelengths of light penetrate it to different depths. Blue light (short wavelengths) is absorbed very quickly and dumps most of its energy close to the surface. Green penetrates a bit further, and red goes the furthest of all. So Foveon, within the silicon, has three different layers in each pixel. Having the three colors all aligned gives them higher resolution than conventional striped sensors, but Foveon has problems with noise, and thus limited high-ISO performance. The top part of a Foveon sensor absorbs a lot of blue, but also some green and some red. In the next layer down, the blue is largely gone, but green and red are still passing through it, so it's going to absorb quite a bit of green but also a modest amount of red. It takes a lot of processing to untangle all that and extract the pure colors. In the process, you're subtracting noisy data sets from each other, so the signal-to-noise ratio gets worse.
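To see why subtracting layers costs signal-to-noise, here's a minimal sketch that assumes pure photon shot noise (Poisson statistics, so noise is the square root of the electron count) and uses made-up electron counts. It ignores read noise and the actual Foveon color math, which is considerably more involved; it only illustrates that noise variances add even when the signals subtract.

```python
import math

def direct_snr(signal_e):
    """SNR of a signal measured on its own, assuming shot noise only."""
    return signal_e / math.sqrt(signal_e)

def subtracted_snr(signal_e, contamination_e):
    """SNR when the wanted signal has to be recovered as A - B.

    Layer A records signal + contamination; layer B records the same
    contamination on its own. Subtracting removes the contamination from
    the mean, but the shot-noise variances of A and B add.
    """
    a = signal_e + contamination_e
    b = contamination_e
    noise = math.sqrt(a + b)  # var(A - B) = var(A) + var(B)
    return (a - b) / noise

# Hypothetical numbers: 400 e- of the wanted color, 600 e- of other colors.
print(direct_snr(400))           # 20.0
print(subtracted_snr(400, 600))  # 10.0
```

With these illustrative numbers, having to subtract out 600 electrons of unwanted color halves the SNR of a 400-electron signal.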

[For the Nikon patent] The interesting and clever thing in what Nikon's doing is that if you look at the color labels on the left-hand grid, the pixels say 'Magenta', 'Yellow', 'Cyan' and 'Magenta'. Those are the complementary colors to Red, Green and Blue. Magenta is basically negative-Green. Magenta light is composed of Red and Blue. So the top layer, the organic layer, is going to absorb Red and Blue in that pixel, and then what passes through is just the conventional Green signal. So rather than having a green filter and just throwing away the red and blue light, they've got a sensor on top which is gobbling up and recording the red and blue, and then passing on the green to the layer below. So they're making use of much more of the color spectrum than a conventional RGB-striped sensor would.
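A toy model of the layered pixel, assuming idealized filters: each organic-layer pixel absorbs exactly the two primaries that make up its complementary color and passes the third to the silicon below, while a conventional Bayer pixel keeps only one primary. Light is represented as equal parts R, G and B; real dyes overlap spectrally and won't be this clean.

```python
# Idealized complementary colors: which primaries each one is made of.
COMPLEMENTS = {
    "Magenta": {"R", "B"},  # passes G to the silicon
    "Yellow": {"R", "G"},   # passes B
    "Cyan": {"G", "B"},     # passes R
}

def layered_pixel(label, light):
    """Split incoming light between the organic top layer and the silicon below.

    `light` maps primaries ("R", "G", "B") to intensity.
    Returns (top_layer_signal, bottom_layer_signal).
    """
    top = sum(v for c, v in light.items() if c in COMPLEMENTS[label])
    bottom = sum(v for c, v in light.items() if c not in COMPLEMENTS[label])
    return top, bottom

def bayer_pixel(color, light):
    """A conventional color-filtered pixel records only one primary."""
    return light[color]

white = {"R": 1.0, "G": 1.0, "B": 1.0}
total = sum(white.values())
top, bottom = layered_pixel("Magenta", white)
print(top, bottom)                      # 2.0 1.0
print((top + bottom) / total)           # 1.0: all the light is recorded
print(bayer_pixel("G", white) / total)  # one third: the rest is filtered away
```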

AA: So what are the potential advantages of this layout?

DE: The main advantage is on-chip phase detection in which every phase-detect element is a cross-type sensor, rather than only horizontal or vertical. That means you would be able to have phase-detect autofocus in live view on SLR cameras.

(It's a bit beyond the scope of this article, but if you'd like to review how phase detection works: here's a great article on the subject.)

Also, you would automatically get really great greyscale images, because you are in fact capturing all the light at each pixel. Assuming that the two sensor layers had equal sensitivity and equal photon efficiency (an admittedly big assumption), if you add together the signals from the Green and Magenta, you've got white. Add together Blue and Yellow and you've got white. In a conventional sensor you have to demosaic the image and then convert it to black and white; here you've got raw luminance data from each pixel.
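Under the same idealized filters, the luminance at each pixel is simply the sum of the two layers. The sketch below adds hypothetical `top_eff` and `bottom_eff` efficiency factors to show why equal efficiency is the big assumption: if the organic layer converts light less efficiently than the silicon, the naive sum varies with the pixel's filter color and leaves a pattern in the greyscale image, while dividing each layer by its (assumed known, e.g. calibrated) efficiency before adding recovers the true total.

```python
# Primary that each organic-layer color passes down to the silicon (idealized).
PASSES = {"Magenta": "G", "Yellow": "B", "Cyan": "R"}

def read_layers(label, light, top_eff=1.0, bottom_eff=1.0):
    """Signals from the organic (top) and silicon (bottom) layers of one pixel."""
    passed = PASSES[label]
    top = top_eff * sum(v for c, v in light.items() if c != passed)
    bottom = bottom_eff * light[passed]
    return top, bottom

def luminance(top, bottom, top_eff=1.0, bottom_eff=1.0):
    """Undo each layer's efficiency, then add: a complementary pair sums to white."""
    return top / top_eff + bottom / bottom_eff

reddish = {"R": 3.0, "G": 2.0, "B": 1.0}  # total light = 6.0
for label in PASSES:
    layers = read_layers(label, reddish, top_eff=0.5)  # hypothetical 50% top layer
    print(label, sum(layers), luminance(*layers, top_eff=0.5))
# Magenta 4.0 6.0
# Yellow 3.5 6.0
# Cyan 4.5 6.0
```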

2. Canon patent 2016-197663, "Patent of the curved sensor which suppresses the Canon vignette"

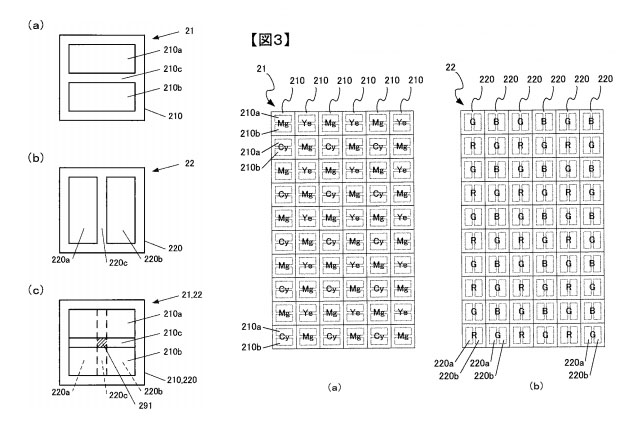

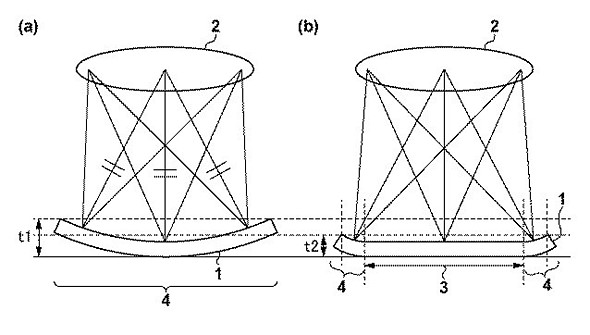

AA: Canon's first curved sensor announcement was reported back in October, with some truly sci-fi connotations, like a sensor that could curve dynamically at the time of image capture by using a magnet to stretch it.

DE: Yeah, I haven't read the patent that talks about using a magnet to deform the sensor's shape, so I don't really have an idea what they're getting at there. With this most recent patent (the illustrations above), it sounds like it's a way to kick up the edges a little bit, so that you don't get as much light falloff, but without significantly changing the distance to the lens, so the subject would still be in focus. It seems like it's purely a way to take care of the light falloff without really changing the lens design. A curved sensor could relax a lot of optical constraints by giving lens designers a break on curvature of field. The problem is, all current camera lenses are designed for flat sensors, so a curved sensor wouldn't work with them.

(Aha! - As I said that, I just realized the point of the magnetically-actuated curved sensor: It could lie flat for use with legacy lenses, but then be bent into a curved shape for use with new lenses that had been designed to take advantage of a curved sensor. Pretty cool idea!)

The reason that light falloff is such a big issue with silicon sensors is that they generally have significant three-dimensional structure. Back-side illumination helps a lot with that, because there isn't as much circuitry on the back side of the wafer to get in the way of the incoming light. If you look at a scanning electron micrograph of a conventional front-side-illuminated image sensor, the light-sensing surface is down at the bottom of a hole. With a structure like that, when you get to the edges of the image circle, you're no longer getting all the light down to the sensor, because oblique rays are shadowed. (This is also the big deal with telecentric lenses, or more specifically, ones with exit-pupil telecentricity: the light strikes the sensor surface straight-on, so there's no shadowing. This isn't easy to do, though; such lenses tend to be bigger and more expensive than more conventional designs.)
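The shadowing effect can be illustrated with a simple one-dimensional geometric model. This is a sketch under stated assumptions, not how any real sensor is characterized: a ray tilted from the surface normal drifts sideways by depth × tan(angle) on its way down the "hole", and anything that drifts past the far wall is blocked. The 3 µm depth and 2 µm opening are hypothetical, and the model ignores the microlenses real sensors use to steer light into the well.

```python
import math

def unshadowed_fraction(angle_deg, well_depth_um, opening_um):
    """Fraction of light entering a pixel's opening that reaches the floor.

    1-D geometric model: a ray tilted by `angle_deg` from the surface normal
    drifts sideways by depth * tan(angle) on its way down, and whatever
    drifts past the far wall is blocked. Ignores microlenses, diffraction,
    and reflections off the walls.
    """
    drift = well_depth_um * math.tan(math.radians(angle_deg))
    return max(0.0, 1.0 - drift / opening_um)

# Hypothetical front-side-illuminated pixel: 3 um deep well, 2 um opening.
for angle in (0, 10, 20, 30):
    print(f"{angle:>2} deg: {unshadowed_fraction(angle, 3.0, 2.0):.2f}")
# 0 deg: 1.00, 10 deg: 0.74, 20 deg: 0.45, 30 deg: 0.13
```

An image-side telecentric lens corresponds to keeping the angle near zero across the whole frame, which is why it avoids this loss.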

So what I think this latest curved-sensor patent might be doing is kicking up just the edges of the sensor, so you can avoid some of the worst of the shadowing, but without significantly changing the lens-sensor distance. (I do wonder if I've got the right interpretation here, though; it seems that enough angle to make a noticeable difference in shadowing would be changing the sensor-lens distance too much. Any readers with other ideas about what might be going on, please leave your thoughts in the comment section below!)
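A rough check of that doubt, under a purely hypothetical geometry: suppose only an outer band of the sensor is bent up as a flat ramp. Tilting a band of width w by angle α raises its outer edge by w·sin α. That can be compared with the common approximation that the total depth of focus at the sensor is about 2·N·c (f-number times circle of confusion). The band width, tilt angle, and circle of confusion below are illustrative choices, not figures from the patent.

```python
import math

def edge_lift_mm(band_width_mm, tilt_deg):
    """Height change at the outer edge of a flat band tilted by `tilt_deg`."""
    return band_width_mm * math.sin(math.radians(tilt_deg))

def depth_of_focus_mm(f_number, coc_mm):
    """Common approximation for total depth of focus at the sensor: 2 * N * c."""
    return 2.0 * f_number * coc_mm

# Illustrative: 3 mm edge band tilted 10 degrees, f/2 lens, 0.03 mm CoC (full frame).
lift = edge_lift_mm(3.0, 10.0)
dof = depth_of_focus_mm(2.0, 0.03)
print(f"edge lift {lift:.3f} mm vs depth of focus {dof:.3f} mm")
# edge lift 0.521 mm vs depth of focus 0.120 mm
```

On these assumptions, even a 10-degree kick over a few millimeters moves the edge several times farther than the depth of focus, which is consistent with the suspicion that a useful tilt would disturb focus.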

3. Tamron announcement: "Low gain-noise, wide dynamic-range CMOS image sensor"

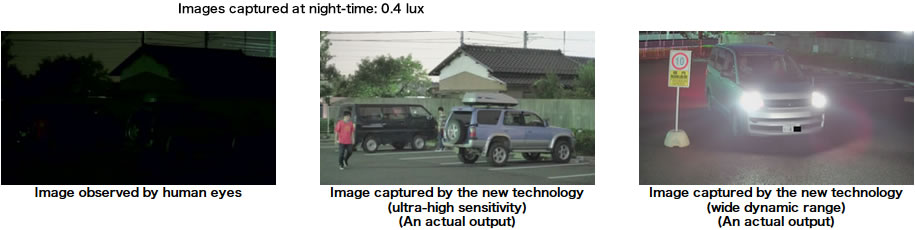

AA: Most recently, Tamron issued a press release announcing that it had developed a technology allowing images to be created "far exceeding that of human vision, with critical consistency established between ultra-high sensitivity and wide dynamic range." The announcement is light on details, but it does state that the sensor is receptive to "a range of brightness in excess of 140 dB (a lightness-and-darkness difference of 10 million times)".

DE: That 140 dB number was so astonishing that I thought it'd be interesting to take a look at just what the numbers mean, and see if I could relate them to the realities of current sensors. This is probably only for the uber-geeks, and I probably dropped a digit here or there (hoping the readers will correct me!), but here's what I came up with:

If I've got the right number of zeroes there, 140 dB is around 23 1/4 stops, a ratio of 10,000,000:1. The problem is, Tamron doesn't state what the two ends of the range are. The comparison images above are a bit unfair to the human eye, as a light level of 0.4 lux is about -2.6 EV, which Wikipedia tells us is on the order of the light from a full moon. (Perhaps they were assuming that the human seeing the image shown above left had just walked from bright sunlight into moonlight levels?)
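For readers who want to check these conversions, here's a short sketch. It assumes the amplitude-style convention image-sensor specs usually use (dB = 20·log10(ratio)), which is what makes 140 dB equal 10 million, and the common incident-light calibration of roughly 2.5 lux at EV 0 (ISO 100), so EV ≈ log2(lux / 2.5). Meter calibration constants vary somewhat, so treat the EV figure as approximate.

```python
import math

def db_to_ratio(db):
    """Sensor-style decibels (20 * log10) to a linear contrast ratio."""
    return 10 ** (db / 20)

def ratio_to_stops(ratio):
    """Each stop is a factor of two."""
    return math.log2(ratio)

def lux_to_ev100(lux, lux_at_ev0=2.5):
    """Approximate EV at ISO 100 for an incident illuminance in lux."""
    return math.log2(lux / lux_at_ev0)

ratio = db_to_ratio(140)
print(f"{ratio:,.0f}:1")                     # 10,000,000:1
print(f"{ratio_to_stops(ratio):.2f} stops")  # 23.25 stops
print(f"{lux_to_ev100(0.4):.1f} EV")         # -2.6 EV
```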

So what ISO level would the shot above correspond to? We can estimate the minimum shutter speed by noting that the image has a person walking in it, and they're captured without motion blur, at least at the very low resolution of the sample image.

EV 0 corresponds to an exposure of 1 second with an f/1.0 lens at ISO 100. So EV -3 (rounding the -2.6 EV figure to -3) would need an exposure of 8 seconds at f/1.0, or 32 seconds at f/2.0. Let's assume an f/2.0 lens, give them the benefit of the doubt, and say that they could get away with a 1/8 second exposure to capture a moving person at the resolution shown. What ISO would that be? We'd go from 32 seconds at ISO 100 to 1/8 second at ISO "X". That's a factor of 256, or 8 stops, so we'd need ISO 25,600. They don't tell us how big a sensor they're talking about, but that's well within the range of current technology, especially at resolutions as low as they've shown.
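The same chain of reasoning as two small functions, using the standard definition EV = log2(N²/t) at ISO 100 (N is the f-number, t the exposure time in seconds) and treating each doubling of ISO as one stop. The f/2.0 aperture and 1/8 second shutter speed are the assumptions made in the text, not figures from Tamron.

```python
import math

def exposure_time_s(ev100, f_number):
    """Exposure time (s) for a correct exposure at ISO 100: t = N^2 / 2^EV."""
    return f_number ** 2 / 2 ** ev100

def required_iso(ev100, f_number, shutter_s, base_iso=100):
    """ISO needed to shorten the ISO 100 exposure to `shutter_s` seconds."""
    return base_iso * exposure_time_s(ev100, f_number) / shutter_s

print(exposure_time_s(0, 1.0))   # 1.0  (EV 0: 1 s at f/1.0)
print(exposure_time_s(-3, 2.0))  # 32.0 (EV -3 at f/2.0, ISO 100)
iso = required_iso(-3, 2.0, 1 / 8)
print(iso, math.log2(iso / 100))  # 25600.0 8.0
```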

So at the low end of the brightness range, it seems we're at least within reach of conventional sensor technology; with that set, where does it put the high end?

Well, 23 stops up from EV -3 would be EV 20, which is about four stops brighter than direct sunlight on snow (around EV 16). Huh? That doesn't seem like it could be right (there's zero need for it), but then we have to consider what they might mean by "dynamic range". Technically, the "noise floor" of a system counts as the lower end of its dynamic range. So the low end of a sensor's dynamic range could be viewed as the darkest level at which the background noise is as "bright" as the image. Compared to the image shown above, that's probably a good 4 stops darker, possibly more.
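In sensor terms, that definition is usually written as the ratio of the largest signal a pixel can hold (its full-well capacity) to its noise floor (read noise, in electrons), the point where the signal-to-noise ratio drops to 1. A sketch with made-up electron counts rather than any real sensor's specifications:

```python
import math

def dynamic_range(full_well_e, noise_floor_e):
    """Dynamic range as (stops, dB) from full-well capacity and noise floor."""
    ratio = full_well_e / noise_floor_e
    return math.log2(ratio), 20 * math.log10(ratio)

# Hypothetical pixel: 50,000 e- full well, 3 e- read noise.
stops, db = dynamic_range(50_000, 3.0)
print(f"{stops:.1f} stops, {db:.1f} dB")  # 14.0 stops, 84.4 dB

# Noise floor that same hypothetical pixel would need to reach 140 dB (10^7:1):
print(50_000 / 10 ** (140 / 20))  # 0.005 (electrons)
```

Working backwards this way shows how far 140 dB is from a conventional linear pixel: the hypothetical 50,000-electron pixel would need a noise floor of a small fraction of a single electron.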

So it seems that they're saying that they have a sensor that can capture clear color video under full-moon light levels and go all the way to full, bright sunlight, with the same exposure settings. That is indeed something new, in that any existing camera sensors would either completely saturate at the bright end or be completely black at the dark end.

DxO measures the dynamic range of image sensors, and the highest-rated camera they've tested so far is the Nikon D810, with a dynamic range of 14.8 EV. So the Tamron sensor's claimed range is nearly 8.5 stops, or roughly 350x, greater!
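The comparison is plain stop arithmetic: convert the dB claim to stops, subtract the reference figure, then raise 2 to the difference to get the linear factor. Both inputs are the numbers quoted above; the 20·log10 dB convention is the same assumption used for the 140 dB conversion.

```python
import math

def dr_advantage(claimed_db, reference_stops):
    """Extra stops (and the equivalent linear factor) of a dB claim over a reference."""
    claimed_stops = math.log2(10 ** (claimed_db / 20))  # 20 * log10 convention
    extra = claimed_stops - reference_stops
    return extra, 2 ** extra

extra, factor = dr_advantage(140, 14.8)  # Tamron's claim vs. DxO's D810 figure
print(f"{extra:.2f} stops, about {factor:.0f}x")
```

With the quoted figures this comes out to about 8.45 stops, a factor of roughly 350.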

AA: Can we expect to see this technology rolling into consumer level cameras? Tamron has a big foothold in the security camera market, which seems to be where this technology makes the most sense.

DE: It's certainly very interesting. At a system level, this basically corresponds to having a 24-bit A/D converter measuring the sensor signals, vs the 10-16 bit ones we have today. You'd just set things up so the sensor would give a good exposure (that is, you'd fill the pixels) under daylight conditions, and just digitize it so finely that you could "see" all the way into -6 EV shadows.
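The bit-depth figure follows directly from the stop count: an ideal linear A/D converter with N bits spans a 2^N:1 range, so the minimum is ceil(log2(ratio)). The sketch also shows a known property of linear encoding: the brightest stop gets half of all the code values and the darkest stop gets just one, which is one reason practical designs would likely want headroom beyond this bare minimum.

```python
import math

def min_adc_bits(contrast_ratio):
    """Smallest ideal linear ADC bit depth whose code range spans `contrast_ratio`."""
    return math.ceil(math.log2(contrast_ratio))

def codes_per_stop(bits):
    """Code values available in each stop, brightest first, for an ideal linear ADC.

    The top stop gets half of all codes, the next a quarter, and so on,
    down to a single code for the darkest stop.
    """
    return [2 ** (bits - 1 - k) for k in range(bits)]

print(min_adc_bits(10 ** 7))    # 24 (the 140 dB claim)
print(min_adc_bits(2 ** 14.8))  # 15 (a 14.8-stop sensor)
print(codes_per_stop(24)[-6:])  # [32, 16, 8, 4, 2, 1]
```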

The catch is that you probably couldn't read the data off a chip like that very quickly, meaning that you'd be restricted to pretty low resolutions. It's also aimed at a very different use-case (security cameras) than we're interested in as photographers. We don't need to go from full sun to moonlight without changing our exposure settings; we just change the shutter speed, stop down, etc. What could be interesting though, is the extent to which higher-resolution A/D conversion could significantly increase dynamic range in photographic applications. It'd certainly be nice to just expose for the highlights, but be able to pull perfectly clean detail out of even very dark shadows. The question is, though, would we be happy with either a resolution of 1 megapixel or a "burst" rate of one shot per second? Or a 12 megapixel sensor that burned 5x the power? I think those areas are where the tradeoffs are, but I also think that we're going to see cameras with higher dynamic range in the future than we have now.
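The speed trade-off can be given rough numbers: raw readout bandwidth scales as pixels × bits per pixel × frames per second. The camera parameters below are hypothetical, and the model ignores real-world costs such as conversion time, settling, and I/O overhead (finer conversion also tends to take longer per sample), so it only shows how a fixed data budget is divided up, likely understating the real penalty.

```python
def readout_gbit_per_s(megapixels, bits, fps):
    """Raw sensor data rate in gigabits per second (ignores all overhead)."""
    return megapixels * 1e6 * bits * fps / 1e9

# Hypothetical camera: 24 MP, 14-bit, 10 frames per second.
budget = readout_gbit_per_s(24, 14, 10)
print(budget)  # 3.36

# Frame rate the same budget would allow at 24 bits per pixel (roughly 5.8 fps):
print(budget / readout_gbit_per_s(24, 24, 1))

# Or the resolution it would allow at 24 bits and 10 fps (14 MP):
print(24 * 14 / 24)
```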

Ultimately, it will come down to what the market wants. Currently, the market pressure is all toward speed: faster, longer bursts, faster and more sophisticated AF, etc. I expect we're going to see a lot more development along those lines before dynamic range becomes a big enough differentiator for manufacturers to focus on it.

AA: Thanks for your thoughts, Dave!

Update, 12/13/16, 13:16 - Um... Duh! Thanks to alert reader Chuck Westfall (yes, that Chuck Westfall ;-) for reminding me that f/2.0 is two stops, not one stop as I'd assumed in my original calculations. (One stop up would be f/1.4) With that error corrected, the equivalent ISO for the Tamron sensor would be 25,600, vs 12,800. Thanks, Chuck!