Nikon Q&A @ CP+ 2016: Autofocus mysteries, OPTIA lens design, and the new DL-series compacts

posted Monday, March 28, 2016 at 5:59 AM EST

Imaging Resource founder and publisher Dave Etchells was fortunate to be able to sit down recently with several senior executives from the Imaging Business Unit at Nikon Corp., which is responsible for producing the company's cameras and lenses. On hand to answer Dave's questions were Tetsuya Yamamoto, Corporate Vice President and Sector Manager, Development Sector; Hiroyuki Ikegami, Department Manager, Marketing Department, Marketing Sector; Naoki Kitaoka, Group Manager, Marketing Group 1, Marketing Department, Marketing Sector; and Masahiko Inoue, Group Manager, Marketing Group 2, Marketing Department, Marketing Sector.

Topics for discussion included the rationale behind the design of the phase-detection autofocus systems used in the company's DSLRs -- including the advances made in the recent Nikon D5 and D500 in this area -- as well as further insight into the company's OPTIA lens measurement and design system, and the company's new DL-series cameras, its first fixed-lens models based around the increasingly-popular 1-inch sensor size. Questions were primarily answered by Yamamoto-san, although with contributions from the other gentlemen at various points in the interview. Given the difficulty of noting the respondents with certainty while transcribing the interview, all answers have simply been attributed to Nikon throughout.

Dave Etchells/Imaging Resource: As is usually the case, the D5 and D500 share a common AF system. This gives almost complete frame coverage on the D500, but considerably less on the full-frame D5. I would think that pro shooters would greatly appreciate broader AF coverage, but no SLR manufacturer offers it. Is it simply a matter of economies of scale that result in shared AF systems like this, or are there other, more fundamental reasons for not having dedicated full-frame AF systems that cover more of the frame area?

Nikon: No, actually, it is not about scale or economy. We actually think that it is optimal for each camera. (Each means the Nikon D5 and D500.) First of all, optically, the lens mount is the same for both the D500 and D5, for the AF coverage area. So when we think about the maximum AF area, the mount structure actually determines the vertical and horizontal coverage of the AF system. In this situation, DX is using the center of the full mount, so that's why there's full coverage as you said. And that's why we provide the feature in the D5, where we can use the full AF area in a DX crop. Of course, the lens' field of view will vary, but that is for the users to choose and adjust.

DE: So the field of view of the lens will affect the angle of the incident rays on the sensor. Yes.

Nikon: But the AF coverage has been improved versus D4.

DE: What percentage larger, how much wider?

Nikon: Thirty percent.

DE: Oh. Yeah, big. That's a lot. I've not paid enough attention; I'm too busy flying to Japan and everything.

Nikon: Me too. <laughter>

[Ed. Note: Another "aha" moment for me in an interview. I knew that phase-detect AF required light rays striking the sensor from a minimum range of angles; that's why PDAF doesn't work at small lens apertures. I'd never connected the dots, though, to realize that the geometry of the lens mount itself would restrict the possible angles of incidence, as you get towards the edges of the frame. It makes complete sense, once you think about it.]

DE: Okay. So more on autofocus, about cross-type AF points. It seems that one of the big breakthroughs in the Multi-CAM 20k AF sensor is the huge increase in the number of cross-type AF points. 99 points are cross-type, up from only 15 in the Multi-CAM 3500. What has been the limitation in the past to offering more cross-type points, and how was it overcome in the new sensor?

Nikon: Well, the big, dramatic improvements this time are actually two points. One is that we changed the AF sensor -- it's new. And the other thing is that we have a DSP (digital signal processor), a new circuit, so that we can do high-speed processing after we increased the number of points.

DE: So the limitation in the total number of points was just DSP speed?

Nikon: Oh, and the older sensor too. Both.

DE: Yeah, so a new sensor and also... But you couldn't have used this sensor with so many points with the old DSP.

Nikon: We didn't even have a DSP in the past.

DE: Oh, really? Wow, so it was just a conventional CPU doing the AF before. That must be a huge boost in capability!

[Ed. Note: This was interesting; I'd never known what the hangup was to having more AF points. If I'd thought about it at all, I probably would have said that there was some limitation in fabricating the AF sensor. Lo and behold, it comes down to processing power. Depending on how many pixels are involved in each point, I can see that it could indeed take quite a bit of processing to run the correlation algorithm for each.]
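
To give a rough sense of the processing involved, here is a minimal Python sketch of a correlation step for a single AF point. It is an illustration under stated assumptions, not Nikon's algorithm: the function name `best_shift`, the sample values, and the use of mean absolute difference as the matching cost are all hypothetical. The idea is simply that each point compares two line-sensor signals and looks for the offset at which they agree best; that offset indicates how far, and in which direction, the image is out of focus.

```python
def best_shift(left, right, max_shift):
    """Return the integer shift of `right` relative to `left` that gives the
    lowest mean absolute difference over the overlapping samples.

    Hypothetical helper for illustration; real AF sensors and firmware differ.
    """
    n = len(left)
    if len(right) != n or max_shift >= n:
        raise ValueError("signals must be equal length and longer than max_shift")

    best, best_cost = 0, float("inf")
    for shift in range(-max_shift, max_shift + 1):
        total, count = 0.0, 0
        for i in range(n):
            j = i + shift
            if 0 <= j < n:  # only compare samples that overlap
                total += abs(left[i] - right[j])
                count += 1
        cost = total / count
        if cost < best_cost:
            best, best_cost = shift, cost
    return best


# Made-up signals: `right` is `left` displaced by three samples.
left = [0, 0, 1, 5, 9, 5, 1, 0, 0, 0, 0, 0]
right = [0, 0, 0, 0, 0, 1, 5, 9, 5, 1, 0, 0]
print(best_shift(left, right, max_shift=5))
```

The work grows with the number of samples per point multiplied by the number of candidate shifts, and the whole search is repeated for every AF point on every cycle, which is consistent with processing power becoming the bottleneck as the point count rises.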

Nikon: When you go back and think about the purpose of increasing the number of AF points, what we would be doing this for, it's actually because we want to capture moving subjects better with higher speed. But when it's done at higher speed, the amount of light gathered by the AF sensor becomes smaller, because it is operating faster. To offset that, we have a new mirror box structure. Also, the shutter has been improved as well so that we can collect light more within a shorter time frame.

DE: So the faster mirror effectively increases the exposure time of the AF sensor.

Nikon: Yes.

DE: Also, a new mirror box design lets you get more light, because you can have larger optics on the AF array.

[Ed. Note: Once again, very interesting. As users, we want viewfinder blackout to be as short as possible, to help track moving subjects, especially in continuous shooting mode. It turns out it's equally important for the camera's AF system, because the AF sensor only gets to "see" the subject when you do. (That is, when the mirror is fully in its down position.) The faster the mirror and the shorter the blackout time, the longer the AF sensor has to collect light, so the more sensitive it can be. The other aspect, that I only touched on in my comment above, is that the redesigned mirror box makes room for larger AF-sensor optics, again improving light collection.]

[Photo: Tetsuya Yamamoto, Corporate Vice President and Sector Manager, Development Sector, Imaging Business Unit, Nikon Corporation]

DE: Previously it seemed there was always a limitation in how many cross-type points. Was that limitation just another instance of a limitation in the total number of AF points you could handle? Or is there something about the cross-type points and the design of the sensor that made it difficult to have more cross-type?

Nikon: The total number of points would be the biggest reason. We also have to improve the autofocus algorithm as well because when you increase the total number of autofocus points, you increase the likelihood of erroneous focus readings as well. And that also needs to be adjusted.

DE: Ah, more points and then more likelihood of error. Yes.

Nikon: What the improved algorithm actually does is, it looks at not only one point or multiple points, but it looks at the whole area to adjust the center so that we have an accurate focus. We call this the auto-area. In other words, once you capture a subject which is moving, you continue to track it. It does not let go automatically.

DE: So with the very large number of points now, you create almost a depth map of the subject. Then you can find the outline of where the subject is.

Nikon: Yes. It's like a collective depth map.

DE: So, for every autofocus cycle, do you look at the depth map of the entire area?

[Photo: Hiroyuki Ikegami, Department Manager, Marketing Department, Marketing Sector, Imaging Business Unit, Nikon Corporation]

Nikon: Yes, you make one, and then you continue to revise it. So yes, it does look at it every time.

DE: The whole area every time. Wow.

Nikon: That is only achieved by having 153 points, the new DSP, and the algorithm together.

DE: Yes. A tremendous amount of processing, not only to create the depth map but then to do object recognition and boundary calculation on that.

Nikon: Yes.

[Ed. Note: OK, this is really fascinating! (I know, you're probably tired of me saying "this is interesting" every few sentences, but this time I mean it! ;-) First, the main reason for so few cross-type points in the past has simply been that manufacturers were limited in the total number of AF points the processors could handle. Given the choice between increasing the number of horizontal-only points -- which worked most of the time -- or making more cross-type points that would work all of the time (in terms of vertical vs horizontal subject detail), they generally opted to have more non-cross-type points, covering a larger area, or the same area with better lateral resolution. This makes sense to me. I'd always assumed that there was something special about the vertically-oriented AF points that made them more difficult to implement, but it turns out it was just a basic engineering trade-off decision of how to allocate the amount of AF processing available.

The second intriguing part here is just how sophisticated the new AF system is. With so many points, the system can basically create a "depth map" of the image at fairly high resolution (at least in terms of AF systems), letting it see the subject as a collection of objects at various distances, rather than just distance data for a few somewhat isolated points. This *should* greatly help AF subject tracking, because subjects will appear as blobs of points more or less at the same distance from the camera moving around the frame. Depending on just how fast the AF processor is and how good Nikon's algorithms are, they can identify the boundaries of objects, figure out where the approximate center or other point of most important distance information is located, and follow objects as they move around the frame, recognizing them as objects, rather than just a field of discrete points. Of course, the devil is in the details, and simply having the data available doesn't mean that the processor and AF algorithms are going to be able to track subjects reliably. On the other hand, Nikon has many years of experience with AF algorithms, so I'm very optimistic about what this will mean for the D5 and D500's AF tracking ability.]
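
The "blob of points at the same distance" idea can be sketched in a few lines of Python. This is only an illustration under assumptions that do not come from the interview: the AF points are treated as a regular grid, the distances are made-up values in metres, and the function names `subject_blob` and `centroid` are hypothetical. Starting from the point the photographer selected, the sketch collects neighbouring points that lie at roughly the same distance and then finds the centre of that group.

```python
from collections import deque


def subject_blob(depth, seed, tolerance):
    """Flood-fill from `seed` (row, col) across 4-connected neighbours whose
    distance is within `tolerance` of the seed's distance.

    Returns the set of (row, col) grid positions judged to be the subject.
    """
    rows, cols = len(depth), len(depth[0])
    target = depth[seed[0]][seed[1]]
    blob = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in blob
                    and abs(depth[nr][nc] - target) <= tolerance):
                blob.add((nr, nc))
                queue.append((nr, nc))
    return blob


def centroid(blob):
    """Mean (row, col) position of the points in the blob."""
    n = len(blob)
    return (sum(r for r, _ in blob) / n, sum(c for _, c in blob) / n)


# Hypothetical distances: a subject about 5 m away against a 20 m background.
depth = [
    [20.0, 20.0, 20.0, 20.0, 20.0],
    [20.0,  5.1,  5.0, 20.0, 20.0],
    [20.0,  5.0,  4.9, 20.0, 20.0],
    [20.0, 20.0, 20.0, 20.0, 20.0],
]
blob = subject_blob(depth, seed=(1, 1), tolerance=0.5)
print(sorted(blob), centroid(blob))
```

On the next AF cycle, a tracker built along these lines could re-seed the fill from the point nearest the previous centroid, which is one plausible way to keep hold of a subject as it moves across the frame.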

[Photo: Naoki Kitaoka, Group Manager, Marketing Group 1, Marketing Department, Marketing Sector, Imaging Business Unit, Nikon Corporation]

DE: Nikon has had fairly high resolution RGB sensors for exposure determination for a while now, and has used them to good advantage to help the AF system track subjects. [Ed. Note: They've used RGB exposure sensor data to help identify objects on the frame, helping to compensate for the relatively low "resolution" of the AF system's depth information.] Has the dramatic increase in AF points led to any significant changes in the interaction between the RGB and AF sensors? Or is it more an incremental refinement?

Nikon: The methodology is the same, but the performance interaction between the RGB and the AF sensors has improved dramatically. In order to analyze the AF, the sensor actually looks at various information including the color and face recognition, and comprehensively calculates everything.

DE: And so now that you have a higher resolution depth map, you can make a better match with the RGB information.

Nikon: Yes.

[Ed. Note: I mentioned above that the AF system could better identify the center of a subject, or "other point of most important distance information." This is what I was thinking of. The RGB exposure sensor has for some time been able to perform a certain level of face detection, but now that information is much refined, by its ability to associate a point cloud in the AF sensor's depth map with what the RGB sensor thinks is a face.]

DE: When I interviewed Gokyu-san and Yamamoto-san at CES, I realized when we were transcribing the interview that I was unclear on a couple of points about autofocus. We were talking about phase-detect versus contrast-detect in hybrid AF, and Gokyu-san said: "Regardless of which direction we take, we know that the key point which becomes important is the depth of focus. So we are working on both technologies, sensor phase detection and also the contrast autofocus plus alpha." So then, two questions. Did his reference to depth of focus refer to focus precision, or accuracy?

Of course now we have... Gokyu-san said something, we transcribed it, and then I describe it back to you, and then ask Yamamoto-san what Gokyu-san actually said. <laughter>

[Photo: Masahiko Inoue, Group Manager, Marketing Group 2, Marketing Department, Marketing Sector, Imaging Business Unit, Nikon Corporation]

Nikon: He did mention the depth, not accuracy.

DE: The depth of focus.

Nikon: But to acknowledge the depth, to recognize the depth.

DE: Okay. So maybe because we were talking about phase detect and contrast detect, and so he said the key point was recognizing the depth of focus.

Nikon: I think I said that.

DE: Oh, okay. My apologies.

Nikon: Maybe, because Gokyu-san would never say that, he's a marketing man.

DE: Oh, yes. I apologize. When our editors are transcribing, they can't always tell which person was speaking. Anyway, they just hear Miho-san (Nikon's excellent translator) speaking in English. Then the contrast autofocus plus alpha; I'm wondering if we misheard the word. Did you say alpha, or maybe it was some other word?

Nikon: I said that, yes.

DE: You said that. So contrast plus alpha; what does the alpha mean?

Nikon: In contrast AF, you don't know the direction.

DE: Ah! OK, so alpha refers to direction.

Nikon: Yes, it's a technique.

DE: Ah, that's good. I just didn't know what alpha referred to, so thank you!
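
The point that contrast AF "doesn't know the direction" can be illustrated with a minimal Python sketch of hill-climbing focus. Everything here is an assumption made for illustration: the `sharpness` curve, the lens positions, and the function `contrast_af` are hypothetical and do not describe any Nikon implementation or what the "plus alpha" technique actually is. A single contrast reading only says how sharp the image is now, so the routine has to spend an extra measurement probing one way before it can tell which way to drive the lens.

```python
def sharpness(position, best_focus=37):
    """Hypothetical contrast metric that peaks when the lens is at best_focus."""
    return 1.0 / (1.0 + (position - best_focus) ** 2)


def contrast_af(start, step=1, measure=sharpness):
    """Hill-climb to peak contrast.

    Returns (final_position, measurements_taken).
    """
    position = start
    current = measure(position)
    count = 1

    # One reading gives no direction, so probe a step forward and compare.
    probe = measure(position + step)
    count += 1
    if probe < current:
        direction = -1  # contrast fell: the peak lies the other way
    else:
        direction = 1
        position, current = position + step, probe

    # Keep stepping while contrast improves; stop once it no longer rises.
    while True:
        candidate = measure(position + direction * step)
        count += 1
        if candidate <= current:
            break
        position, current = position + direction * step, candidate
    return position, count


print(contrast_af(40))
print(contrast_af(30))
```

A phase-detect measurement, by contrast, yields a signed offset, so the direction is known from the first reading. Any extra information that supplies direction to a contrast-based system would remove the need for the probing step shown here.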

Moving on to lenses, last year we saw a flood of new Nikkor lens announcements. I counted no less than seven, but maybe I missed one or two. The previous two years have been relatively sparse. What accounted for the sudden increase of activity, with so many lenses announced so quickly after such a relatively slow period before? Did Nikon increase the number of lens designers, or did the OPTIA wavefront-based lens design system account for the difference?

Nikon: It's not like we had a special program to accelerate the launch last year, it's not like we introduced a specific structure, or a new system, or anything like that. We were just putting together a launch plan, just according to the market trends and customer needs, and we went along with them. The pace is determined by the market. Then when something dramatically improves, like performance or something like that, maybe a new series of lenses launch one after another. That may be the case.

DE: This may sound funny, but I guess I wonder in years when you don't announce seven lenses, what are all the lens designers doing? <general laughter in the room>

Nikon: Oh, they don't have any idle time at all, believe me.

DE: So why, you know, two lenses, two lenses, then seven lenses?

Nikon: The engineering members are all in full utilization, actually. They're always busy working on the job of designing lenses. But you have to keep in mind that the lead time of a lens differs by the difficulty of designing it. So sometimes a lens can be developed in 2 years, sometimes 3.5. Then that kind of coincides with the launch timing, which is not even.

DE: Wow, that's very long, sometimes 3.5 years!

Nikon: Some very difficult ones, yes. For example, Phase Fresnel. That was a new technology, so it took a lot of time.

DE: Ah, so it was just a kind of coincidence that this was when they all came out of the pipe at the same time.

This wasn't in my prepared questions, but it makes me curious: When a lens takes longer, what is the process that's being done? Is it a matter of iterations, that you have to try something and then it doesn't work, so you try something else, it doesn't work, etc? What is it that takes more time on difficult lenses?

Nikon: Optics design would be one. Designing the optics would take longer, for one. A lot of time is consumed in the simulation of what the structure should be. I mean, the basic structure of how many lenses...

DE: How many elements, yes.

Nikon: ...and then the focal length, and all that. Actually, the standard of a lens is becoming higher and higher, compared to conventional film cameras, because we have more pixels, and the resolution is going up.

DE: So the design process is one of ... they will start in some direction and develop a preliminary design, see what the results are, and then they will change some things and try it again?

Nikon: When we do the manufacturing itself -- not the mass production, but when we actually design and make a camera [or lens] -- the engineering part is based on our plan. It's the simulation part where we do a lot of trial and error, which is done on the computer. But that's still also considered manufacturing, for us.

DE: It takes time to do a simulation, yes.

Nikon: The one that takes the most amount of time is giving birth to a new technology and the base R&D activity, as Yamamoto-san mentioned.

DE: Like Phase Fresnel.

Nikon: For example, yes.

DE: The OPTIA system was announced a while ago, but it never occurred to me to ask before; what was the first lens designed with it, or at least the first one that was announced?

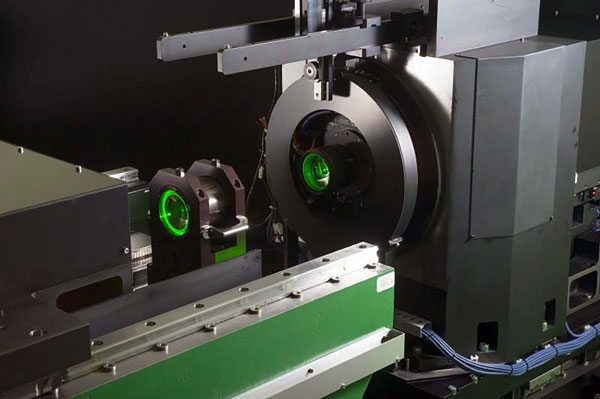

Nikon: It's hard to recall. Recent models are all through OPTIA. Actually, it's a device that measures all of the elements in the lens. It's not really a tool that we use to do the optical designing.

DE: Oh!

Nikon: It measures how the light waves reflect or penetrate, and then we simulate the combination of the lens elements, obviously. That's the designing part, but that's less relevant to OPTIA. The answer to your question is, the recent ones are all done through OPTIA. OPTIA has replaced the MTF measuring machine.

DE: Ah, yes, I see. So the old design software worked by ray tracing.

Nikon: Basically yes.

DE: So OPTIA makes the measurements, characterizes the elements, but now the design software is also different, to utilize that information in the simulation.

Nikon: Yes. It is also new. So the OPTIA looks at each element, one by one, the degree of bokeh and the aberrations. It looks at the light waves, basically. The design activity is to combine the elements in the best way.

DE: I said design, but I probably should have said that the simulation software is changed.

Nikon: OPTIA is a tool that allows us to obtain data for simulation. There's something that the ray trace can never do, and that is to see how the bokeh is for one lens, because we want some kind of a very tasteful lens as an element in the whole combination. Ray trace could never accomplish that.

[Ed. Note: Another mystery clarified. I'd heard about Nikon's OPTIA system a couple of years ago, but didn't really understand what it was. The publicly-available information talked about "wavefront modeling" or some such. From this, I had the impression that it was primarily a new simulation technique, some alternative to the ray-tracing algorithms that were commonly used in optical design. From Yamamoto-san's comments, though, it seems that the most important part of OPTIA is its measurement capability, an ability to much more fully characterize the individual lens elements than was previously possible. This enhanced understanding of the individual lens elements then plays into more advanced simulation software, but Yamamoto-san emphasized in his remarks that it was the measurement or optical characterization capability that was the most significant part of the advancement.

This was a new perspective on OPTIA for me, but I confess that I still don't remotely understand what it's all about. It seems to me that ray-tracing could ultimately fully determine the behavior of a lens, whether for in-focus or out-of-focus cases. Ditto chromatic aberration, geometric distortion, etc. After all, light rays are refracted through a lens element in a very deterministic fashion, depending on where they're originating from, whether within the plane of focus or either in front of or behind it, etc. My sense, though, is that representing the totality of a lens's optical behavior through ray-tracing (including bokeh effects) would be extraordinarily laborious, or at least insanely compute-intensive. Perhaps the key innovation with OPTIA is a way to characterize a lens element's behavior in a much more compact way.

As noted, I feel that there's still some fundamental piece missing in my understanding. The fact that Nikon refers to it as "wavefront aberration" measurement implies a completely different dimension or coordinate space than conventional lens characterization. I guess deeper details will have to wait until a time comes when I can interview the OPTIA system engineers themselves :-) In the meantime, it does sound like Nikon has a unique system that lets them more easily model bokeh, micro-contrast and other subtle characteristics of complex lens systems.]

DE: OK, I'll switch to the DL cameras now, to perhaps let someone else answer and give Yamamoto-san a rest. <laughter in the room>

We find the new DL cameras very interesting. They represent an entirely new market segment for Nikon, and you've entered it with a more varied line than any of your competition. These days, my sense is that companies tend to move gradually when expanding product lines, but you jumped right in with three very different models in the same general market space. That seems like a bold move. What can you tell us about your strategy in releasing all three models at once?

Nikon: First of all, and you may have heard this in the press release, the target customer for the DL series is the DSLR user. Our intent of launching this series was that we wanted to provide cameras for DSLR users with high performance, good lenses, and also good portability, 24/7. In order to meet these various needs, we knew that we had to provide various types of lenses, in a wide assortment. So that's why we had to launch three very different cameras, to cover these various needs.

DE: At the low end, the DL 24-85 seems to be priced pretty aggressively. It's $200 less than the Sony RX100 III, and $50 less than the Canon G7X II. These are both very strong products. Currently, the original G7X and Sony RX100 III are the number two and number four cameras on our site. What was your strategy in attacking these models? What do you see as the DL 24-85's key strengths?

Nikon: We agree with you that it's very competitive in the market because of two strengths. To answer your question, one key point is the lens performance, which we feel is very good. The other is moving subjects. The DL24-85 captures them very well, with a burst capture rate of 20 frames per second, which is a spec that no other rival has.

DE: And that's 20 FPS at full resolution?

Nikon: Yes.

DE: So you view lens quality and high capture speed as the two strengths, yes.

Nikon: And one more, excuse me. The SnapBridge function.

DE: Ah, SnapBridge, yes, that's very significant. I haven't tried it yet, but I think that's very important. [Ed. Note: SnapBridge is Nikon's latest connectivity solution, intended to make it very easy to connect its cameras to smartphones and share images. My early impression is that it does indeed go a long way towards making the integration between camera and phone seamless. As noted, though, I haven't played with it myself yet, so take that impression with the appropriate grain of salt.] The 18-50 is an unusual entry. It's currently the widest-angle offering in the 1-inch compact category. With such a strong focus on wide angle, it's something of a special interest camera. What led you to take such a different path from the rest of the field?

Nikon: We thought that this appeal point of having wide angle capability was an important one to nail, because many of the DSLR users -- again, that's our target for that whole series -- want to carry around a camera that has wide angle all the time, like 18mm.

DE: So really, the focus on the DSLR user is what led you to the wide angle?

Nikon: Yes.

DE: Very interesting. I'm curious, what are your expectations for the 18-50 in relative volume, compared to the other models? How do you think the volume will be distributed?

Nikon: This would be the ratio: if the volume for this is one, two for this, and one for this. (He was gesturing to the cameras as he spoke. The ratio was 1x for the 18-50mm, 2x for the 24-85, and 1x for the 24-500mm version.)

DE: Ah, I see. It's interesting to me that you expect the 18-50mm to be as popular as the 24-500mm. On the other hand, I can very easily see that; that wide a range of focal lengths with an aperture ranging from f/1.8-2.8 would be very appealing to a lot of our readers! Unfortunately, looking at my computer, I see that we're actually about out of time. Thank you very much for meeting with me!

Nikon (group): Thank you!