Competition grows, Sony reacts: 12 more lenses soon, AI autofocus & more

posted Thursday, September 27, 2018 at 6:52 AM EST

Clearly feeling the heat as Canon and Nikon enter the full-frame mirrorless space, Sony's Kenji Tanaka took the stage this morning ahead of the Photokina trade show to talk about the company's mirrorless strategy. As Senior General Manager of Business Unit 1, Digital Imaging Group, Imaging Products and Solutions Sector at Sony Corp., Tanaka-san is responsible for the company's interchangeable-lens camera strategy. As you'd expect, he was keen to score points against Sony's new rivals and their recent entries into the field, and so played up the places where Sony has an advantage.

The main message? Sony has been doing this a long time, and has more technology than anyone.

Sony has the broadest lineup

Starting out, Tanaka-san pointed to the breadth of Sony's current lineup, with no fewer than nine full-frame mirrorless models and 48 native lenses, spanning full- and sub-frame designs. While giving no time frame, he also announced that Sony would be adding 12 native lenses "in the near future" for a total of 60, with ongoing development beyond that.

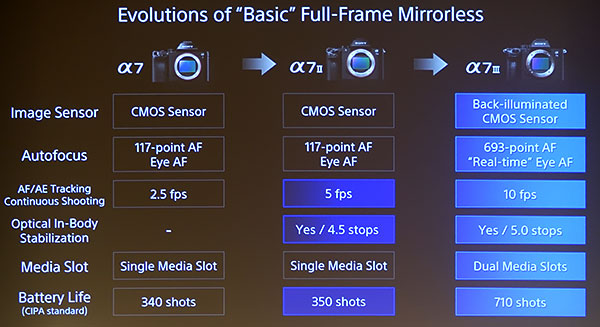

The breadth of Sony's full-frame lineup is a clear advantage for them. Models range from the original A7 -- still on the market and now costing just $1,000 for a full-frame camera/lens kit -- to the A9 for high-speed sports shooting, the A7R III for high resolution, and the A7S II for high sensitivity work, expanding the envelope significantly. No other maker has nearly this broad a lineup.

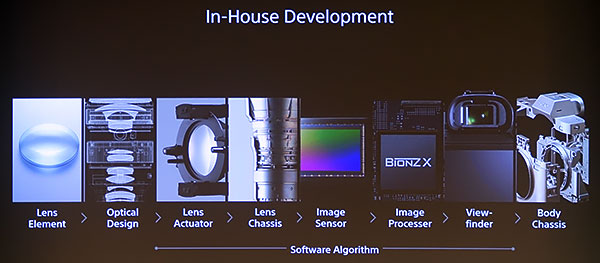

Sony is the only fully-integrated company

Tanaka-san also repeated a refrain that we've heard before from him, namely that Sony is the only fully-integrated company in the camera business, using its own technology and manufacturing to produce all elements of a camera system, from optics to actuators to sensors to processors to displays.

The point he makes is that in having all those technologies available in-house, Sony can innovate and develop them together in an integrated fashion, instead of just combining component parts. (Other manufacturers can access Sony sensors and electronic viewfinders, for instance, but lack the ability to deeply integrate sensors and processors the way that Sony can.)

(Interestingly, I learned that in addition to its huge sensor business, Sony is the dominant provider of EVFs for the camera business as well.)

One flange to rule them all

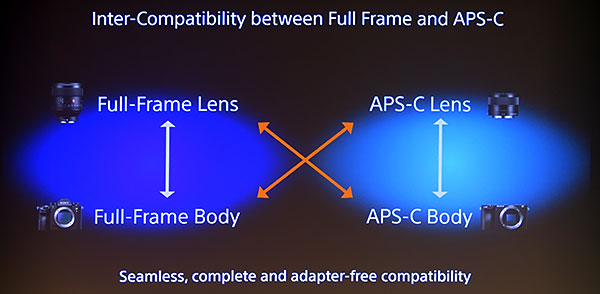

Apologies for the overreach there trying for a Lord of the Rings reference, but I think one of the most important points Tanaka-san made in his presentation was that Sony's system involves only one flange type, whether you're talking entry-level sub-frame or extreme high-end full-frame. This means that people buying into the system can invest in a relatively inexpensive APS-C body, but buy Sony's high-end lenses to use with it.

It's no secret that lenses have a much longer useful life than camera bodies, so the adage of "buy your last lens first" applies. If you're committed to your photo passion, you can invest in high-quality glass from the get-go, and it'll still be the best of the best when you upgrade your camera body. With both Canon and Nikon, the best new mirrorless lenses will only be usable on the new mirrorless bodies, because there's no way to adapt a lens designed for a shorter flange distance onto a camera with a longer one. (At least, not without adapters that have optics built into them, but those are pretty expensive in themselves, and it'll likely take a while before they're available to convert from Nikon Z- to F-mount or Canon RF- to EF-mount.)
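The flange-distance constraint comes down to simple arithmetic. Here's a rough sketch, using widely published flange distances (these figures weren't part of Sony's presentation, and the function name is purely illustrative). It ignores mount diameter and electronic compatibility, both of which matter in practice:

```python
# Flange focal distances in millimetres. These are commonly published
# figures, assumed here for illustration; they are not from the presentation.
FLANGE_MM = {
    "Sony E": 18.0,
    "Nikon Z": 16.0,
    "Canon RF": 20.0,
    "Nikon F": 46.5,
    "Canon EF": 44.0,
}


def adapter_thickness_mm(lens_mount, body_mount):
    """Thickness a simple glassless adapter would need, or None if impossible.

    A lens expects to sit at its own mount's flange distance from the sensor.
    An adapter can only add distance, so the lens's flange distance has to be
    greater than the body's for a plain spacer to work.
    """
    gap = FLANGE_MM[lens_mount] - FLANGE_MM[body_mount]
    return gap if gap > 0 else None


if __name__ == "__main__":
    for lens, body in [
        ("Nikon F", "Nikon Z"),    # DSLR lens on mirrorless body
        ("Nikon Z", "Nikon F"),    # mirrorless lens on DSLR body
        ("Canon EF", "Canon RF"),
        ("Canon RF", "Canon EF"),
    ]:
        print(lens, "lens on", body, "body:", adapter_thickness_mm(lens, body))
```

Going from a DSLR lens to a mirrorless body leaves room for a spacer; going the other way would need a negative thickness, which is why optics are required.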

Size isn't everything :-)

Nikon and Canon have been playing up the advantages of large lens mounts lately, calling attention to the optical advantages of their new, very large-diameter mounts. Sony's response to this was terse, asking the question "Does a large-aperture, high-quality lens require a large mount diameter?", to which they replied "No."

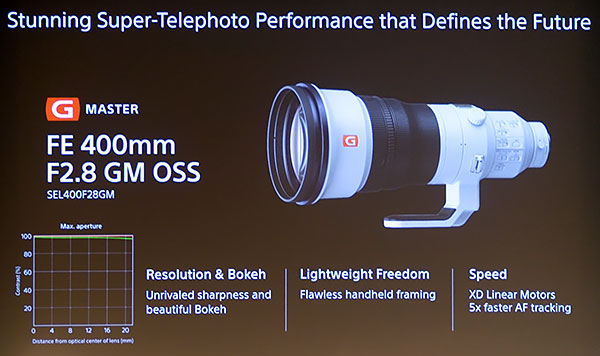

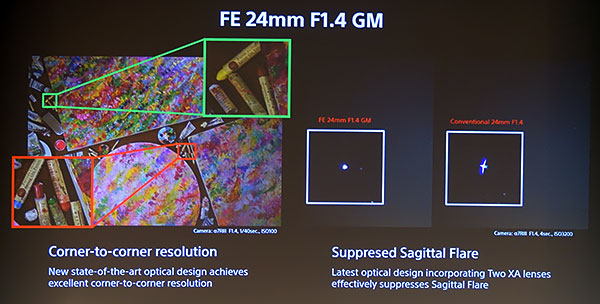

To back this up, Tanaka talked about Sony’s 400mm f/2.8 super-tele and just-announced 24mm f/1.4 G Master lenses. An MTF diagram that was flashed on the screen for the 400mm f/2.8 looked extremely impressive, but wasn't labeled to say what lines/mm the curves were drawn for. Still, there's no question that it's a quality optic, and the 24 f/1.4's performance seems exceptional too, from our early experience with it.

It'll be interesting to see how this plays out. Personally, I think what it boils down to is that larger flange diameters make it easier for optical engineers to deal with aberrations, but there isn't any sort of an absolute limit involved anywhere: If you throw enough lens elements with fancy enough aspheric surfaces and exotic glass at the problem, you can make a fantastic lens.

And Sony seems to have done just that with both the 400mm f/2.8 and 24mm f/1.4 lenses, pending final judgement from lab and more extensive field tests. What will be interesting to track is whether "easier to design and build" translates into better bang for your buck in terms of how much you have to spend to get a lens of a given quality.

As with most things, flange diameter likely ends up being a matter of degree, and just one of the factors in lens design and manufacture. I think it's a significant factor, but Tanaka-san successfully made the point that it's not some sort of absolute limiting factor.

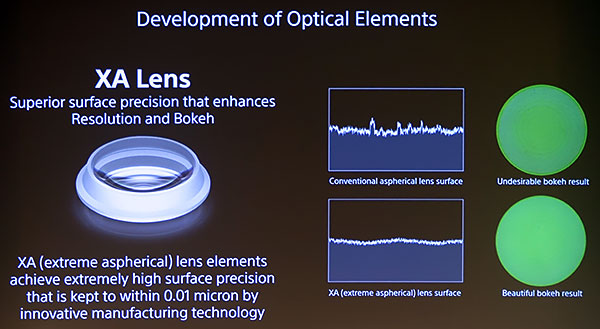

Specific tech: XA Optics

Sony seems particularly proud of its new "XA" optics, as seen in both its 400mm f/2.8 super-tele and the new 24mm f/1.4 wide-angle prime. The key to XA optics is that they're molded aspherics with unusually smooth surface profiles, having a surface roughness of less than 0.01µm. This avoids so-called onion-ring bokeh, and thus lets lens engineers use aspherics more freely in their designs without risking ugly bokeh effects.

This technology seems similar to what I saw several years ago in a Panasonic factory, but wasn't able to report on at the time. Aspheric molds are created on computer-controlled lathes, using single-point diamond cutting heads. This leaves a microscopic spiral groove on the mold surface that's transferred to the glass elements during the molding process, and the resulting ripples can cause onion-ring bokeh if the aspheric surface happens to be at the wrong place in the optical formula. Techniques doubtless differ between manufacturers, but polishing out the microscopic grooves on the aspheric mold blanks produces smoother glass elements, and much better-looking bokeh.

Regardless of how they do it or who did it first, the 0.01 micron spec is pretty mind-boggling. That's only 10 nanometers, or about 1/50th of the wavelength of green light. I'm amazed that they even need to control the surface profiles to that degree to control onion-ring bokeh, let alone that they've achieved it.
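As a quick check on that arithmetic, here's a small sketch. The 550nm figure for green light is an assumed, typical value rather than anything from the presentation, and the function name is just for illustration:

```python
GREEN_WAVELENGTH_NM = 550.0  # assumed typical value for green light


def roughness_vs_wavelength(roughness_um, wavelength_nm=GREEN_WAVELENGTH_NM):
    """Return (roughness in nm, roughness as a fraction of the wavelength)."""
    roughness_nm = roughness_um * 1000.0  # 1 micron = 1000 nanometers
    return roughness_nm, roughness_nm / wavelength_nm


if __name__ == "__main__":
    nm, fraction = roughness_vs_wavelength(0.01)
    print(f"{nm:.0f} nm, roughly 1/{1 / fraction:.0f} of the wavelength")
```

With that assumption the ratio comes out nearer 1/55 than 1/50, which is the same ballpark either way.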

AI Autofocus

You heard it here first: artificial intelligence is going to be *the* big thing in autofocus over the next few years. I've heard this from several manufacturers now, but Sony seems to be leading the pack. Of course, *everything* is "AI" now, just like everything was "nano" a couple of years ago, and there's a lot of confusion over exactly what that means.

When it comes to autofocus, though, the bottom line is that there's a lot of room for cameras to get smarter in understanding what the subject of a photo is. A *lot* of room. And that's what AI autofocus is all about. It turns out that Sony's celebrated and incredibly useful Eye AF is based on an AI-type approach, and in the strategy briefing, they showed multiple examples of eye-detect working with animals as well.

Talking with execs later, it turns out that detecting eyes on animals is waaaay harder than with humans, because there's so much variation in animals' "faces". With humans, you can pretty much count on a basic shape, the eyes being spaced a certain distance apart, having a particular relationship to the nose and mouth, etc. (And I imagine eyebrows are pretty helpful too.) But with animals, how do you relate a cat to a dog to a bird? There's not a lot in common, but it's trivial for a human brain to tell where the eye is.

Along the way, Tanaka-san made the point about how much Sony's Eye AF has advanced over the years, in that it now works with glasses, from the side and for backlit faces. Oh, and it works with continuous autofocus, as well... :-0

Finally, while it's AI-based, Tanaka said that Sony actually has a dedicated hardware engine for real-time human eye detection. I found this especially interesting, that they'd have special hardware dedicated to finding eyes.

Eye AF is just one example; AI is *the* big untold story in autofocus these days. (If I can find time, there's an article waiting to be written there.)

I'll have a bit more on this whenever I can manage to write up my subsequent one-on-one interview with Tanaka-san. Sony's tech runs deep, and as I've suspected in the past, their stacked-sensor technology (particularly the version with memory and processing elements being part of the stack) has a lot to do with their AF prowess.

Summary

It was an interesting and well-done presentation overall. With Canon, Nikon, and now Panasonic and Sigma all either entering the full-frame mirrorless world or announcing their imminent entry, Sony is suddenly feeling the heat and has strong competition where there was none before. So the company needs to change its messaging and up its marketing game if it wants to maintain the growth it's enjoyed over the last few years.

But as Tanaka-san pointed out, Sony does in fact have a lead in a number of areas, and some unique technology to apply. One thing's certain, though: With all the major competition now in the market, there's never been a better time to be a photographer!