Sony Q&A @ CP+ 2019: The future of AI autofocus; what else is next. Plus, expect more APS-C bodies!

posted Friday, March 22, 2019 at 11:27 PM EDT

I had a chance to sit down with Kenji Tanaka from Sony, as part of my usual round of interviews at this year's CP+ 2019 trade show in Yokohama. Tanaka-san is the Senior General Manager of Business Unit 1 of Sony Imaging Products and Solutions Inc's Digital Imaging Group. That's a typically Japanese-business-card mouthful, but he's basically the guy responsible for Sony's interchangeable-lens camera strategy. He also has a very strong engineering background, so has an excellent grasp of just what the technology can do now, and where it's going. All this makes him an excellent interview subject, and I think many of our readers will find what follows as interesting as I did while talking to him.

Read on for all the details, and feel free to chime in on the comments below, to share your thoughts on any and all topics discussed!

Dave Etchells/Imaging Resource: We'll start off with a question that we’re asking everyone: Canon's chairman and CEO Fujio Mitarai went on record recently as saying that he expected the interchangeable-lens camera business to contract by half over the next two years. Do you agree with this assessment? What are your projections for how the ILC market will evolve over the next two to five years?

Kenji Tanaka, Senior General Manager, Business Unit 1, Digital Imaging Group, Imaging Products and Solutions Sector, Sony Corp.

Kenji Tanaka/Sony: The worldwide ILC market overall was down about 8% on the unit shipment basis last year according to CIPA, but Sony's Alpha is not following that trend. Based on simple calculation, two years from now, the market will be less than 80% compared to now. But not half.

DE: Not by half. So it might be...

KT: But then, recently there has been a momentum of new cameras. One area is full-frame mirrorless, as you know well. And the full-frame mirrorless' growth is of course more than 100%. Almost more than 150%.

DE: You mean another 150% on top of the previous year, or do you mean 50% growth?

KT: 50% growth, yes.

DE: Hai.

KT: Another market movement comes from the smartphone users, especially in the movie area. As you know well, the smartphone business market is one billion units plus. [Ed. Note: Actually, per IDC it was 1.4 billion units in 2018.] Just one percent of that is the same amount as all of the ILC business. [Ed. Note: And perhaps even larger.] If just one percent of smartphone users and creators have an interest in ILCs, the business is going to double. Mitarai-san said the total camera business is going to be half, but I think of course, the situation could go both ways. We have both risk and opportunity sides. This is my understanding.

DE: Yeah, yeah. So there are big challenges. What are your plans to get more smartphone users to use ILCs?

KT: So our new model, the Alpha 6400, has a free-swiveling panel, a touch-panel, and it has a microphone jack. Of course, it's targeted for conventional still users, but at the same time, we're targeting movie creators. YouTube vloggers, that type of people who use smartphones.

DE: Right. Hai, hai. Sony has said for a long time that your aim is to grow the market, not just take sales from Canon and Nikon, but the overall market is still decreasing. So currently, your growth is still really coming at the expense of Nikon and Canon, I think. To some extent, we already discussed this [with his comments about the overall market, vs Sony's own growth], but I guess I could ask: Smartphone users are going to be accustomed to a very small device they can just put in their pocket. How many will be willing to take on a larger device?

KT: Mmm.

DE: Your full-frames are very compact but still, it's a much bigger system. The A6400, as you said, is aimed at the smartphone users, but it seems overall if you want to capture more of that market, you need to focus much more on APS-C, no?

KT: Yeah, I think so. Sorry, I think the last two years, already we asked for patience. Many [media outlets have asked]...

DE: ...whether you're neglecting APS-C...

KT: But I said that, no, for us that market is very important. But before that when we had things to do [Ed. Note: that is, priorities to set], that was the full-frame. Because full-frame for the brand, a kind of symbolic thing.

DE: Mmm.

KT: Because before APS-C, we had to create the symbolic brand or symbolic products. So we first started to create the full-frame mirrorless.

DE: Yeah, yeah, yeah.

KT: But yeah, as I said before, our target is to increase the camera business as a whole. So right now, we are [proceeding to the] second step, that is APS-C. First of all, we released the A6400.

DE: Mmm, so the A6400 is the first product of the new line. There are enthusiasts who want APS-C, and it's been a very, very long time since you had a high-end APS-C camera. Way back, a long time ago, you had the NEX-3, the NEX-5 and the NEX-7. I think for the enthusiasts, the A6400 was a good sign, but it still feels like it's not for us, or not for enthusiast still-shooters.

KT: Yeah, I think you're right, and we have the opportunity to develop a type of enthusiast APS-C model, and the enthusiasts, especially the US ones are waiting for this type of model. But you know, last year, all the owners, especially at the US market, we conducted a market experiment, and for Alpha 7 Mark II, we kept the price at US$999. And I want you to know that the result was more than our expectation. That means that many enthusiast customers bought the Alpha 7 Mark II, and the Alpha 7 Mark II is a full-frame model, so my understanding is that somehow the customers are overlapped between high-end APS-C and entry-level full-frame.

DE: Yeah, yeah.

KT: So which way is the best for us, now I am thinking.

DE: Yeah.

KT: Looking at Canon, and they launched EOS RP. And looking at Fuji, they are trying to create the high-end APS-C. The market looks different, but the customers somehow overlap. Which way, or both ways, is good for Sony, that's what I'm now thinking. But technically, we can do both. <laughs> Of course.

DE: Yeah, it's interesting that Sony managed to bring down the price to US$999. That's a very significant price-point, but I think it was an older model and it wasn't advertised a lot any more. But even with that, you saw a big increase in sales, you said, more than your expectations?

KT: Mmm.

DE: Related to that, I know just from our reader activity that Canon's EOS RP has drawn a lot of attention, I think partly because of its price-point. But it admittedly also has had the advantage that it's a new model, a new announcement.

KT: I have seen those lenses. So the total cost for both the body and lens, that is very important.

DE: Yes, it's interesting because Canon has taken the strategy of very high-end lenses, but mid-to-low end bodies. I think a big factor that has a lot of impact for people is that through the end of March they are including the mount adaptor for free, and there are many, many, many existing Canon users. Also, even for someone who is not on the platform already, there are many, many EF lenses available on the used market. You go on eBay, you can find lots of lenses, many of them very inexpensively. They may not be the highest performance compared to an RF lens, but I think that they'll be a "good enough" scenario for many people, and I think that if people are looking at the platform, they can see it's very, very affordable with EF-mount.

KT: Mmm, mmm, mmm.

DE: Yeah, I wouldn't want to be in your shoes, having to decide between high-end APS-C versus low-end full-frame. ...So, you recently showed off your real-time tracking...

KT: Mmm!

DE: ...and the A6400; it was good for single-shot, but continuous shooting is difficult. The Sony A9, though, was very high-performing. We've been told that that technology involves AI, but it's not clear really how, or what aspects of AI it uses. Olympus, with the E-M1X, uses AI with very different kinds of subjects. You know, cars, trains, planes. What type of practical improvements do you think we might see in autofocus in additional firmware updates on the A9?

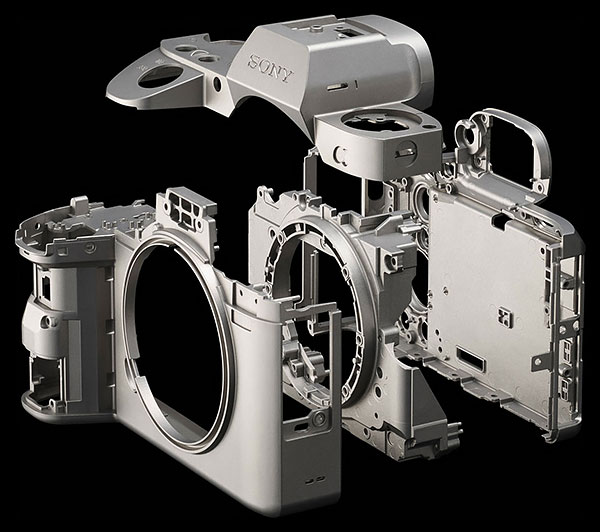

KT: First of all, before talking about AI, I think the most important thing is our high-speed platform, no? On top of a high-speed platform, we can create AI, but if the platform is slow, the moving subject will be gone! But our platform has a very high speed. That is our special talent or unique technology. Then, on top of this high-speed platform, we have an AI engine.

DE: Mmm-hmm.

KT: As the first step, we investigated what type of subject people realistically are shooting right now. The most [common] subject is the eye. And another one is animals, and the birds, of course the car kind of thing, that kind of an object, we can create the [dictionary] using deep learning. Just set up a dictionary on top of our high-speed platform, [and the] AI can calculate the subject position.

DE: Mmm, mmm.

KT: So the first step is [recognizing] eyes, but of course, we will expand the subject types. Right now I cannot tell you, but we already have a kind of organization, to create a dictionary.

[Ed. Note: This is significant, that he cited an organizational structure specifically created to build AI dictionaries for autofocus. As I noted, developing AI dictionaries or data clouds for object-recognition is a huge effort, requiring thousands and thousands of tagged images. It's a fair bet that every major camera company is working hard on this, but it seems significant that Tanaka-san called it out as a separate organizational structure.]

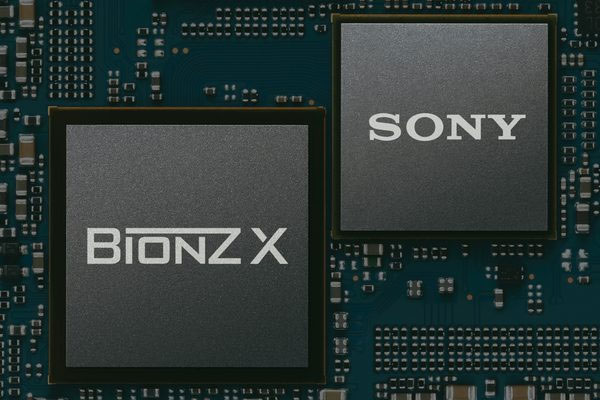

DE: Ah, interesting! I think that in your architecture, in a previous conversation, I heard that there is specific hardware for executing deep-learning type algorithms in there. It doesn't just rely on a general-purpose processor, and that may be a difference for your AI versus what Olympus is doing. They are using the general-purpose CPU to execute AI in the E-M1X. (That's OK for me to say, because it was in an interview.)

KT: <laughs>

DE: Yeah, they explained that they felt their algorithms were changing so much that they didn't feel it was practical to build special-purpose hardware. They needed more general-purpose processing, and so that's why they have two four-core chips in the E-M1X. I guess it could be either a good thing or a limitation, but it seems to me to be good to have dedicated hardware for executing deep-learning. Those dictionaries for deep learning, though, need to be loaded onto a camera. From what I understand, those are very large chunks of data; I think on the order of gigabytes.

KT: Mmm.

DE: Is there room? In A9, do you have space...

KT: Talking about dictionary size, it's actually not a big deal. We can have several. But now, my concern is how to connect or how to realize the relationship of each dictionary. Of course, the first step is that the customer chooses eye AI autofocus from the menu, or changes to animals. If the human user chooses the dictionary type this way, it is OK. But in the final version, the customer [shouldn't] need to choose the dictionary every time. Dictionary-changing automatically, that type of thing is very hard, you know. We cannot do that. So the size of dictionary is not a big deal, but if there are 10 or 100 dictionaries, how to choose just one?

DE: Ah, right. Because the camera has to already have that specific dictionary loaded, in order to recognize that a particular type of object is there.

KT: Yeah.

DE: So if you have the human eye dictionary loaded and there's a racecar, it's not going to say "Oh, that's a racecar!" Because the dictionary is what lets it recognize human eyes.

KT: Of course, the human user can choose one dictionary out of 100, but it takes too long.

WB: And then if the camera tries to automatically do it, you're saying that's...

KT: Yeah, yeah. That kind of technology we need, but right now we don't have it. But we want to do such a kind of thing. So the number of dictionaries should increase.

DE: Yeah.

KT: 10, 20, 30, 40, 100, 1,000 [dictionaries] maybe. And we can store those in memory. But how to choose? That kind of thing's very difficult. So our dictionary currently handles eye autofocus and animals. Maybe we can add more, the next, next, next. Three is OK. Four is OK. But five, ten?

DE: Yeah, it will get cumbersome for the user to choose. I think, though, that within a given situation, for instance cars, you're at a racetrack, so it's OK to select cars. Someone else might be taking pictures of birds, so they can select birds. It's unlikely that a person shooting the auto race would suddenly want to take a picture of a bird, so that kind of manual selection is OK. But maybe too, though, the person at the racetrack might want to take a picture of a driver, and all of a sudden they'd need eye AF.

WB: Yeah. Hmm.

DE: And so yeah, to switch automatically... It's interesting, though, that you said the size of the dictionary in the camera is not very big. Generally, my understanding was that it's a very large chunk of data, like maybe a gigabyte of data for one dictionary.

KT: I don't think so.

DE: Huh, that's interesting. So relative to your products, the A9 has room for more dictionaries and you are continuing to develop them...

KT: Yes. [Ed. Note: This bit is particularly interesting, as it suggests that Sony is using a fundamentally different approach for its AI AF than Olympus is. In the case of Olympus, while the engineers weren't very specific, they did say that the data "clouds" for the different subject types in their AI system were quite large. I wonder how the two approaches differ, that there can be such a great difference in the size of the data clouds or dictionaries, and how those differences might translate into real-world functionality?]

DE: ...and then it's just a matter, as you get many of them, of how do you select the right one. Very interesting.

KT: Yeah. From last year we said that speed and AI were key... Of course speed like the Alpha 9, with the stacked image sensor; we've focused on that kind of high-speed technology and then at the same time, AI is our focus point.

DE: So it's like you have done the speed part with A9, and now you're focusing on "What can we build on top of that"?

KT: Yes, exactly.

DE: Sometimes I say that consumers need even better autofocus than professionals, because they need the camera to do more for them. But the high-speed sensor technology is very expensive. As I understand, the A6400 processor is the same or similar to A9, but the sensor is much, much slower. Is that fair to say?

KT: The processor is the same generation as in the Alpha 9. So the processor performance is enough. [Ed. Note: At first I heard this as meaning that it was the same processor in the $900 A6400 as in the $4,000 A9, but when we checked with Sony, what was said was that the A6400 processor is just the same-generation, versus being the identical chip as in the A9. Still, the point is that the basic processor in the A6400 has the ability to process image information and perform AI-based AF calculations very rapidly.]

DE: Ah, the same. So that's why the A6400's tracking in preview mode is very fast and basically real-time, and it can grab a single shot just fine, but then in continuous shooting it starts lagging. That's because of the sensor.

KT: Yeah. So only the Alpha 9 has a blackout-free function. That comes from the stacked image sensor.

DE: As time goes on, will the cost of stacked technology come down enough that it will fit cost-wise into consumer cameras?

KT: Can it come down enough? <laughs> Unfortunately, the stacked image sensor is still very high-price. This is my personal opinion. In the semiconductor business, if we use a lot of image sensors, the bulk price is going to go down. That is a theory of the semiconductor business. Volume covers the price.

DE: Yes. And of course, the stacked is just a more complicated technology. There's a lot going on there, so it's going to be always more expensive than not stacked. But on the other hand, the cell-phone sensors that Sony makes use stacked technology, so that also helps drive the cost down. It sounds like, really, there's no way to tell how much it's going to come down over time, and whether that will be enough for entry-level models too.

KT: Well, this is another area, but looking at the 4K TV, the price! That kind of thing, if the mass production is running very well, the price is going to go down. [Ed. Note: I had no idea just how cheap 4K TVs had gotten. I checked Amazon, and you can even get an off-brand 39 inch TV for as little as $150! :-0]

KT: You know, only Alpha 9 has the stacked image sensor. And so right now we're still learning how to decrease the price, but we need higher sales volume, yeah.

DE: Yeah. So the cellphone people have extremely high volume, but they're tiny little sensors and so when it comes to full-frame sensor volume with the stacked technology, it's very small volume compared to cellphones. I will just tell our readers to buy hundreds of thousands of A9s, and then the price will come down for everybody. :-)

<much laughter>

DE: This is also about AI, but it's a very open-ended question. We're focusing -- no pun intended -- on AI for autofocus. Do you think AI technology has other applications inside cameras?

KT: Yeah, I think so. Especially we are thinking to study computational photography [applications]. In the smartphone market, I think computational photography is one way to create a new [better] image. That kind of thing, of course we can do too, I mean the ILC (interchangeable-lens cameras) can do [too]. So now we are interested in computational photography.

DE: Mmm, mmm.

KT: A long, long time ago, when I was an engineer, I visited SIGGRAPH.

DE: Oh, SIGGRAPH, yeah, long time ago. <chuckles> [Ed. Note: It has been a long time since Dave was in the part of the imaging business that SIGGRAPH is relevant to, but the show continues to this day; it's next scheduled for July 28-Aug 1 in Los Angeles.]

KT: Yeah, more than 20 years ago. At that time I was very interested in computational photography type of things, and neural networks. It was the very beginning of neural networks.

DE: Mmm, mmm.

KT: So that kind of the technology continued to grow, continued to [become] easier to handle. So maybe computational photography will be the way for the next generation, [the way to attract customers to] our ILC cameras. So not just now, but [seeing it grow for] more than 20 years consecutively, I'm very positive about computational photography.

DE: Yeah, it seems like there are many things having to do with improving images that AI might be applied to. I don't know, maybe cropping, finding subjects, extracting 3D information from shading would be big.

KT: Yes.

DE: We're probably about out of time, no?

Sony PR: One more question.

DE: Last question, lots of pressure now!

KT: <laughs>

DE: One of my editors asks about products relative to the Tokyo Summer Games, because you have the 400mm f/2.8. I know you can't say anything about new products coming, so... <laughs> [Ed. Note: As we noted recently in another interview, the International Olympic Committee is extremely protective of the "Olympics" brand, so non-sponsors and non-advertisers can't use the term "Olympic Games", but have to resort to alternative terms like the "Summer Games" instead.]

<much laughter>

DE: ...I can't ask that.

KT: Well, but we're implying many things. Of course we are trying to expand the APS-C series of cameras. That maybe implies not only one product. And we implied we are working on the sports area, that implied not only a 400mm f/2.8 lens is coming. So we are implying many things, but not directly saying "next model is this one, the next model is this one". But we're focusing on sports and we're focusing on the APS-C area.

DE: So it seems safe to say there are things coming.

DE: Ah, and your focus on both growing APS-C and doing more for sports photography, as in the 400/2.8, also touches on your one-mount philosophy.

KT: Yes, we have a one-mount strategy. So we are trying to increase our APS-C customers; it's very easy for people to go up and down [between sensor sizes]. Full-frame users can easily use a sub camera, and our APS-C camera customers can very easily go up to our full-frame mirrorless.

DE: Yeah; if they want, they can buy a full-frame lens, use it on their APS-C camera, and it's a completely capable full-frame lens, for when they want to move up to a bigger sensor. And full-frame photographers can easily carry a second APS-C body, without worrying about mount adapters.

KT: This is what we want to communicate.

DE: Yes, the single mount across all of your products is something that's unique to Sony... Well, it looks like we're out of time, thank you very much for all your answers!

KT: Thank you.

Summary

Wow, there's lots of notable stuff here...

The first is that Tanaka-san does not fully agree with Canon CEO Mitarai-san's gloomy assessment for interchangeable-lens cameras, although his assessment is more equivocal than those of some other execs we talked to. The wild card is to what extent Sony or other camera companies can convince even a small percentage of the billions of smartphone users to upgrade to a "real" camera; even an almost vanishing percentage of smartphone users deciding to step up would easily double total ILC sales.
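For anyone who wants to check the arithmetic behind those market numbers, here's a rough back-of-the-envelope sketch in Python. The smartphone figure is the 1.4 billion units (IDC, 2018) from my note in the interview, and the 8% yearly decline is the CIPA number Tanaka-san cited. The ILC unit count of roughly 10.8 million is my own assumed round number, not something stated in the interview, and the function names are purely illustrative.

```python
# Back-of-the-envelope market arithmetic. Only the smartphone figure and the
# 8% decline come from the interview; the ILC unit count is an ASSUMPTION.
SMARTPHONE_UNITS_2018 = 1_400_000_000  # IDC figure quoted in the editor's note
ASSUMED_ILC_UNITS = 10_800_000         # assumed round number, not from the interview
CIPA_ANNUAL_DECLINE = 0.08             # "down about 8%" per Tanaka-san


def share_needed_to_double(ilc_units: int, smartphone_units: int) -> float:
    """Fraction of smartphone buyers who'd need to buy an ILC to double ILC unit sales."""
    return ilc_units / smartphone_units


def market_ratio_after(annual_decline: float, years: int) -> float:
    """Market size relative to today after a constant yearly decline."""
    return (1 - annual_decline) ** years


def required_annual_decline(target_ratio: float, years: int) -> float:
    """Constant yearly decline needed to shrink to target_ratio within the given years."""
    return 1 - target_ratio ** (1 / years)


one_percent_of_phones = SMARTPHONE_UNITS_2018 // 100
share = share_needed_to_double(ASSUMED_ILC_UNITS, SMARTPHONE_UNITS_2018)

print(f"1% of smartphone units: {one_percent_of_phones:,}")
print(f"Share needed to double ILC sales: {share:.2%}")
print(f"Market after 2 years at 8%/yr: {market_ratio_after(CIPA_ANNUAL_DECLINE, 2):.1%}")
print(f"Yearly decline needed to halve in 2 years: {required_annual_decline(0.5, 2):.1%}")
```

On those assumptions, well under 1% of a single year's smartphone buyers would match the entire ILC market. A steady 8% yearly decline would leave roughly 85% of today's volume after two years, while halving the market in that time would take a drop of nearly 30% per year.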

We talked some both early on and later about Sony's APS-C cameras, and their latest A6400 model. Significantly here, Tanaka-san said that they were indeed focused on their APS-C lineup, and that they view it as strategically important for increasing their market reach. While as always declining to comment on specific models, Tanaka-san went further than most execs to observe that they were strongly implying that there would be more APS-C bodies coming. (As well as more sports-oriented large-aperture lenses like their incredible 400mm f/2.8.)

As any of you who've read more than a few of our executive interviews will know, it's very rare for camera company execs to give any indication at all of future product plans, so simply the fact that Tanaka-san chose to make as strong a point as he did about the likelihood of forthcoming APS-C models struck me as a particularly positive indication.

Then there's the whole area of AI-based autofocus, a very hot area in the industry as a whole lately, and one in which Sony's been working hard for several years now. (Their extremely effective Eye AF was among the first fruits of these efforts.) There were two significant pieces of information here. First, that Sony's AI AF technology doesn't seem to need the huge "data clouds" that I'm accustomed to hearing about, associated with each subject type. The amount of storage space required for what Sony calls AF "dictionaries" isn't the issue; Tanaka-san's concerns are more about user-interface issues. It's no problem having the user select the subject type you want if there are just two or three types, but what do you do if there are 10, 20 or more different AI AF subject categories? But the camera can't do the subject-switching itself, because it needs to have a given dictionary loaded in order to be able to recognize that subject type. So if you have the "cars" dictionary loaded, it wouldn't be able to recognize that it needed to switch to eye-based AF if you decided you wanted to snap a picture of one of the drivers. That said, though, a camera like the A9 apparently has plenty of space in its memory to hold multiple subject dictionaries.
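To make the selection problem a bit more concrete, here's a toy Python sketch. It's emphatically not Sony's implementation: the "dictionaries" are hypothetical keyword matchers standing in for trained recognizers, and every name in it (`DICTIONARIES`, `track_manual`, `track_auto`) is invented for illustration. It contrasts a user-selected dictionary with one naive automatic approach, which is simply to try every stored dictionary and keep the best match.

```python
from typing import Callable, Dict, Optional, Set, Tuple

# A "dictionary" here is just a function that scores how well a scene matches
# one subject type. Real recognizers are trained models; this is a stand-in.
Detector = Callable[[Set[str]], float]


def make_detector(keywords: Set[str]) -> Detector:
    """Build a toy detector: the score is the fraction of keywords found in the scene."""
    def detect(scene: Set[str]) -> float:
        return len(scene & keywords) / len(keywords)
    return detect


# Hypothetical subject dictionaries held in camera memory.
DICTIONARIES: Dict[str, Detector] = {
    "human_eye": make_detector({"face", "eye"}),
    "animal_eye": make_detector({"fur", "eye"}),
    "car": make_detector({"wheels", "bodywork"}),
}


def track_manual(scene: Set[str], selected: str, threshold: float = 0.6) -> Optional[str]:
    """User-selected mode: only the chosen dictionary is consulted."""
    score = DICTIONARIES[selected](scene)
    return selected if score >= threshold else None


def track_auto(scene: Set[str], threshold: float = 0.6) -> Tuple[Optional[str], int]:
    """Naive automatic mode: score every dictionary and keep the best match.

    Also returns how many dictionaries were evaluated, to show that the work
    grows with the number of dictionaries stored.
    """
    best_name: Optional[str] = None
    best_score = 0.0
    evaluations = 0
    for name, detect in DICTIONARIES.items():
        evaluations += 1
        score = detect(scene)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        best_name = None
    return best_name, evaluations


# At the racetrack with "car" selected, a driver's face goes unrecognized...
driver = {"face", "eye", "helmet"}
print(track_manual(driver, "car"))  # None
# ...while the brute-force version finds it, at the cost of running all three.
print(track_auto(driver))           # ('human_eye', 3)
```

In this toy version, the automatic mode has to run every dictionary on every frame, so the work scales with the number of subject types. That's one plausible reason, under these assumptions, why storage space isn't the bottleneck but choosing among 10 or 100 dictionaries is.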

The second significant revelation about AI AF within Sony is that they have an organizational structure set up specifically to create new AF subject dictionaries. This is encouraging, in that it suggests we'll be seeing more subject types rolling out going forward, in addition to the Eye AF and Animal Eye AF types we have currently.

Of course, I'm sure the other companies have similar ongoing efforts, particularly Olympus, who's also said explicitly that more subject types will be coming. Given that AI AF is such a hot area, and one of the few areas by which cameras can differentiate themselves these days, I expect to see a real footrace between all the players, looking to one-up each other with AI AF subject types. (Face it, current raw image quality is pretty darn good all around; there aren't going to be any 2x improvements in that area in any foreseeable time frame, without some entirely new sensor technology. We're up against basic limits of physics, when it comes to silicon sensors.) So stay tuned, we're going to be seeing a lot of AI AF activity over the next year or two, and Sony has set up a specific structure within their organization just to develop new subject types.

But AI AF needs a fast underlying platform to work from. Sony has this with the image processor in their A9 and A6400 (with the version in the A6400 apparently very close in ability to that of the A9), and in their A9 with its stacked-CMOS sensor design. A big question is whether and/or when stacked sensors will come down in price enough to fit into an entry-level price structure. (Tanaka-san cited the example of 4K TVs. They involve not only a lot of complex circuitry but huge display panels as well, and you can buy a pretty decent one these days for $200-300.) As I noted in the piece, if even 1% of IR's total readers went out and bought an A9, we'd have cheap stacked sensors next year. (Please use our affiliate links when you do, though ;-)

One of the next big frontiers Tanaka-san sees for camera technology is so-called computational photography. This is a pretty broad term, that can include anything from basic image enhancement to 3D shape extraction, just based on object shading. He's been watching the whole area for 20 years now, and expects it's going to have a real impact on camera technology in years to come.

Finally, there's a nod to Sony's "one mount" philosophy, meaning that all of their camera models use a single lens mount, so users can move freely back and forth between full-frame and crop-frame bodies, without having to worry about lens adapters. One practical impact of this is that someone can start out with a Sony APS-C body, but buy their latest FE (full-frame) lenses to use with it. Then, if they eventually move up to a full-frame body, the FE lenses they've been using with their crop-frame body will work just fine. Likewise, full-frame shooters can easily add an APS-C body to their kit, and swap lenses back and forth however they like. While other manufacturers have good forward-compatibility (older lenses will work just fine on their new mirrorless bodies, via adapters), there's no backward-compatibility, that would let you use the latest mirrorless glass on older DSLR bodies.

As always, a great interview; Tanaka-san is the guy inside Sony when it comes to future ILC development, and also has a very strong engineering background, so his comments have a lot of depth and carry a lot of weight...