500 Newsletters later, there’s never been a better time to be a photographer!

posted Thursday, June 24, 2021 at 5:00 PM EST

Wow, 500 newsletter issues, the first one dating back to September 16, 1999… That surely takes me back! It's beyond amazing to see how far the photography world has come in that time. (Ai yi yi, that's almost 22 years ago; can I really be that old? Don't answer that… :-)

That first issue wondered if it might be a "2 megapixel Christmas", referring to the "magical … 2-megapixel resolution barrier". Yeah, a whole two megapixels. But did you really need it? After all, you could get a nice little 1.3-megapixel camera for only $299! Sure, the Fuji MX-1200 only had a 2x digital zoom, but you could get a 1.3-megapixel camera with a real 3x optical zoom for only $499, in the Olympus D450 Zoom.

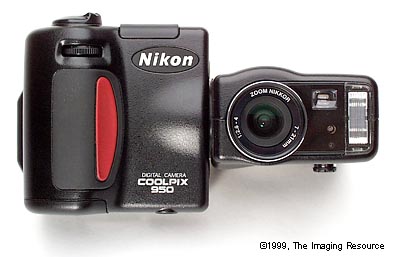

If you just *had* to have a 2-megapixel model, though, you could get the class-leading Nikon Coolpix 950 for a mere $1,199…

Looking back, it's literally incredible (in the fullest sense of the word) to see how far cameras and photography have come since our first newsletter issue, and megapixels-per-dollar are actually the smallest part of the story. It's a tale of rapid progress on multiple fronts, involving sensors, image-processing chips and optics. The technologies are so interrelated that it's hard to arrange things in neat categories, but here are what I see as some key developments along the way.

High-ISO performance

Early on, this was one of the biggest challenges: Digital cameras were great in full sunlight, while indoor or nighttime shooting was a whole other story. The Nikon Coolpix 950 topped out at ISO 320, but did surprisingly well at that level for a camera of its era; a 1.4-second shot of a typical urban night scene was noisy but usable.

It was probably slightly better at a pixel level than a Sony A7R IV at ISO 3200 and 3.2 seconds, but take into consideration the extremely low light level of the latter (1/228th the light level, or nearly 8 stops darker), not to mention the 61 megapixels in the image. While the A7R IV is a top-of-the-line mirrorless model, its body-only price (admittedly a slightly unfair basis for comparison) is only about 50% higher in constant dollars. ($1,200 in 1999 is about the same as $2,000 today; I picked the A7R IV for the comparison simply because I could quickly find a very low-light shot from it.)
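
The "nearly 8 stops" figure follows directly from the light ratio, since each stop is a factor of two in light. A quick check, where the function name `stops_between` is just an illustrative choice:

```python
import math

def stops_between(light_ratio):
    """Number of photographic stops for a given ratio of light levels (one stop = 2x)."""
    return math.log2(light_ratio)

print(f"{stops_between(228):.2f} stops")  # 7.83 stops
```

A full 8 stops would be a ratio of 256, so 1/228th of the light is just short of that.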

Today's amazing high-ISO performance is the result of advances in both sensor tech and image processing, with the latter arguably accounting for more of the improvement.

Autofocus

1999's cameras were painfully slow to focus, and had a hard time coping with subject movement, dim lighting, or especially a combination of the two. All of them used simple "contrast-detect" focusing, while almost all current cameras use some form of on-chip phase-detect, and can work to amazingly low light levels. Likewise, most have AF pixels spread across most of the sensor's surface, so they can focus on subjects almost anywhere in the frame.

I think it was Fujifilm who first brought on-sensor phase-detect AF to digital cameras, with their F300 EXR and Z800 EXR models in August of 2010. The technology slowly spread to other manufacturers, and today is nearly universal.

Autofocus is about a lot more than just the underlying technology, though; much of the magic has to do with how the camera uses the raw focus information to find and lock onto the subject. For a long while, traditional camera makers like Nikon and Canon ruled the roost, thanks to their decades-deep technology and huge scene databases, used to identify different focus scenarios and subject types. As time went on, though, other companies came up to speed and now battle on equal footing with the legacy giants.

Autofocus is endlessly complex, as it's not only about identifying the subject, but staying locked onto it as it moves around the frame, changes its distance, is momentarily obscured by foreground objects, or even briefly passes outside the frame and back in again. Recognizing objects is something we humans do literally without thinking, but it takes a lot of processing and cleverness to make a camera do so.

The first step in this direction was face-detection, which I think was again first brought to market by Fujifilm, in their S6500fd, launched in July 2006. Fujifilm was in a unique position early on, thanks to having used face-detection technology in their digital minilab systems, as an aid to exposure and color management. As with phase-detection AF, the technology quickly spread to other manufacturers, powered in large part by tech from the Irish-Israeli company FotoNation. (At one point, two-thirds of the world's digital cameras used FotoNation technology.)

Whether using older methods or modern AI-based techniques, the main challenge of AF is to "teach" the camera what different subject types look like. (For an interesting inside look, see my interview with Ogawa-san from Olympus, from the 2017 CP+ show. He talks about how Olympus identified 18 different scene/subject types, and then collected 10,000 - 20,000 individual shots for each type, to use in training their AF algorithms.)

As far as AF has come, I think it's still the area that has the furthest yet to go, and with the most ultimate benefit to photographers. Nowadays, we're seeing a lot of AI deep learning technology being applied, to vastly improve cameras' abilities to "understand" different subject types with increasing specificity and precision. (One of the first and still one of the most dramatic uses of deep learning for autofocus came in Olympus' E-M1X model, with its special focus modes that could recognize planes, trains, automobiles and motorcycles. You can read more about it in my interview with key members of the R&D team that developed it, from January of 2019.)

Multi-shot modes: Best Shots, panoramas, Twilight Mode, super-res, focus stacking, etc...

Then there's the whole category of what I'll call multi-shot modes, where the camera combines information from multiple images to produce a final result. The key technologies here are fast sensor readout, fast processing and plenty of memory to hold the images and intermediate results.

There are almost too many to mention, but for me personally, these have contributed more to the fun and usability of cameras than almost anything else. I'll mention just a few of my favorites…

Nikon's Best Shot Selector

AFAIK, this was the first multi-shot mode. It was deceptively simple in how it worked, but made a huge difference for handheld low-light photography. The camera would shoot 5 or 6 separate images in rapid succession, and then save only the sharpest one to the memory card. How did the camera know which was the sharpest? Nikon never revealed the secret, but it's not too hard to figure out: The more detail there is in an image, the harder it is to compress it in JPEG. The "sharpest" or least-blurred image was simply the one with the most detail, and thus the largest JPEG file size. So all the camera had to do was JPEG-compress all the images and choose the one that ended up the largest. It was a simple bodge, but made it so much easier to deal with camera shake in long exposures.
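
The "largest compressed file wins" idea is easy to try out. What follows is only a rough sketch of the heuristic described above, not Nikon's firmware (which was never published). It makes several assumptions: `zlib` from Python's standard library stands in for the JPEG encoder, the "scene" is a synthetic random texture, camera shake is mimicked by a horizontal box blur, and all function names are made up for the example.

```python
import random
import zlib

def box_blur_row(row, radius):
    """Horizontal box blur, a crude stand-in for motion blur from camera shake."""
    if radius == 0:
        return list(row)
    blurred = []
    for i in range(len(row)):
        window = row[max(0, i - radius):i + radius + 1]
        blurred.append(sum(window) // len(window))
    return blurred

def make_frame(scene, blur_radius):
    """One frame of the burst: the scene with a given amount of shake blur."""
    return [box_blur_row(row, blur_radius) for row in scene]

def compressed_size(frame):
    """Size in bytes after compression (zlib here, as an assumed proxy for JPEG)."""
    raw = bytes(value for row in frame for value in row)
    return len(zlib.compress(raw, 9))

def best_shot(frames):
    """Index of the frame that compresses worst, i.e. the one with the most detail."""
    return max(range(len(frames)), key=lambda i: compressed_size(frames[i]))

rng = random.Random(0)
scene = [[rng.randrange(256) for _ in range(64)] for _ in range(64)]
blur_radii = [4, 2, 0, 3, 6]          # hypothetical shake per frame; 0 = perfectly steady
burst = [make_frame(scene, r) for r in blur_radii]

winner = best_shot(burst)
print("kept frame", winner, "with blur radius", blur_radii[winner])
```

Blur smooths away fine detail, which makes the data more predictable and therefore smaller once compressed, so the steady frame should come out on top. A real camera would have JPEG-encoded each frame anyway, which is what makes the trick nearly free.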

Sony's "Handheld Twilight Mode"

This came later and was much more sophisticated, but had an even bigger impact on after-dark photography. Rather than a single, longer exposure, the camera would snap a series of shorter exposures, and then combine them into the final image. Easily said, but not so easily done: Because the camera was moving during the exposure, each image would be slightly offset from the others. Sony's engineers used the camera's powerful image processor to analyze and micro-align all the images with each other, at a pixel or sub-pixel level. The results were sharp photos at equivalent shutter speeds a good 5x slower than you could reasonably hand-hold normally.
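
To give a feel for the align-then-combine idea, here is a toy sketch. It is not Sony's algorithm: it assumes whole-pixel shifts only (no sub-pixel alignment, rotation or subject motion), wraps the image at its edges for simplicity, finds each offset by brute-force search, and uses a synthetic scene with made-up shake and noise values. All names are hypothetical.

```python
import random

def shift(frame, dx, dy):
    """Translate a frame by whole pixels, wrapping at the edges (a toy simplification)."""
    h, w = len(frame), len(frame[0])
    return [[frame[(y - dy) % h][(x - dx) % w] for x in range(w)] for y in range(h)]

def mismatch(a, b):
    """Sum of absolute differences between two frames."""
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))

def find_offset(reference, frame, max_shift=3):
    """Brute-force search for the shift that best re-aligns frame with reference."""
    offsets = [(dx, dy)
               for dx in range(-max_shift, max_shift + 1)
               for dy in range(-max_shift, max_shift + 1)]
    return min(offsets, key=lambda o: mismatch(reference, shift(frame, *o)))

def align_and_average(frames, max_shift=3):
    """Align every frame to the first one, then average them pixel by pixel."""
    reference = frames[0]
    aligned = [reference] + [shift(f, *find_offset(reference, f, max_shift))
                             for f in frames[1:]]
    h, w = len(reference), len(reference[0])
    return [[sum(f[y][x] for f in aligned) / len(aligned) for x in range(w)]
            for y in range(h)]

rng = random.Random(1)
scene = [[rng.uniform(0, 255) for _ in range(16)] for _ in range(16)]

def noisy_exposure(dx, dy, sigma=10.0):
    """A short, noisy exposure of the scene, displaced by hand shake."""
    return [[v + rng.gauss(0, sigma) for v in row] for row in shift(scene, dx, dy)]

def mean_error(frame):
    """Average per-pixel deviation from the noise-free scene."""
    return mismatch(frame, scene) / (16 * 16)

shake = [(0, 0), (2, -1), (-1, 3), (1, 1)]   # hypothetical hand-shake offsets
burst = [noisy_exposure(dx, dy) for dx, dy in shake]
stacked = align_and_average(burst)

print("single frame error:", round(mean_error(burst[0]), 2))
print("stacked error:     ", round(mean_error(stacked), 2))
```

Averaging four aligned frames should roughly halve the random noise, which is the payoff: each short exposure is sharp but noisy, and the stack recovers much of the quality of a longer exposure without the blur.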

Panorama modes

Early cameras had modes that would help you line up multiple shots so you could stitch them together into a panorama on the computer later, but the real breakthrough here again came from Sony, with their "Sweep Panorama" mode. Just hold the camera out, press and hold the shutter button and "sweep" the camera in an arc. The camera would shoot continuously, then align and stitch all the shots together into the final photo. It wasn't always pixel-perfect, and things like subject motion would obviously cause stitching errors, but it was and is an enormously fun function to have available. (I've probably used it as much for vertical shots of waterfalls as I have for conventional landscape-oriented panoramas.)

Focus Stacking

I've used this less, but it's life-changing for macro photography. The camera takes multiple shots while racking the focus, then combines all the separate exposures, taking just the most sharply-focused portions of each. Again, much easier said than done, since the scale of each shot can be different, due to focus breathing in the lens.
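
A bare-bones version of the "keep the sharpest part of each shot" step might look like the sketch below. It deliberately ignores the hard part mentioned above (rescaling frames to compensate for focus breathing) and assumes the frames are already perfectly registered. The sharpness measure, the blur model and every name here are illustrative assumptions, not any manufacturer's method.

```python
import random

def blur(frame, x_start, x_end, radius=2):
    """Box-blur columns x_start..x_end-1 of each row to mimic an out-of-focus region."""
    out = []
    for row in frame:
        new_row = list(row)
        for x in range(x_start, x_end):
            window = row[max(0, x - radius):x + radius + 1]
            new_row[x] = sum(window) / len(window)
        out.append(new_row)
    return out

def sharpness(row, x, reach=2):
    """Local contrast: summed absolute differences between neighbours near x."""
    lo, hi = max(1, x - reach), min(len(row) - 1, x + reach)
    return sum(abs(row[i] - row[i - 1]) for i in range(lo, hi + 1))

def focus_stack(frames):
    """For each pixel, copy the value from whichever frame is locally sharpest."""
    h, w = len(frames[0]), len(frames[0][0])
    result = []
    for y in range(h):
        out_row = []
        for x in range(w):
            best = max(frames, key=lambda f: sharpness(f[y], x))
            out_row.append(best[y][x])
        result.append(out_row)
    return result

rng = random.Random(2)
scene = [[rng.uniform(0, 255) for _ in range(32)] for _ in range(8)]
near_focus = blur(scene, 16, 32)   # left half sharp, right half soft
far_focus = blur(scene, 0, 16)     # right half sharp, left half soft
stacked = focus_stack([near_focus, far_focus])

def mean_error(frame):
    """Average per-pixel deviation from the fully sharp scene."""
    total = sum(abs(p - q) for ra, rb in zip(frame, scene) for p, q in zip(ra, rb))
    return total / (8 * 32)

print(round(mean_error(near_focus), 1), round(mean_error(far_focus), 1),
      round(mean_error(stacked), 1))
```

The stacked result should sit much closer to the all-sharp scene than either input frame does.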

Olympus' Live Composite mode

This is super-fun, and enables shots that would be difficult or impossible to get otherwise. Hold down the shutter button, and the camera will snap multiple photos, taking only the brightest parts of each to add to the image it's building. It's great for fireworks, but also super-handy for things like time exposures where you see car head/taillights blurred into streaks. It only occurred to me recently, but it should be great for taking photos of lightning bugs in the late twilight. It's just starting to be lightning bug season here in Georgia; maybe I'll go out shooting tonight!
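
"Taking only the brightest parts of each" amounts to a per-pixel maximum across frames, often called a lighten blend. Here is a minimal sketch of that idea, assuming that is how the mode behaves; the tiny frames, the brightness values and the function name are made up for illustration.

```python
def live_composite(frames):
    """Build an image by keeping, at each pixel, the brightest value seen so far."""
    composite = [list(row) for row in frames[0]]
    for frame in frames[1:]:
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                if value > composite[y][x]:
                    composite[y][x] = value
    return composite

# Five tiny 3x5 frames: a dark background (10) with one bright light (250)
# moving left to right along the middle row, like a passing taillight.
frames = []
for step in range(5):
    frame = [[10] * 5 for _ in range(3)]
    frame[1][step] = 250
    frames.append(frame)

composite = live_composite(frames)
average_middle = [sum(f[1][x] for f in frames) / len(frames) for x in range(5)]

print(composite[1])     # [250, 250, 250, 250, 250]
print(average_middle)   # [58.0, 58.0, 58.0, 58.0, 58.0]
```

The composite keeps the light trail at full brightness while the background stays dark, whereas simply averaging the frames dilutes the streak. That is why the approach suits fireworks and light trails.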

I'm sure I've left out a lot of your own multi-shot favorites, but my main point is that there are entire types of photography that weren't practical or even possible without the kind of image processing power tucked inside our cameras.

Optics(!)

I gave this one an extra exclamation point, because modern lenses are so much better than anything available even as recently as 10 years ago. Even more than with cameras themselves, a whole range of technological advancements have combined to make the latest lenses light-years (ahem ;-) ahead of old-line classics.

The merging of image processing and optical design

I think it was Olympus, back with their original Four Thirds camera systems, who first took advantage of this. The eureka insight was that you could use the camera's image processor to correct for some forms of optical distortion and vignetting or shading, letting optical designers push the boundaries further in other aspects of lens design. Lenses could become faster, lighter and more distortion-free than before, often at a lower cost as well. This has become an essential factor in compact and cell phone cameras, but most people are unaware of it because it all happens "behind the curtain".
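
As a simplified illustration of what such in-camera corrections involve, the sketch below undoes a known brightness falloff and computes where to sample the raw image to undo radial distortion. The quadratic falloff model, the single-coefficient distortion model and the values `strength=0.4` and `k1=-0.1` are all assumptions chosen for the example; real lens profiles are more elaborate and are not public in this form.

```python
def falloff(x, y, width, height, strength):
    """Relative brightness at (x, y) under a simple quadratic vignetting model."""
    cx, cy = (width - 1) / 2, (height - 1) / 2
    r2 = ((x - cx) ** 2 + (y - cy) ** 2) / (cx ** 2 + cy ** 2)  # 0 at centre, 1 at corners
    return 1.0 - strength * r2

def correct_shading(image, strength):
    """Undo vignetting by dividing each pixel by the lens's known falloff."""
    h, w = len(image), len(image[0])
    return [[image[y][x] / falloff(x, y, w, h, strength) for x in range(w)]
            for y in range(h)]

def source_position(xu, yu, k1):
    """Where to sample the distorted image for an undistorted point.

    Coordinates are centre-origin and normalised; k1 < 0 models barrel distortion.
    """
    scale = 1.0 + k1 * (xu * xu + yu * yu)
    return xu * scale, yu * scale

# A flat grey wall (value 200) as the lens would record it, with darkened corners.
width, height, strength = 9, 7, 0.4
captured = [[200 * falloff(x, y, width, height, strength) for x in range(width)]
            for y in range(height)]
corrected = correct_shading(captured, strength)

print(round(captured[0][0]), round(corrected[0][0]))   # 120 200
print(source_position(1.0, 0.0, k1=-0.1))
```

Because the camera knows which lens is mounted, it can apply the matching profile automatically, which is what frees the optical designer to leave some distortion and shading uncorrected in the glass.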

Computer ray-tracing and design optimization

It takes an enormous amount of computation to accurately analyze how well a given lens design works. The basic process is called ray-tracing, because it consists of literally tracing the path of light rays through all the elements of a lens. I remember talking with Michihiro Yamaki, the founder of Sigma Corporation and father of current CEO Kazuto Yamaki, about lens design in the early days. Back then, ray tracing was done by rooms full of people using mechanical calculators. A person at the front of the room would call out the steps so everyone would stay in sync with each other, passing intermediate results back and forth among them.
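
To make "tracing a ray through the elements" concrete, here is a sketch of the simplest textbook version: a paraxial (small-angle) trace, where each surface bends the ray according to its optical power and the ray then travels in a straight line to the next surface. Real lens design traces huge numbers of exact, non-paraxial rays, so this is only the basic step. The example lens (50 mm radii, refractive index 1.5) is hypothetical.

```python
def trace_paraxial(surfaces, height=1.0, angle=0.0):
    """Trace one paraxial ray through a list of spherical surfaces.

    Each surface is (radius, index_after, distance_to_next_surface).
    A radius is positive when its centre of curvature lies to the right.
    Returns the ray's final height and angle.
    """
    n = 1.0                     # the ray starts in air
    y, u = height, angle
    for radius, n_after, gap in surfaces:
        power = (n_after - n) / radius
        u = (n * u - y * power) / n_after   # refraction at the surface
        y = y + u * gap                     # straight-line travel to the next surface
        n = n_after
    return y, u

def focal_length(surfaces):
    """Effective focal length, from a ray entering parallel to the axis at height 1."""
    _, u = trace_paraxial(surfaces, height=1.0, angle=0.0)
    return -1.0 / u

# A symmetric biconvex lens, first idealised as infinitely thin, then 5 mm thick.
thin_lens = [(50.0, 1.5, 0.0), (-50.0, 1.0, 0.0)]
thick_lens = [(50.0, 1.5, 5.0), (-50.0, 1.0, 0.0)]

print(round(focal_length(thin_lens), 3))    # 50.0
print(round(focal_length(thick_lens), 3))   # 50.847
```

Each surface costs only a few multiplications and divisions, but a full analysis repeats the exact version of this for many rays, many field angles and many wavelengths, and again after every design tweak. That is why it once took rooms full of people.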

As NASA-level computing shrank down to desktop size and then leapfrogged from there, optical engineers could work with much more complex designs, and try out many more combinations of tweaks and adjustments than ever before. Few people realize the enormous impact computing power had on lens designs; modern designs would have simply been impossible 20 years ago.

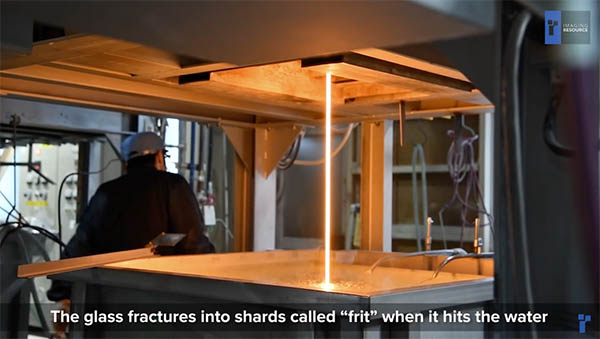

Optical glass

This is another area that's seen huge advances, but one that most people are completely unaware of. An incredible amount of technology and black magic derived from long experience goes into making optical glass. (See my Glass for Geeks article from 2018 about Nikon's Hikari glass factory for a deep behind-the-scenes tour.)

Progress in glass formulation and manufacturing hasn't been as rapid as in some other areas, but without advances in high-index and anomalous-dispersion (ED, Super-ED, FLD, etc) glass, many modern lens designs would again be impossible.

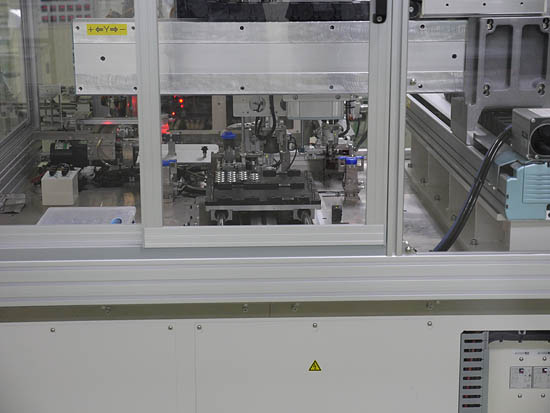

Aspheric molding

The only way to correct some types of optical aberration is to use lens surfaces that aren't portions of spheres. Aspheric surfaces are incredibly difficult to make using conventional grinding and polishing techniques. As a result, only a few very expensive lenses could use aspheric elements. A while back, though, manufacturers figured out how to mold glass to make aspheric lens elements. It's still a tricky process, involving ultra-precise manipulation of temperature and pressure to press pre-formed glass blanks into highly-polished tungsten molds. There are limitations on how large a lens element you can make with the technique, how thick or thin it can be, and even what types of glass you can use, but aspheric elements are now so affordable that most wide-angle and zoom lenses have at least one or two in them.

Optical coatings

This is the final and perhaps least-recognized factor that's led to the amazing lens designs we have today. We tend to think of anti-reflection coatings as just reducing obvious flare when we have the sun or other bright light source in the frame, but their impact goes way beyond that.

If you compare high-end lens designs from today with ones from years ago, the first thing that will leap out at you is how many elements modern lenses have in them. More elements mean the designers can optimize the designs more, doing a better job of avoiding distortion, chromatic aberration and defects like coma, while increasing resolving power and keeping the lens sharp across the entire frame. Increased computing power helped make such designs possible, but reducing internal reflections between all the elements was even more important. If you tried to make a modern lens design with 20-year-old coating technology, the result would be a low-contrast, flare-ridden foggy mess. Even 10 years ago, modern lenses like the Olympus 150-400mm F4.5 TC1.25X IS PRO (with its staggering 28 elements in 18 groups) wouldn't have remotely been possible.

What does the future hold?

We've come a long, long way, but technology isn't standing still. What's next? Where will we see the next breakthrough changes happening?

Sensors: Not so much

Advances in sensor technology have brought us huge gains in resolution, shooting speed and high-ISO performance since 1999, but at this point, we've ridden that train about as far as it's going to take us. We're right up against physical limits in just about every aspect of sensor performance. Advances in semiconductor processing won't bring anything in the way of higher ISO performance, because the fundamental limitation there is simply down to how many photons fall on a given area of silicon. Even entirely new sensor technologies like quantum dots would give us at most another stop of ISO, and then only at some wavelengths.
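
The photon-count limit can be put in numbers. Light arrives as discrete photons, and with N photons detected the unavoidable "shot noise" is about the square root of N, so the best possible signal-to-noise ratio is also the square root of N. The sketch below illustrates that relationship; the photon counts and the quantum-efficiency figures (the fraction of photons the sensor actually registers) are hypothetical round numbers, not measurements of any real sensor.

```python
import math

def shot_noise_snr(incident_photons, quantum_efficiency=0.5):
    """Best-case signal-to-noise ratio when photon shot noise is the only noise source."""
    detected = incident_photons * quantum_efficiency
    return math.sqrt(detected)   # signal N divided by noise sqrt(N)

# Each stop of lost light halves the photons and costs a factor of sqrt(2) in SNR.
for stops_down in range(4):
    photons = 10_000 / 2 ** stops_down
    print(stops_down, "stops down:", round(shot_noise_snr(photons), 1))

# Doubling quantum efficiency (a hypothetical 50% -> 100%) is worth exactly one stop.
print(shot_noise_snr(10_000, 1.0) / shot_noise_snr(10_000, 0.5))
```

Under these assumptions, a sensor that already registers around half the incoming photons has at most one stop left to gain, no matter how the silicon improves. The rest has to come from collecting more light or from smarter processing.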

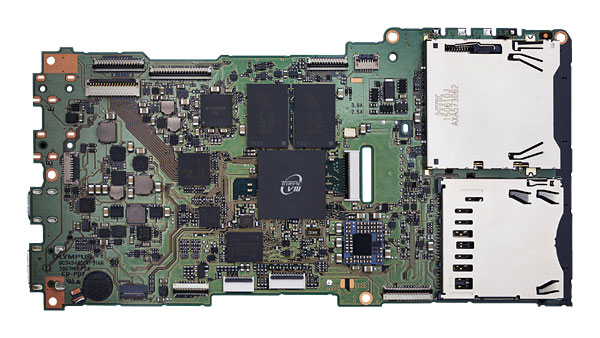

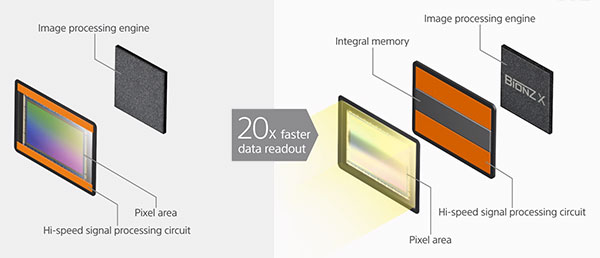

BUT, more processing directly coupled to the sensor

While basic light-gathering ability isn't going to improve much, I see a lot of promise for large amounts of processing power happening on the sensor itself, or at least in the same package. The chip-stacking technology we first saw in cameras like the Sony A9 is spreading outside Sony's semiconductor fabs, with others like TowerJazz and Samsung developing similar abilities. There's a lot of potential here, not just for capture speed, but for running the low-level parts of autofocus algorithms, and potentially higher-level parts as well. Putting image processing and memory circuitry right next to the sensor pixels dramatically increases processing speed while reducing power consumption. Stay tuned; I think we're going to see some impressive developments here over the next 3-5 years.

Autofocus: Significant improvements yet to come

As good as modern AF systems are, there's still plenty of room to improve. Increasingly sophisticated AI and deep-learning-based technologies could give us pretty significant increases in AF ability, in both subject identification and tracking.

Computational photography: Lots of possible gains, especially for traditional, standalone cameras

Computational photography is a catchall term for any situation where computer processing plays a major role in creating the final image. We're just beginning to see the impact of this, with smartphones leading the way. I just got an iPhone 12 Pro Max a few weeks back and have been deeply impressed by how well it does in previous no-go areas like extreme low light, and how good it is at figuring out the correct white balance, especially in situations with mixed lighting. Most of us are likely also familiar with the artificially shallow depth of field of cell cameras' "portrait modes," overcoming the limitations of their tiny sensors and short focal-length lenses. I expect to see more of this tech come to standalone cameras, with similar improvements in image quality and color. (Especially white balance - based on what I've seen, conventional camera manufacturers need to look long and hard at what Apple's doing in this area. It's really another level from typical standalone camera performance.)

Multi-shot and high capture rates playing new roles

What I'm thinking about here is really more of a particular application of computational photography than something entirely separate. I think there are lots of opportunities for improving image quality and extending the whole photographic experience by combining sophisticated AI with video-rate image capture. A full discussion would take another whole article as long as this one, but there's an enormous amount of visual information streaming by in the real world that sufficiently intelligent cameras could do a lot with. I think we're going to see some surprising developments in this whole area, but it might take another 5-10 years for them to fully flesh out.

5G? Who knows…

This will have more bearing on cell cameras than standalone models, at least in the near term, and my crystal ball is a little cloudy as to what the actual implications will be. But increasing bandwidth and cutting latency between cameras and the cloud is bound to lead to new applications and capabilities.

Bottom line - NEVER A BETTER TIME TO BE A PHOTOGRAPHER!

That pretty well sums it up. It's incredible to me to look back over the years and marvel at just how far we've come. I started my photo hobby with a Brownie box camera and darkroom in my parents' basement; now I have a pocketable or nearly-pocketable camera (depending on which one I have with me on any given day) with features and functions that would have been pure science fiction not long ago.

It's truly amazing; I feel fortunate to have lived at this particular time in history to see it all happen - and blessed to have been a part of such a wonderful industry, populated by such a universally nice group of people!

How about you? How many of you out there also remember those early days? Are there any of you who've been with us since issue 1? (If you are new around these parts, please consider subscribing to the IR Newsletter!)