Panasonic Q&A July 2022: What’s up with L-Mount, how DR Boost works, autofocus, lenses & more!

posted Thursday, September 8, 2022 at 9:11 PM EST

This is the second article I’m posting from meetings I had with photo-business executives and engineers when I was in Japan during the second half of July, 2022. (My previous article covered my interview with Fujifilm in Omiya, Japan.) I was so happy to be able to return to Japan after a forced absence of more than 2½ years due to COVID restrictions. It's no secret how much I love Japan, its people, culture and cuisine, but being able to connect with my friends in the industry there is even better. So it was with great pleasure that I boarded a Tokaido Shinkansen for the relaxing ~2½-hour ride to Osaka to visit Panasonic headquarters in Kadoma City.

After struggling some in their early going, Panasonic seems to have found a healthy and profitable niche in the market, leveraging the video-recording chops they perfected in their highly popular VariCam line of professional video cameras. The VariCams have become industry-standard workhorses, competing in a market where $40-50,000 camera bodies are commonplace. The sensor and processor technology know-how developed in that lofty arena has given them a leg up over most other camera makers when it comes to cramming advanced video capability into compact mirrorless bodies.

Panasonic partnered with Olympus to define and launch the compact Four Thirds/Micro Four Thirds standard, developing a broad line of cameras over the last decade-plus. More recently, they expanded into full-frame cameras, partnering this time with Leica and SIGMA to create the L-Mount Alliance. Through this alliance, Panasonic full-frame shooters can access an incredibly broad range of high-quality lenses, manufactured by all three companies.

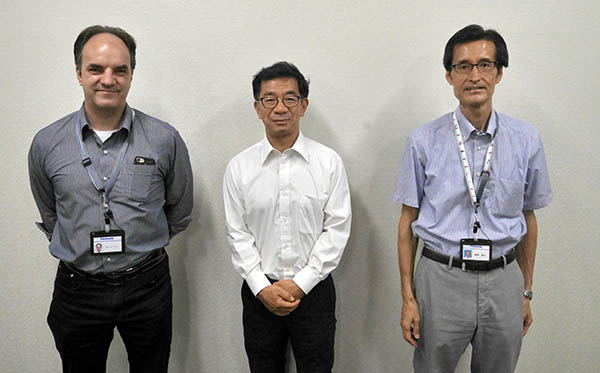

The following four executives generously set aside nearly two hours in their busy schedules to meet with me:

Yosuke Yamane, Executive Vice President, Panasonic Entertainment & Communication Co., Ltd. Director, Imaging Business Unit (Center)

Marc Pistoll, Manager, Marketing Department (Left)

Akiko Fukui, Chief of Marketing Planning Section (Not Pictured)

Takayuki Takabayashi, Marketing Planning Section (Right)

It was a wide-ranging discussion, covering everything from business conditions to details of their technology, so I've included subheads below to help you quickly skim through to find the parts you're most interested in.

As usual, some parts of what's below are direct transcripts of our conversation, while I've summarized other areas where there was a lot of back-and-forth that the reader might have found confusing.

In order to make our time together more efficient, I provided a list of questions to Panasonic ahead of time, allowing Yamane-san to collect and refine answers from the engineering team. (He was apparently pretty tough on the engineers, in some cases going back to them two or three times to achieve the level of detail and clarity he wanted. Many thanks to him and all those who worked so hard to provide useful answers to my questions!)

Below, you'll find the answers provided ahead of time indented and marked "Panasonic's prepared answer", usually followed by Q&A as I delved deeper into the topic. Explanatory text relating to the question is set in italics, while comments and analysis that I've added after the fact are set in italics and indented.

With that as background, let's dive into the conversation!

The L-mount alliance, and relationships with Leica and SIGMA

RDE: I think the biggest news in the L-mount world is the recently announced L² alliance between Panasonic and Leica. The press release talked about joint development, joint investment and joint marketing, and Kusumi-san (CEO of Panasonic Holdings) said “This is a major turning point that will undoubtedly lead to the development of the imaging business and especially exciting as Panasonic Holdings.” What will this mean on a practical basis, especially in terms of joint development?

Panasonic’s prepared answer

Thank you for taking this as the biggest news!

This is a collaboration between Leica and Panasonic. Until now, it was a relationship in which each company provided its technologies to the other and both sides used what the other had. From now on, it will change to a form of collaboration in which we create new things together.

We will invest together on key devices, introduce technology created by joint development of products [for both companies], and expand joint marketing using the same "L²" logo. In this way, we will strengthen the strategic collaboration that has greatly evolved from the previous collaboration.

Through this collaboration, we aim to enhance the competitiveness of each other's businesses and products for the future.

In the future, we will work on where to generate synergies, such as image processing engines, image sensors, artificial intelligence (AI) for image correction, and batteries. With cameras, the evolution of technology is directly linked to customer value, so it is always necessary to invest in the latest key devices. We believe that investing in key devices jointly with Leica will also lead to more efficient investment on both sides.

RDE: As a follow-up on that, where does this leave Sigma? For instance, you mentioned AI for image correction. Will that mean that a Sigma lens won’t be able to create as good a final image as one from Panasonic or Leica? (As an example, I’m thinking of the case of Micro Four Thirds, where Olympus’s Hybrid IS doesn’t work as well with your lenses, and the same for your lenses on Olympus bodies, or that Panasonic’s DFD doesn’t work as well with Olympus lenses.)

Panasonic: It’s a comprehensive agreement between Panasonic and Leica to which both companies will contribute, so we’re going to work together. This agreement won’t include Sigma, but Sigma is a very important partner in the L-mount alliance, so among the three of us, we will all work to improve the total quality of the L-mount together. The L-mount partner alliance won’t be changed; we just have an additional agreement between Panasonic and Leica.

RDE: So Panasonic and Leica cameras may be able to do more sophisticated processing than they can now, but they’ll be able to apply that same processing when shooting with Sigma lenses?

Panasonic: The contract between Panasonic and Leica is a contract to generate synergy between the two companies. The partnership with Sigma, this is the mount alliance [through which] we try to develop the L-mount product itself. So that’s the difference.

Panasonic2: Prior to the contract agreement with Leica, Yamane-san talked with Yamaki-san (CEO of SIGMA) and they agreed to jointly improve the L-mount. So the L-mount alliance will not change.

RDE: Ah, that’s a good thing for users to know, that there’s a personal relationship and there are direct conversations between Yamane-san and Yamaki-san.

In side comments, Yamane-san told me that Yamaki-san is actually a longtime personal friend of his, even outside of the business dealings they have with each other. More than any formal statements, this made me confident that we won’t see the sort of divergence we saw within the Micro Four Thirds alliance over the years. By the same token, even prior to this most recent announcement, Leica and Panasonic have been partners in the camera and lens space for many years, going back to the days of digicams. Overall, the L-mount alliance strikes me as one of the most robust I’ve seen in my years in the photo industry.

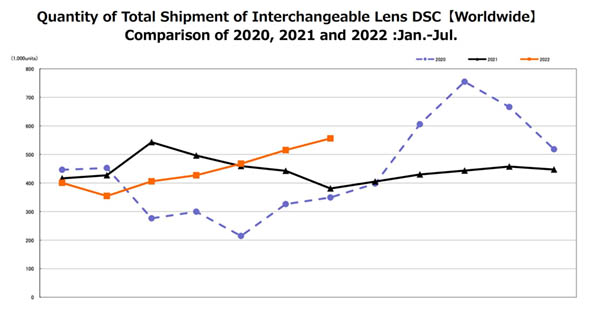

Panasonic is doing very well these days(!)

RDE: It seems like Panasonic is doing very well these days: I saw a report saying that Panasonic was the only major camera manufacturer that increased its sales in the UK from 2021 to 2022. Is that representative of the rest of the world? What’s the key to your success, and how did you turn things around from where you were several years ago?

Panasonic’s prepared answer

Our imaging business in fiscal year 2021 achieved double-digit growth and profitability.

We focused on the creators as the core target customer, and thoroughly created products that meet the needs of creators.

We also focused on marketing to our loyal customers, to expand LUMIX’s base of enthusiastic fans, and also let them communicate with us online about how much they love LUMIX.

We have steadily promoted our activities to expand our fan base globally. As a result, the ratio of high-value-added mirrorless cameras to other models increased, leading to higher sales and profits.

In particular, the GH6, which was introduced in the 4th quarter of FY2021, delivers images with a sense of three-dimensionality and depth unlike previous Micro Four Thirds cameras, and it has a slow-motion movie capability that gives a feeling of floating while still maintaining high definition, with modes such as 4K120p, FHD240p, 300p, etc. It has been very positively evaluated by creators, and sales are good.

However, the needs of creators are constantly changing. We want to develop future products while constantly thinking about what the creator wants for the future.

RDE: Double digit growth in 2021 is very impressive(!) This wasn’t part of my original questions, but how did 2020 compare to 2019?

Panasonic: We had a lot of damage [to our business volume] from COVID in 2020, but 2021 recovered significantly. This year, we have the possibility to go back to the same level as 2019. So our business is now expanding, and we hope we'll soon be back to the same level as 2019.

Panasonic2: This is because the proportion of business that’s full-frame is now increasing. That’s why our business is growing so well.

RDE: Actually, I remember Yamane-san telling me in a previous visit that it was his plan from the beginning to make full-frame cameras, and that Micro Four Thirds was a step towards that goal. So now you’re seeing the fruition of that plan.

Panasonic: Yes, our sales for the first half of this year are actually at the same level as half of 2019. If we can maintain this momentum, we think we’ll be able to do even better.

Even coming off of a down year, double-digit growth in 2021 was pretty impressive. I heard the term “creators” many times during our conversation; Panasonic has clearly identified creators as being a key market for them. This makes a lot of sense, given how incredibly strong they are when it comes to video recording technology. Their high-end VariCam cameras have long been industry standards among professional videographers, and they’ve applied a lot of that tech and know-how to their consumer-oriented S and GH camera lines.

The “creator” market is enormous, and an obvious area for Panasonic to focus on. I knew there were a *lot* of people making videos on YouTube, but had no idea of just how massive that market is until I looked up some stats while writing this article. I didn’t do any deep research, but the stats in this article (https://influencermarketinghub.com/creator-economy-stats/) blew my mind: Upwards of 50 million people consider themselves to be online creators, with 3.3 million of those viewing themselves as professionals. While the vast majority of YouTube’s 37.5 million channels don’t make enough money to reach the US poverty line of ~$12,000 annually, globally, 2 million creators earn six-figure incomes from their craft. Given all that, there are a lot of people looking for affordable cameras like Panasonic’s GH series that can produce high-quality video.

Video is great and all, but what about still photographers?

RDE: You’re extremely strong in the video market, and that appears to be your main focus these days. While cameras like the GH5 II and GH6 are also excellent still cameras, their primary focus seems to be video. Actually, it could be said that all of the cameras you’ve announced in the past two years are video-focused. Given your success with video, it makes sense that you’d focus more strongly on that area, but what can you tell me about where still photography fits in your future plans? Does it still have a place?

Panasonic’s prepared answer

We position hybrid creators, who create discerning works for both still images and videos, as important customers. Therefore, we have taken on the challenge of evolving to be competitive not only in video but also in still images.

Especially when it comes to picture making, we have tried to evolve photo quality by listening to feedback from photographers all over the world, asking for things such as lively colors, three-dimensionality, depth and beautiful bokeh.

In the future, through the joint development with Leica, we will take advantage of Leica’s picture making technology and philosophy that they have cultivated over their long history.

So we will support not only video creators but also hybrid creators who impress people with both still images and videos.

RDE: You mentioned taking advantage of Leica’s picture-making technology and philosophy. Are you referring to the sort of “Leica look” in still imagery, meaning their color management, tonality, etc., that contribute to the general look of a Leica image? Is that part of how the L² collaboration will help with still images?

Panasonic: When it comes to still pictures, we have a particular picture-making philosophy with Lumix. This will not be changed. However we will learn lots of things from the picture-making “mind” of Leica, and a lot of specific know-how to make impressive pictures. This is the particular know-how of Leica.

RDE: So it might involve things like color management or tonal rendering, things like that?

Panasonic: We have started the discussion about joint development and [as we continue that discussion] we will specify what will be improved.

Panasonic was clear that still-image quality and capability won’t suffer as a result of their focus on video, but it’s also clear that they see a future in which, at the very least, their users are interested in both still and video capture rather than just one or the other. When it comes to the Leica relationship, the specifics of what that means (or at least what they’re willing to talk about at this point) seem a little vague. On the one hand, they clearly said that they see making “impressive pictures” as the core competency of Leica, but at the same time they pushed back against my thinking that this would have a lot to do with the “Leica look” that Leica’s fans are so enamored of. Whatever the final direction, it’s obviously very early days in the relationship, with detailed discussions of what they might do together just beginning.

Is PDAF coming to LUMIX cameras in the future? Other AF notes…

RDE: Depth from Defocus (DFD) has been Panasonic’s approach for autofocus for a number of years now. Its performance has improved greatly over that time, but still lags behind phase-detect AF for fast-moving subjects. (That said, video focus pulls with static subjects are very smooth and sure-footed.) Is there a possibility that you might move to PDAF at some point in the future, for cameras aimed at capturing fast action?

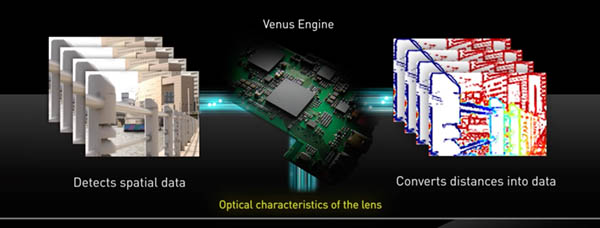

Panasonic’s prepared answer

The GH6 does not use the PD system, but the new engine's AF technology has evolved from the previous model. In particular, we have thoroughly improved the performance of object recognition algorithms. The new-generation engine significantly speeds up the detection of subjects, and advances in automatic recognition algorithms have improved the accuracy of recognition systems such as face and eye recognition, head recognition (which continues to detect people facing backward), and human body recognition (which captures even distant and small subjects). In addition, the camera automatically detects the shooting intent based on the angle of view and keeps the subject in focus while preventing the focus from slipping to the background. Of course, the GH6’s contrast and DFD performance for focusing speed and accuracy has been greatly improved by significantly enhancing the processing power of the new engine.

It is a fact that PDAF is one of the elements to improve the AF performance of LUMIX for the future.

However, PDAF alone does not improve AF performance, and factors other than PDAF are required to determine "which to focus on". With PDAF as an AF detection system in mind, we will continue to make every effort to improve the total performance of AF.

RDE: That’s interesting: When you say that the camera figures out the subject based on the angle to the camera, does that mean, for instance, human subjects that might be partially turned, or in some other sort of orientation? Could you explain a bit more about this?

There was quite a bit of back-and-forth at this point that I’ll leave out for the sake of clarity. It seems that their comment about shooting intent has to do with the sorts of images they’ll use to train their AI algorithms. We’re all familiar with the idea of training cameras to recognize people, faces, eyes, etc., but it sounds to me like Panasonic is looking to go a little deeper, using more complex scenes. I’m not 100% sure of this, but it sounded like this could extend to situations with more than one person in the frame.

RDE: I want to make sure I understand this clearly: Yamane-san said that “it is a fact that PDAF is one of the elements to improve the AF performance of LUMIX in the future.” Does that mean that we’ll be seeing PDAF in future LUMIX products?

Panasonic: We are positively studying PDAF for future products. We know that PDAF will enhance the total AF quality, so that’s why we’re actively studying it. We haven’t decided yet though.

RDE: So there’s not a specific product decision (or certainly one that you can talk about), but you’re conscious of that as something that would improve performance, and are studying it closely.

At this point, Yamane-san gestured with his hands, indicating a range for “Yes” to “No”, then said “Our position is here” with his hand more towards “Yes” than not - I joked that it looked like 82%, to much laughter :-)

This is significant, as Depth from Defocus has been a limiting factor in LUMIX performance for some time now. It’s gotten considerably better over the years, but it still doesn’t perform to the level that PDAF systems do. It would be a big step for Panasonic to move to PDAF, as it would mean entirely new sensor designs and processor architectures, and there may also be costs associated with licensing the intellectual property involved. While they’re obviously cautious about saying too much in an interview like this, I think it’s very significant that Yamane-san would go so far as to indicate a ~80% likelihood of its adoption. I’ve heard the phrase “closely studying” from many Japanese companies over the years, and it’s often hard to tell whether that means one engineer is looking hard at it every other Tuesday, or that there’s a team actively designing a product. My interpretation here is that this is much more than lip service, and that there is in fact a high probability that we’ll see PDAF in some future LUMIX cameras. The big question of course is when…

What about other subject types for AIAF?

RDE: We’re seeing AF systems from several manufacturers with special modes for identifying and tracking specific subject types (cars, motorcycles, birds, pets, etc.). Is Panasonic working on similar technology, or does it seem less important for your target markets than other features?

Panasonic’s prepared answer

Of course, we are also working on various possibilities for the future, but I feel that subject recognition based on deep learning is a field that becomes more profound the more we work on it. Technically, it may be all about learning an infinite number of subject patterns, but the hit rate will change depending on various exposure conditions and subject distance.

We want to provide cameras that can be used in the field of professional shooting, so rather than unnecessarily increasing the number of subject patterns, first of all the accuracy of existing subject patterns (such as people and animals), meaning the recognition performance, should be greatly improved. We are working on our AF development with an emphasis on what we can do to improve that.

By doing so, we believe that we will be able to deliver useful things to our customers, not only for still image shooting, but also for videos in which the effects of misjudgment (of the subject) are [very apparent in the footage].

RDE: Ah, that’s interesting and makes sense: You’re focused on the professional market and professional shooting, so rather than adding a lot of random subject types, you want to make your AF work better and better with the kind of subjects professionals actually shoot.

Panasonic: The plan is to improve step by step. The first step is to improve the accuracy and the speed when focusing on typical subjects that professionals want to focus on. Then if we make progress on that first step, we’ll work on extending the system to other subjects.

Panasonic2: [The first priority is] to raise the general accuracy as much as possible, that’s Yamane-san’s way of thinking. Then we will think of very specific additional subjects that we can add.

RDE: Your full-frame line in particular is catering to professional commercial photographers, and they’re not taking photos of birds, trains, motorsports; it’s more humans.

Panasonic: Exactly.

This was very interesting to me, as an example of a trend I’m seeing more and more in the industry in general. Rather than trying to make “generalist” cameras that are all things to all people, companies are identifying specific market segments where they have particular strengths or where they have a particular customer base and focusing on them. In the case of Panasonic, as we saw earlier, video is a strong core competency and a market they’ve done very well in, so they’re focusing a lot of their attention on video- and hybrid-shooters. On the still photography side of the coin, though, they recognize that the biggest customer base for their full-frame cameras is commercial photographers, who are shooting either static subjects or humans. So when it comes to AI autofocus, they’re aiming for best-in-class performance with human subjects before they consider expanding to other subject types. (Of course, as Yamane-san also noted, AF errors are starkly obvious when they occur during video recording, so their work on improving the AF systems’ discernment of shooting intent with human subjects applies to video as well.)

An affordable wide-angle zoom is coming soon for S5 users

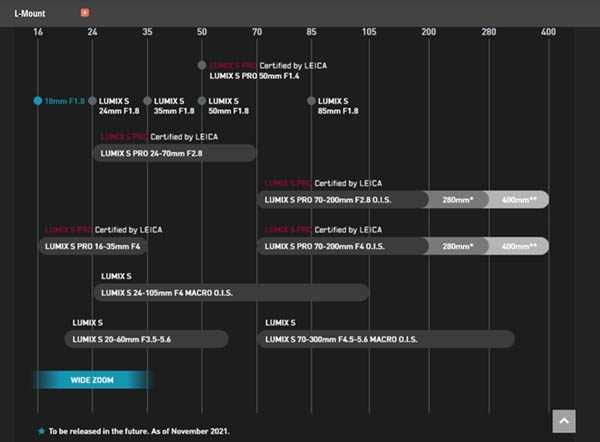

RDE: You’ve done an excellent job of building out your L-mount S-series lens line, and have delivered almost all of the lenses shown on your lens roadmap from last November, with the exception of the fast/wide zoom. Can you share anything about what’s coming from here? When will the fast/wide zoom arrive?

Panasonic’s prepared answer

Sales of the S5, which was launched in 2020, are steadily increasing. Under such circumstances, we are strongly focusing on interchangeable lenses that can be used by hybrid creators who are targeting both still images and video with the S5.

Specifically, we have developed a kit lens of 20-60 mm, followed by a 70-300 mm, and the F1.8 single focal length series.

And now, for the next model, we are developing a wide-angle zoom in the popular price range. With this lens, the system lineup centered on S5 will be completed.

Of course, we are also considering a large-aperture wide-angle zoom, and plan to launch it at the appropriate time.

RDE: Ah, this makes sense to me, that you were concentrating on lenses for use with the S5. With that done, you’re going to go back to making higher-end lenses again.

Panasonic: Yes, as we mentioned, you can expect this wide-angle prime lens soon.

See the next section for more on this…

RDE: Have you set any time frame for when the affordable wide-angle zoom might come?

Soon, but there’s a wide-angle prime coming first

How about the f/1.8 prime lens lineup, are we done there now?

RDE: You’ve built a beautiful line of f/1.8 lenses, ranging from 24mm to 85mm. Will we see at least one more model on the wide end, perhaps a 14 or 16mm?

Panasonic’s prepared answer

Thank you for your comment "beautiful line".

The F1.8 single prime series is a unique concept where function, performance, operability and size are all unified. This new concept has been highly praised by many creators. Specifically, we received feedback saying that the efficiency of workflows for creating works, from shooting to editing, was greatly improved with this series. We are very pleased to receive the response that we envisioned at the concept examination stage.

This series is being developed one by one to cover the focal length range used by creators. Specifically, we are now developing an ultra-wide-angle 18mm lens. While most wide-angle primes have a focal length of 20 mm as an industry trend, we will aim to further expand the expression of creators by creating a wider angle 18 mm within the concept of this series. After 18mm, we are considering further ultra-wide-angle lenses and medium-telephoto lenses as a necessary lineup for creators, so please look forward to those!

RDE: What do you mean when you say that you received feedback that the efficiency of workflows was improved? Is it that they have a very wide aperture, so they do a better job of separating the subjects from the background? I don’t understand that part…

Summarizing the reply here, what they’re saying is that the “look” (they used the word “taste” or “flavor”) of images shot with the various f/1.8 primes is very similar. This means that images and footage shot with different focal lengths can be used together without worrying that the bokeh (for instance) would suddenly look different between scenes shot with one focal length vs another.

RDE: Ah, it’s interesting that you decided to offer shooters a wider alternative to the common 20mm wide angle focal length. So next up after that will be at least one more ultrawide lens and a medium tele. Will those also be in the f/1.8 line?

Panasonic: No, not necessarily.

RDE: Is there any time frame for the 18mm? Has it appeared on a roadmap yet?

Panasonic: The 18mm is coming very soon.

They said that the 18mm will come first, and the affordable wide-angle zoom mentioned above will come a bit later. As it happens, the 18mm was announced shortly before I published this article.

What are the most significant advances that led to your most recent lens designs?

RDE: I’m impressed by how much lens technology keeps evolving; even things as basic as optical glass continue to see innovation. Looking at your most recent designs, I see lots of ED, UED, UHR glass, not to mention a lot of large aspherics, aspherics made of ED glass and extreme aspheric profiles. (That is, aspherics with big differences in thickness from the edge to the center.) What are the most significant of these advances, and how did various ones enable particular Panasonic lens designs?

Panasonic’s prepared answer

As you say, the capability of lens design has dramatically improved due to the evolution of a wide variety of glass materials and lens processing technology. However, it can be said that this is also the case for other companies.

So, what makes LUMIX lenses different from other companies?

It is our product planning approach of “thorough customer perspective”, that is not bound by the “common sense” of the industry.

LUMIX has a short history as a camera manufacturer compared to other companies, which is why it can be said that it is possible for us to create lenses based on "crazy" ideas that are not bound by the common sense of the industry. The various new glass materials support the realization of such crazy lenses, as well as our innovation of lens processing technology.

Furthermore, it is not just about creating lenses with unprecedented specifications, but what is “crazy” is that the specification decisions come from a thorough customer perspective. Our lens engineers even go to the actual creators' sites, to see needs that the creators themselves do not notice, and incorporate them into various specifications to create innovative lenses that other companies do not have.

The most recent example is a 20-60mm standard zoom lens (full-frame) with an unprecedented wide-angle 20mm start, functions and performance. We have brought to market unique lenses based on our complete focus on the customer's point of view, such as the series of primes with unified size and weight, and the 9mm (Micro Four Thirds), which has a large aperture and ultra-wide angle but also supports macro photography. It has been very well received. [The 9mm f/1.7 has a minimum focusing distance of just 9.5cm (0.31 feet), very close indeed.]

In this way, we will continue to create innovative lenses that will support creators.

RDE: So a main point is that LUMIX lenses aren’t bound by conventional wisdom. It’s also interesting to me that the lens designers visit creator sites.

Panasonic: Yes, very frequently.

RDE: I don’t know if other companies’ designers visit creator sites or not, but this is the first time I’ve heard of it. (Although I do know that many of the SIGMA designers are photographers themselves, this is the first time I’ve heard of lens designers visiting end-user sites.)

Panasonic: Compared with SIGMA, our history is different. They have a history of over 50 years, and we have just a 20-year history. And recently, we started targeting creators, so we have lots of things to understand. So that’s why the optical engineers try to get feedback from creators.

I was asking a favorite question of mine here, wondering what technical advances not available even a few years ago allowed them to create some of their latest designs. Few photographers realize just how much lens technology has advanced in the last 5-10 years. What I got as a reply though, was that Panasonic views their focus on customer needs as much more important than any specific technologies they might employ to do so. This singular focus on end-user needs came up over and over again in our conversation. Any successful company naturally pays attention to their customers, but under Yamane-san’s guidance, Panasonic is doing so more than most.

How are things going with the video-focused GH Micro Four Thirds line?

RDE: How is the GH5 II selling, now that the GH6 is out? While the GH6 is $500 more expensive, you get an awful lot more video capability for the extra cost; I’m thinking that a lot more people will go for the GH6 than the GH5 II, is that the case?

Panasonic’s prepared answer

When considered by video creators, the GH6’s $500 price premium is evaluated based on its high-speed video processing capability from the new-generation engine, higher image quality, immersive slow-motion quality and its ability to handle all codecs. So the GHs have been doing well.

The GH5Ⅱ is also very popular. The ways it evolved from the GH5, such as LIVE streaming function, C4K60p 10bit recording, small size and the good mobility of the Micro Four Thirds format have been strongly supported by YouTubers who want to create and distribute video easily.

And while keeping the same housing and button layout as the GH5, it is equipped with a new menu system that we developed for S-Series for easier operation, so customers who are familiar with GH5 can easily upgrade their camera from a GH5 to the GH5Ⅱ.

We hope that a wider range of creators will use the GH6 and GH5II, while taking advantage of the features of Micro Four Thirds and the segmentation between the two models.

RDE: Ah, so I think the comment is that the GH5II has the same form and button layout as the GH5, but it has the S-series menu system, so GH5 users can easily upgrade; it has the same body but a different menu system, ne.

RDE: So despite my comment in the question, the GH5 II is selling very well also; both the GH5 II and GH6 are selling well. Do you have any approximate idea of where they are relative to each other?

Panasonic: Between the two, the GH6 is selling more than the GH5 Mark II. [This is probably] because when we announced the GH5 Mark II, we made a development announcement for the GH6 as well, so the customers could get the right product from the GH series. So some people wanted to wait for the GH6 to come.

My interpretation here is that they’re attributing some of the higher sales of the GH6 to pent-up demand from people who decided to hold out and wait for it vs going with the GH5 II when it was released.

RDE: It’s an over-simplification, but you could say that the GH5 Mark II is more for creators, the GH6 is more for videographers.

Panasonic: Yes, our customer base is as you said, the GH6 is more for professionals, the GH5 II is more for creators. This is what we intended for the segmentation between these two models.

RDE: I think it was a very positive move on Panasonic’s part, to announce both at the same time. As you said, then people could decide what they want, and I think that kind of openness helps them trust Panasonic more when it comes to your product plans and how to plan their purchases.

Panasonic: Also, this helps expand the customer base. We’d like to reach as many customers as possible. While we generally don’t disclose our basic strategy, that [expanding the customer base] is why we made both announcements at the same time.

Did the pre-announcement of the GH6 hurt GH5 II sales?

RDE: As just noted, I think that announcing the GH6 at the same time as the GH5 II was a very positive step, but do you think it’s hurt the sales of the GH5 II at all? Do you think you’re seeing more overall sales across the two models combined as a result of this strategy?

Panasonic’s prepared answer

At the time of the GH5Ⅱ introduction, we made a development announcement of the GH6 so that our customers could understand the concept of the long-term evolution of the GH series, and so that a wide range of customers would trust the GH series and use it for a long time.

We announced two models at the same time, thinking that we can help a wider range of creators by providing the GH6 for full-scale video production including cinema and the GH5Ⅱ for customers who want to easily distribute high-quality videos online.

This open communication has given a very positive impression to many customers. We received feedback that customers were able to firmly select the GH series model that they wanted: for example, customers who waited for the GH6 to purchase it, or customers who evaluated the unique merits of the GH5Ⅱ and purchased the GH5Ⅱ.

Who was the “unusual” developer of the GH6?

RDE: The GH6 seems to be a huge success. Yamane-san mentioned in an interview I saw online somewhere that it had an “unusual” developer, and that the same developer would be behind the next S-series camera. How is the developer unusual, and what has his history been with Panasonic, in terms of other products he worked on?

Panasonic’s prepared answer

Thank you for remembering the "unusual" developer.

Actually, the unusual developer is not just one person. As Panasonic handles a wide range of products from B2C to B2B (Business to Consumer and Business to Business), our “unusual” developers have experienced many business categories and many different job functions. Taking advantage of this diverse experience, they have participated in both full-frame and Micro Four Thirds series design, and have realized many ideas for functions that I could not have imagined. In addition to those, members with various backgrounds have worked together to develop the GH6. I believe this diversity is the strength of LUMIX.

RDE: Ah, so the “unusual” developer wasn’t one person, it’s a group.

Panasonic: Yes, the diversity in terms of many different people, coming from many different sections, different sectors of the business, with different backgrounds, that is a very good mixture to create new ideas, and not go only the conservative path.

What happened to 6K Photo?

RDE: Why were 6K photo modes removed from the GH6? I guess they’re more for still photographers, but it seems like including them wouldn’t have any downside - or would it?

For the non-Panasonic shooters in the audience, 4K/6K Photo were continuous-shooting modes that used the cameras’ video-capture capabilities to effectively let you take still images at very high frame rates. The 4K/6K designation refers to the horizontal resolution of the captured images: 4K corresponds to typical 4K video or cinema pixel dimensions, while 6K images had roughly 50% more horizontal resolution. The camera would basically grab a 4K or 6K video stream optimized for still-image extraction, but nonetheless saved on the memory card as a .mp4 file. You could then scroll through the captured video and choose the image(s) you wanted to save as JPEGs. Especially when it was first introduced, this gave way higher frames/second continuous “still” shooting than other manufacturers offered, but as noted below, it did require an extra step to extract the still photo you wanted, and the .mp4 encoding resulted in lower image quality than you’d get from images shot as stills from the get-go. This feature was dropped from the GH6.
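Because the 4K/6K labels describe horizontal resolution, the difference in total pixel count is bigger than the names suggest. Here’s a quick Python sketch, assuming round-number nominal widths of 4,000 and 6,000 pixels (illustrative values, not the cameras’ actual frame dimensions) and the same aspect ratio for both:

```python
def pixel_count(width: int, aspect_w: int, aspect_h: int) -> int:
    """Total pixels in a frame of the given width and aspect ratio."""
    height = width * aspect_h // aspect_w
    return width * height


# Hypothetical nominal widths; both frames assumed to share a 4:3 aspect ratio.
w4k, w6k = 4000, 6000
ratio_width = w6k / w4k
ratio_pixels = pixel_count(w6k, 4, 3) / pixel_count(w4k, 4, 3)
print(ratio_width, ratio_pixels)  # 1.5 2.25
```

With a shared aspect ratio, 50% more width also means 50% more height, so 1.5 × 1.5 = 2.25 times as many pixels per frame.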

Panasonic’s prepared answer

"Capture the decisive moment, utilizing the technology we have cultivated in video to allow high-speed continuous shooting." -- this was the idea behind 4K Photo, first realized with the GH4. Since then, it evolved into 6K Photo with the GH5. But the feedback from customers was that 4K and 6K Photo have the weakness that they require one additional step to cut out one frame of image from the video, so as a result the usage was limited.

We have had many internal discussions, and we eventually agreed that full-pixel ultra-high-speed electronic shutter continuous shooting should be the ultimate goal. From that aspect, ever since the G9, we have been actively working on the realization of ultra-high-speed full-pixel continuous shooting with an electronic shutter. In that direction, we equipped the GH6 with 75fps SH continuous shooting, so we decided to take out 6K photo mode in order to avoid complications due to the provision of many similar functions.

However, we are also hearing the voices of customers who are demanding the revival of the value-added functions that 4K/6K Photo has had so far. For example, continuous pre-shooting. Consideration has already begun on how to realize this for the future. We will continue to listen to customer feedback and consider the optimal provision method.

“Continuous pre-shooting” above means the camera begins continuously capturing frames before the shutter button is pressed, then keeps capturing for a little while after the shutter is triggered. This is extremely helpful when you’re trying to get *just* the right moment, as a bat or a player’s foot hits a ball, a horse goes over a jump, etc. This was part of the 4K/6K Photo feature set that isn’t present in the GH6. Panasonic is considering how to add that feature back in. I didn’t ask, but I expect this would be a feature for future cameras rather than something that could be added to the GH6 via a firmware update.
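One common way to build something like pre-shooting is a ring buffer: the camera keeps only the most recent N frames in memory, discarding the oldest as new ones arrive, then freezes that buffer when the shutter fires and keeps recording for a few more frames. The Python sketch below is a hypothetical illustration of that idea; the class name and frame counts are my own assumptions, not how Panasonic implements it:

```python
from collections import deque


class PreShootBuffer:
    """Hypothetical pre-shooting buffer: holds the last `pre_frames` frames
    before the trigger, then records `post_frames` more after it."""

    def __init__(self, pre_frames: int, post_frames: int):
        self.pre = deque(maxlen=pre_frames)  # oldest frames drop off automatically
        self.post_frames = post_frames
        self.burst = None  # becomes a list once the shutter fires
        self.remaining = 0

    def trigger(self) -> None:
        # Freeze the pre-trigger frames and start counting post-trigger frames.
        self.burst = list(self.pre)
        self.remaining = self.post_frames

    def push(self, frame) -> None:
        if self.burst is None:
            self.pre.append(frame)  # still waiting: keep rolling
        elif self.remaining > 0:
            self.burst.append(frame)  # after the trigger: keep a few more
            self.remaining -= 1

    def done(self) -> bool:
        return self.burst is not None and self.remaining == 0


# Frames are just numbers here; the "decisive moment" happens after frame 9.
buf = PreShootBuffer(pre_frames=3, post_frames=2)
for f in range(10):
    buf.push(f)
buf.trigger()
for f in range(10, 20):
    buf.push(f)
    if buf.done():
        break
print(buf.burst)  # [7, 8, 9, 10, 11]
```

The key point is that frames 7 to 9 were captured before the photographer reacted, which is exactly what makes pre-shooting useful for fast action.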

The GH6’s sensor has a LOT of bit-depth, will we see that in future LUMIX models too?

RDE: The GH6 appears to have a very advanced ADC; does the ADC itself have a resolution of 14 or 16 bits? Will we see this technology in other cameras going forward?

Panasonic’s prepared answer

I cannot disclose the specifications of the image sensor in detail, but it does have a 16-bit capability, although it varies depending on the camera mode (still image or video mode).

This is the technology that we have been developing with great intention to realize Micro Four Thirds dynamic range with almost the same level as full frame in the development of the GH6. I can't tell you about the products in the future, but since the launch of the GH6, it has been very well received by customers, and I would like to enthusiastically explore the possibilities about the future.

Bit depth refers to how many bits the A/D (analog/digital) converters in a camera’s sensor use to record each brightness reading, which sets how many distinct levels of brightness they can distinguish. Each additional bit doubles the number of levels, or the resolution of the converter. (Not to be confused with image resolution; we’re now talking only about the conversion of pixel voltages to numbers to be sent to the image processor.) An 8-bit A/D converter can resolve 256 different brightness levels, a 9-bit one 512 levels, and so on. The GH6’s sensor chip has 16-bit A/D converters, meaning they can measure 65,536 different brightness levels. (AFAIK, most modern camera sensors have 12- to 14-bit converters, skewing, I think, towards the 14-bit end of the scale.)
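The arithmetic behind those level counts is just powers of two, as this tiny Python sketch shows:

```python
def adc_levels(bits: int) -> int:
    """Number of distinct output codes an n-bit A/D converter can produce."""
    return 2 ** bits


for bits in (8, 9, 12, 14, 16):
    print(bits, adc_levels(bits))
# 8 256
# 9 512
# 12 4096
# 14 16384
# 16 65536
```

Going from 14 to 16 bits quadruples the number of levels, which is why the GH6’s converters stand out.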

Bit depth translates directly to dynamic range, or the range between the faintest and the brightest signals a sensor can measure, with an important caveat. That caveat has to do with how much noise there is in the system; the more noise there is, the less dynamic range you have. You can think of it like trying to hear a conversation with traffic noise in the background. A conversation you could make out clearly in a perfectly quiet room wouldn’t be audible at all at a busy intersection.

All this is just to point out that having a 16-bit A/D converter doesn’t necessarily mean you’re going to get 16 bits of dynamic range. That said, though, if you do a good job designing your sensor pixels and readout circuitry, you may be able to get reasonably close. If everything else between the two were kept the same, the larger pixels of a full-frame sensor would generally have lower noise levels than the smaller ones on a Micro Four Thirds chip. Nonetheless, the GH6’s 16 bits of brightness resolution is a big help towards extracting the maximum dynamic range possible from its pixels.
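To make the noise caveat concrete, here’s a simplified model (my assumption, not a Panasonic formula): the analog dynamic range in stops is log2 of the largest signal a pixel can hold divided by its noise floor, and the ADC can’t report more stops than it has bits, so the usable figure is the smaller of the two. The electron counts below are hypothetical round numbers, not GH6 specifications, and the model ignores shot noise, gain settings and quantization noise:

```python
import math


def dynamic_range_stops(full_well_e: float, read_noise_e: float, adc_bits: int) -> float:
    """Rough engineering dynamic range in stops (EV).

    analog limit: log2(largest signal / noise floor)
    digital limit: the ADC's bit depth
    """
    analog_stops = math.log2(full_well_e / read_noise_e)
    return min(analog_stops, adc_bits)


# Hypothetical pixel: 20,000-electron capacity, 2-electron noise floor.
print(round(dynamic_range_stops(20000, 2.0, 16), 2))  # 13.29 (pixel-limited)
print(dynamic_range_stops(20000, 2.0, 12))            # 12 (ADC-limited)
print(round(dynamic_range_stops(20000, 4.0, 16), 2))  # 12.29 (twice the noise)
```

In this toy model, a 16-bit converter stops being the bottleneck, leaving the pixel’s own noise as the limit; doubling the noise costs exactly one stop.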

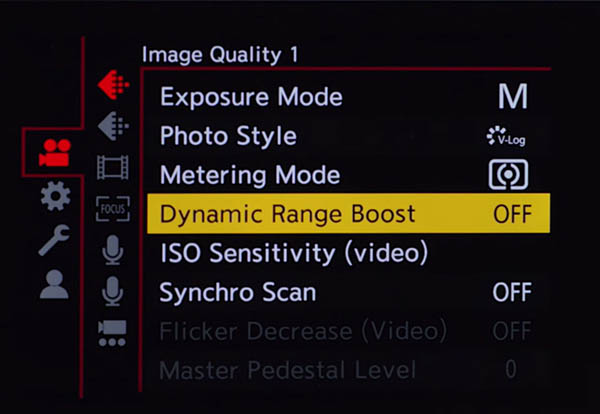

How does the GH6’s DR (dynamic range) boost mode work?

RDE: The GH6’s DR Boost mode sets the native ISO to 2000, and apparently works very well. I’m curious how this is implemented: Is there any amplification used in the readout process (such as a smaller readout capacitor, or an amplifier in the signal path), or is it just a matter of taking advantage of the unusual bit depth of its ADC?

Panasonic’s prepared answer

Dynamic range boost is an elemental technology in the image sensor that I mentioned earlier, and each pixel has two output systems, a "low ISO circuit that can obtain high saturation" and a "high ISO circuit that can obtain low noise characteristics".

It’s similar to the "dual native ISO technology" of GH5S and S1H, but with GH6, it has evolved one step further.

Specifically, these two output images obtained by a single exposure can be optimally combined and output for each pixel.

This makes it possible to obtain smooth, wide dynamic range images with rich gradation while suppressing noise.

RDE: Ah, that’s very interesting. So there are two entirely separate readout circuits for each pixel, and you can collect data from both of them at the same time? Or is it that the entire sensor is being read out twice, once with the low sensitivity, once with the high sensitivity circuits? - In dynamic range boost mode, it sounds like the entire sensor is read out twice, once at low ISO, once at high ISO. Is that true, it’s read out twice and the results are combined?

Panasonic: Yes, that’s right. DR Boost reads out the low ISO and high ISO, and then combines them into one value for each pixel.

This was very unexpected, and I’m not sure how they might have implemented it at a pixel level. Regardless, it’s interesting that they actually read out the entire sensor twice in DR Boost mode, and then the camera’s processor combines the two images together into a final result. I don’t know if anyone else does this, but this is the first time I’ve heard of the approach. It obviously relies on very fast sensor readout, and a powerful image processor to combine the images.
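Panasonic didn’t say how the two readings are “optimally combined.” For illustration only, here’s one simple way a dual-gain merge could work for a single pixel. This is my own hypothetical scheme, not Panasonic’s: use the low-noise high-gain reading (rescaled by the gain ratio) while it’s safely below clipping, and fall back to the high-saturation low-gain reading once it isn’t. The 90% threshold and the 4x gain ratio are assumptions:

```python
def merge_dual_gain(low: int, high: int, gain_ratio: float, high_max: int) -> float:
    """Merge one pixel's low-gain and high-gain readings into a single value
    on the low-gain scale (hypothetical scheme, for illustration).

    - Shadows/midtones: the high-gain reading has lower noise, so use it,
      divided by the gain ratio to match the low-gain scale.
    - Highlights: the high-gain path clips first, so use the low-gain reading.
    """
    threshold = 0.9 * high_max  # assumed safety margin below clipping
    if high < threshold:
        return high / gain_ratio
    return float(low)


# Hypothetical 16-bit readings with a 4x gain difference between the two paths.
shadow = merge_dual_gain(low=100, high=400, gain_ratio=4.0, high_max=65535)
highlight = merge_dual_gain(low=30000, high=65535, gain_ratio=4.0, high_max=65535)
print(shadow, highlight)  # 100.0 30000.0
```

A real implementation would most likely blend the two readings across a transition band rather than switching abruptly, so that smooth gradients don’t show a visible seam where one source hands off to the other.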

Why no DR Boost for 4K/120p video?

RDE: Why is the DR Boost mode not available for 4K/120 video? Is it a matter of needing to run the ADC at lower bit depth to get that frame rate? [I didn’t know what the answer to the previous question would be when I submitted this one before our meeting.]

Panasonic’s prepared answer

As explained above, Dynamic Range Boost combines two output images obtained with a single exposure and outputs them as a single wide-gradation image. But it was not possible, in time for the GH6, to reach a video frame rate above 60p, that is, the 100p or 120p class, while fully scanning the image sensor.

Conversely, it can be said that this is the way to grow in the future. We would like to continue to evolve in the future, so please look forward to it.
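Panasonic’s explanation boils down to a readout-time budget. As a rough sketch, assuming (my assumption) that DR Boost needs two full-sensor scans per frame and that nothing else eats into the frame time, the highest possible frame rate is 1 / (scan time × number of scans). The 7 ms scan time below is purely hypothetical, not a GH6 figure:

```python
def max_frame_rate(scan_time_ms: float, scans_per_frame: int) -> float:
    """Upper bound on frames per second if each frame needs `scans_per_frame`
    full-sensor readouts of `scan_time_ms` each (ignores all other overhead)."""
    return 1000.0 / (scan_time_ms * scans_per_frame)


scan_ms = 7.0  # hypothetical full-sensor scan time
print(round(max_frame_rate(scan_ms, 1), 1))  # 142.9: a single scan leaves room for 120p
print(round(max_frame_rate(scan_ms, 2), 1))  # 71.4: two scans only fit 60p
```

At 120p each frame lasts only about 8.3 ms, so doubling the readout work per frame quickly runs out of time unless the sensor scan itself gets faster, which is presumably the “way to grow” Panasonic alludes to.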

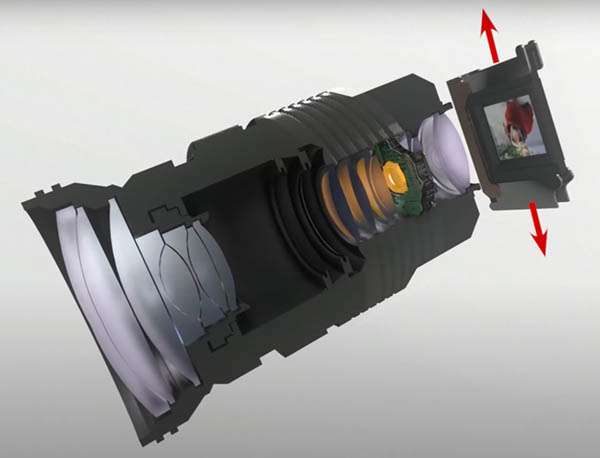

How did they manage such a big step up in IS performance?

(For a deep inside look at how IS works, check out my "Geek's Tour of Image Stabilization".)

RDE: The GH6 also has a very high-performing in-body image stabilization system (7.5 stops). What was the big advance that let you achieve such a significant step up? Is it mainly down to having a better accelerometer chip than was available previously?

Panasonic’s prepared answer

Strictly speaking, it is supported by two factors.

One is "improvement of blur detection accuracy". As you said, the new adoption of an ultra-high-precision gyro sensor and a newly developed algorithm to make full use of its performance have resulted in a significant increase in performance.

In addition, we have incorporated new ideas into "improving the accuracy to move the image stabilization unit". Specifically, we reviewed the magnet configuration, the mechanical configuration of the position sensor and the operation algorithm.

Also the operation accuracy of the image stabilization unit itself has been improved.

Through these efforts, we have been able to achieve 7.5 stops when shooting still images, and image stabilization performance that excellently distinguishes between panning and blur when shooting videos.

It was a combination of essentially everything that makes up an IBIS system: They’re using a very high-precision gyro to measure camera shake, they improved the accuracy of the electromechanical system that moves the sensor and developed a new algorithm to control it all that’s optimized for the new configuration.
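To give a feel for what the gyro feeds into, here’s a deliberately simplified, single-axis Python sketch of sensor-shift stabilization (my own illustration, not Panasonic’s algorithm): integrate the gyro’s angular-rate readings into a tilt angle, then compute how far the image moves on the sensor, roughly focal length × tan(angle), which is how far the sensor would have to follow it. The 1 kHz sample rate, 50mm focal length and 0.5°/s shake rate are hypothetical, and the sign convention is arbitrary:

```python
import math


def sensor_shift_mm(gyro_rates_dps, dt_s: float, focal_length_mm: float):
    """Integrate gyro angular-rate samples (degrees/second) into a tilt angle
    and return, for each sample, the image displacement on the sensor (mm)
    that the stabilizer would need to follow: f * tan(angle).

    Single-axis toy model: real systems also remove gyro drift, handle roll,
    and run a closed position loop on the actuator.
    """
    angle_deg = 0.0
    shifts = []
    for rate in gyro_rates_dps:
        angle_deg += rate * dt_s  # simple rectangular integration
        shifts.append(focal_length_mm * math.tan(math.radians(angle_deg)))
    return shifts


# 10 ms of a steady 0.5 deg/s drift, sampled at 1 kHz, with a 50mm lens.
shifts = sensor_shift_mm([0.5] * 10, dt_s=0.001, focal_length_mm=50.0)
print(f"{shifts[-1] * 1000:.2f} um")  # 4.36 um
```

Even this toy version shows why gyro precision matters: a tilt of just 0.005° moves the image by a few microns, comparable to the pixel pitch of modern sensors, so small measurement errors translate directly into residual blur.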

Why is the GH6’s IBIS system 2x (1 stop) better than that in the S5?

RDE: The S5’s IBIS is “only” good to 6.5 stops of improvement. What’s the main reason for the difference between the S5 and GH6? Is it mainly the difference in sensor size, or were other factors involved (like the specs of accelerometers that were available when it was being designed)?

Panasonic’s prepared answer

Certainly, there are differences in the IBIS mechanism due to the difference in sensor size, but it is mainly due to the difference in the gyro sensor used and the blur control algorithm optimized for that gyro sensor.

RDE: Ah, so it’s mainly a limitation of the gyro sensor. And the blur control is optimized as appropriate for each gyro sensor.

Panasonic: As for that answer, it’s true that the big difference is the gyro sensor itself. However, the engineering mind may say that there are many factors involved. So this is the official answer, but the engineers might have a different answer.

RDE: Ah, of course. There are naturally many aspects involved, so the answer about the gyro being the specific limit with the S5 doesn’t mean that it’s that big a limitation vs the rest of the system. It’s not like the rest of the system is capable of 7.5 stops but the gyro is limiting it to 6.5. The gyro may be the limiting factor, but only by a little bit.

Panasonic: Yes.
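Stabilization ratings are quoted in stops, and each stop doubles the usable shutter time, which is where the “2x (1 stop)” comparison between the GH6 and S5 comes from. A small Python sketch, assuming a hypothetical 1/50s unstabilized handheld baseline (formal ratings come from a standardized test procedure, not this simple arithmetic):

```python
def stabilization_factor(stops: float) -> float:
    """Each stop of stabilization doubles the usable shutter time."""
    return 2.0 ** stops


print(stabilization_factor(7.5 - 6.5))  # 2.0: GH6 vs S5

baseline_s = 1 / 50  # hypothetical unstabilized handheld limit
print(round(baseline_s * stabilization_factor(7.5), 2))  # 3.62 seconds
print(round(baseline_s * stabilization_factor(6.5), 2))  # 1.81 seconds
```

On paper, the one-stop difference is the gap between a roughly 1.8-second and a roughly 3.6-second handheld exposure from the same starting point.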

Why is the S5’s rolling shutter worse when shooting stills vs video?

RDE: The S5’s rolling shutter is much more pronounced when shooting stills with the electronic shutter than when recording even 4K full-frame video. Why is this? I’d think that the sensor-scanning rate for stills would be as fast as for video.

Panasonic’s prepared answer

This is because different image sensor drive methods are used depending on the mode in the camera. The electronic shutter does not necessarily mean that the scanning speed of still images and movies is the same, and the optimum image sensor drive method is used according to the shooting mode. I can't tell you the details, but for example, still images use a drive that emphasizes image quality, and video uses a drive that emphasizes higher speed.

RDE: Ah, that makes sense; the requirements for generating a video signal are different than what you need or want for a still image, so you scan the sensor differently depending on whether you’re capturing stills or video, and that is why rolling shutter is greater when shooting stills.

Panasonic: In order to capture a still picture, single-frame picture quality is very important, so in order to improve the single-frame performance, the drive method is focused on still picture making. And video is different, it’s not frame by frame.

RDE: Ah yes, in video, especially when you’re encoding it, you’re looking at the previous frames and the next frames, and when you’re doing that, you can do noise reduction across multiple frames - but when capturing a still image, that one frame is all you have to work with, so you need to do everything you can to maximize the quality of that single image.
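The rolling-shutter difference discussed above comes down to how long the sensor takes to scan from top to bottom. Rows are read one after another, so a subject moving sideways keeps moving while its top and bottom rows are being captured. Here’s a simple Python sketch; the readout times and subject motion are hypothetical values, not S5 specifications:

```python
def rolling_shutter_skew_px(speed_px_per_s: float, readout_time_s: float,
                            subject_rows: int, sensor_rows: int) -> float:
    """Horizontal offset (pixels) between the top and bottom of a moving subject.

    A subject spanning `subject_rows` of the sensor is captured over that
    fraction of the full readout time, and it keeps moving sideways meanwhile.
    """
    time_across_subject = readout_time_s * subject_rows / sensor_rows
    return speed_px_per_s * time_across_subject


# Hypothetical: subject covers half of a 4,000-row sensor, moving 2,000 px/s.
fast_scan = rolling_shutter_skew_px(2000, 1 / 60, 2000, 4000)  # speed-oriented drive
slow_scan = rolling_shutter_skew_px(2000, 1 / 15, 2000, 4000)  # quality-oriented drive
print(round(fast_scan, 2), round(slow_scan, 2))  # 16.67 66.67
```

Skew scales linearly with readout time, so a drive mode that takes four times as long to scan the sensor produces four times the lean, even if it yields a cleaner single frame.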

How did you achieve the lower shutter shock in the GH6?

RDE: I haven’t tested it myself, but I’ve heard that shutter shock is noticeably reduced in the GH6. How was the shutter mechanism designed to accomplish this reduction?

Panasonic’s prepared answer

Regarding the quietness of the mechanical shutter mechanism, we believe that over our long history of mirrorless camera development, we have accumulated the know-how to realize it. I can't reveal the details, but I think that not only the GH6 but also the S5 is good at quietness. On the other hand, comparing the GH6 and S5, the GH6’s imaging area is about 1/4 that of the S5, so there is a considerable difference in shutter impact energy; I think that may be why you saw a significant reduction. I think you may have reaffirmed that the Micro Four Thirds system has advantages not only in terms of mobility, small size and light weight, but also in this quietness.
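The “about 1/4” area figure is easy to check from nominal sensor dimensions. This Python snippet is only the area arithmetic; how shutter impact energy scales with area is Panasonic’s point, not something the calculation models:

```python
# Nominal sensor dimensions in millimetres (approximate, format-typical values).
mft_w, mft_h = 17.3, 13.0  # Micro Four Thirds
ff_w, ff_h = 36.0, 24.0    # full frame

area_ratio = (mft_w * mft_h) / (ff_w * ff_h)
print(round(area_ratio, 2))  # 0.26
```

That works out to roughly 225 mm² vs 864 mm², so a Micro Four Thirds shutter has about a quarter of the opening to cover.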

Panasonic: Which model were you comparing it to in this question?

RDE: This was based on either a comment or a mention in marketing material; I’m not sure of the source, but somehow I had it in my mind that it had been reduced, presumably from the GH5.

Panasonic: We have constantly tried to improve shutter shock. It’s directly related to image stabilization, so this is a kind of a tradeoff. Our philosophy is that we reduce the shutter shock, which might be related to the shutter speed. So speed vs stabilization, it’s a kind of a tradeoff. So we made an optimum balance between these two. That’s our philosophy on the shutter; we are making continuous improvements.

RDE: So the shutter mechanism itself is different than in previous cameras, to reduce the shock?

Panasonic: Honestly speaking, that’s not a change.

RDE: But the IS system is able to react quickly enough to reduce the effect?

Panasonic: The shutter itself is the same, but in order to reduce the shutter shock’s effect on the image, the mounting of the shutter has been improved from the GH5.

RDE: Ah, so some of it’s how the shutter mechanism is suspended within the body. But it is true that the IS system is able to react quickly enough to the vibration from the shutter to be able to stabilize the image?

Panasonic: In the past, with SLR cameras (Nikon, Canon, etc.), companies were very keen about the sound of the shutter itself. The photographers were very loyal; they felt very good about the sound of the shutter. But we are now focusing on reducing the [vibration] to improve the image with stabilization. Maybe in the future, though, we will have to consider the sound of the shutter, so that photographers will be happy when they shoot photos.

RDE: Maybe that’s the next thing; instead of custom ringtones on your phone, you’ll be able to have custom shutter sounds on your camera. So on my camera I’ll be able to have it make a Canon, Nikon or Leica shutter sound. <much laughter>

Panasonic: Although Nikon stuck to the sound and the feeling of the shutter for a long time, finally with the Z9 they took a different direction.

RDE: Ah yeah, they went all-electronic.

RDE: So if the IS system can compensate for shutter shock, is it possible that, even when the IS feature is turned off, it would still compensate for the shutter shock, or do you have to have IS turned on?

Panasonic: Yes. [When the IS is off, it’s off; you need to turn it on if you want it to compensate for shutter shock.]

I found it interesting that the GH6 actually uses the same shutter mechanism as the GH5 did - so the improvement in shutter shock is all down to (a) the mounting arrangement that holds the shutter mechanism in place within the body, and (b) the IS system being able to do a better job of compensating for it.

My perhaps odd-seeming question about whether the IS system would correct for shutter shock even when the IS system as a whole was turned off came from me wondering whether there might be a mode in which it would look only for shutter vibration, and only compensate for that, vs normal camera shake. I would expect shutter shock vibration to be at a much higher frequency than normal hand shake.
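My hunch that shutter shock sits at a much higher frequency than hand shake suggests how such a mode could work in principle: filter the gyro signal so that slow motion is ignored and only fast vibration is passed on to the stabilizer. Below is a purely hypothetical Python sketch using a first-order high-pass filter. The 2 Hz shake, 300 Hz vibration, 50 Hz cutoff and 4 kHz sample rate are all made-up illustrative values, and I have no information that Panasonic does anything like this:

```python
import math


def high_pass(samples, sample_rate_hz: float, cutoff_hz: float):
    """First-order IIR high-pass filter: attenuates slow motion below the
    cutoff and passes fast vibration above it."""
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = rc / (rc + dt)
    out = []
    prev_x = samples[0]
    prev_y = 0.0
    for x in samples:
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


fs = 4000.0
t = [n / fs for n in range(int(fs))]  # one second of samples
hand = [math.sin(2 * math.pi * 2 * ti) for ti in t]           # slow hand shake
shock = [0.1 * math.sin(2 * math.pi * 300 * ti) for ti in t]  # fast shutter vibration

half = len(t) // 2  # skip the filter's start-up transient
hand_peak = max(abs(v) for v in high_pass(hand, fs, 50.0)[half:])
shock_peak = max(abs(v) for v in high_pass(shock, fs, 50.0)[half:])
print(round(hand_peak, 3), round(shock_peak, 3))
```

With these numbers, the hand-shake component is cut to a few percent of its original amplitude while the 300 Hz component passes almost unchanged, which is the kind of separation a “shutter-shock-only” mode would need.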

Summary

This was yet another interesting conversation with Yamane-san and the team at Panasonic; sincere thanks again to Yamane-san and the engineers for preparing such excellent answers to my questions ahead of time: They enabled me to go into much greater depth than I would have been able to otherwise. I learned a lot about a wide range of topics, and am looking forward to my next visit whenever I can arrange it!